Belen Alastruey

@b_alastruey

Followers: 768 · Following: 243 · Media: 25 · Statuses: 95

PhD student @AIatMeta & @PSL_univ. Previously: @amazon Alexa, @apple MT, @mtupc1

Barcelona, Spain

Joined November 2021

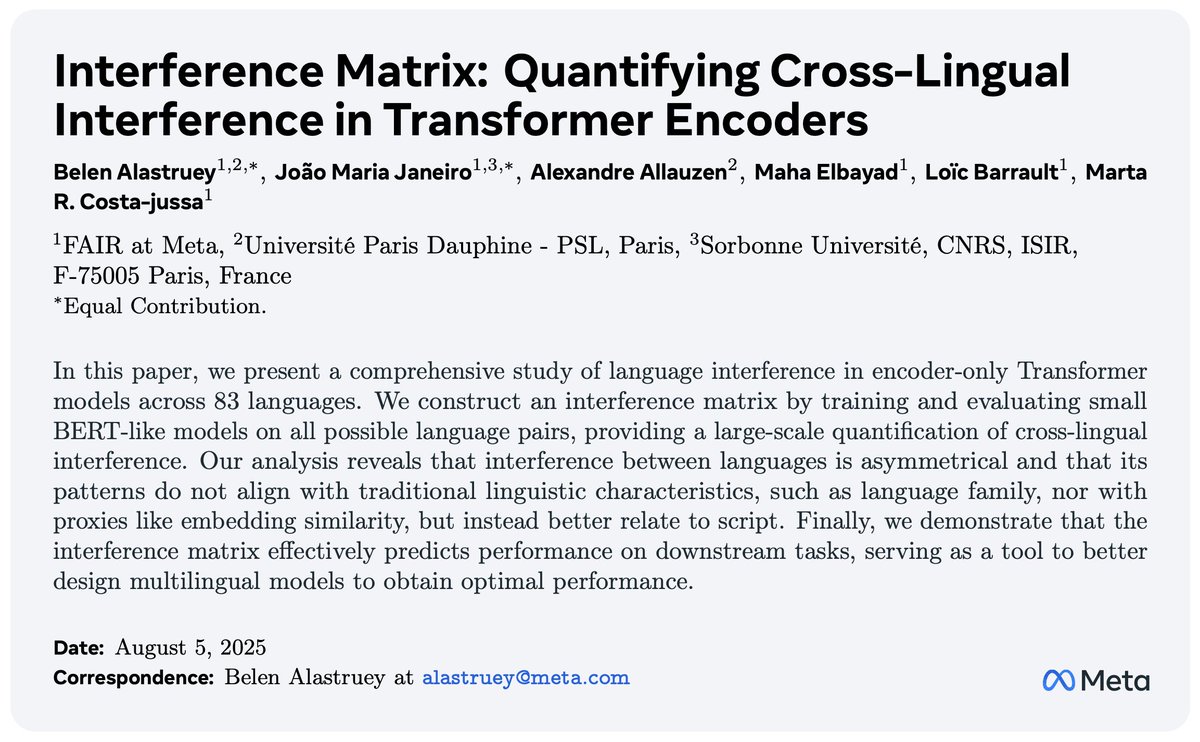

🚀 New paper alert! 🚀 In our work at @AIatMeta, we dive into the struggles of mixing languages in massively multilingual Transformer encoders, and we use the analysis as a tool to better design multilingual models for optimal performance. 📄: 🧵(1/n)

RT @JoaoMJaneiro: If you are attending ACL2025, join our oral presentation! It's happening at 15:00 in room 1.86 🙂

RT @eduardosg_ai: Happy to see that Linguini, our benchmark for language-agnostic linguistic reasoning, has been included in DeepMind’s BIG….

TL;DR: We conduct the first-ever analysis of the training dynamics of ST systems. Based on these results, we adjust the Transformer architecture to improve performance while bypassing the pretraining stage. We're thrilled to share these findings at #EMNLP! See you in Miami! 🏖️

🚨 New #EMNLP Main paper 🚨 What is the impact of ASR pretraining on Direct Speech Translation models? 🤔 In our work, we use interpretability to find out, and we use the findings to skip the pretraining! 🔎📈 w/ @geiongallego @costajussamarta 📄: 🧵(1/n)

RT @JoaoMJaneiro: Last week we released the first paper of my PhD, "MEXMA: Token-level objectives improve sentence representations". We….

Happy to share Linguini🍝, a benchmark to evaluate linguistic reasoning in LLMs without relying on prior language-specific knowledge. We show that the task is still hard for SOTA models, which achieve below 25% accuracy. 📄:

🚨 NEW BENCHMARK 🚨 Are LLMs good at linguistic reasoning if we minimize the chance of prior language memorization? We introduce Linguini🍝, a benchmark for linguistic reasoning on which SOTA models score below 25%. w/ @b_alastruey, @artetxem, @costajussamarta et al. 🧵(1/n)
