Ao Zhang

@zhanga6

Followers

666

Following

165

Media

10

Statuses

65

Ph.D student @NUSingapore. Core contributors of GR00T N1, MiniCPM-V, NExT-Chat. Research on MLLMs.

Central Region, Singapore

Joined January 2016

🚀NExT-Chat🚀: An LMM for Chat, Detection and Segmentation.All of the demo code, training code, evaluation code, and model weights are released at This a large multimodal model for chat, detection and segmentation as shown in the demo video:

3

15

49

Like this interesting work❤️! Generate LLM params for new tasks in 1 sec!.

Customizing Your LLMs in seconds using prompts🥳!.Excited to share our latest work with @HPCAILab, @VITAGroupUT, @k_schuerholt, @YangYou1991, @mmbronstein, @damianborth : Drag-and-Drop LLMs(DnD). 2 features: tuning-free, comparable or even better than full-shot tuning.(🧵1/8)

2

1

2

Wow, new release is coming 🥳!.

#NVIDIAIsaac GR00T N1.5 is now accessible to #robotics developers working with a wide range of robot form factors, and available to download from @huggingface. 🎉. Dive into our step-by-step tutorial to learn how to easily post-train and adapt it to the LeRobot SO-101 arm, and

0

0

1

🚀So excited to share our recent work! GR00T N1 is a 2B model for humanoid robots, which is validated on a series of sim and real robot benchmarks🙌! Try it out!.

Excited to announce GR00T N1, the world’s first open foundation model for humanoid robots! We are on a mission to democratize Physical AI. The power of general robot brain, in the palm of your hand - with only 2B parameters, N1 learns from the most diverse physical action dataset

0

0

10

Why are MiniCPM-V series so powerful😆?.

💥 Introducing MiniCPM-o 2.6: An 8B size, GPT-4o level Omni Model runs on device .✨ Highlights: .~Match GPT-4o-202405 in vision, audio and multimodal live streaming .~End-to-end real-time bilingual audio conversation ~Voice cloning & emotion control .~Advanced OCR & video

0

0

1

Real-time video generation🤩🤩🤩. Congrats to Xuanlei and Kai.

Real-Time Video Generation: Achieved 🥳. Share our latest work with @JxlDragon, @VictorKaiWang1, and @YangYou1991: "Real-Time Video Generation with Pyramid Attention Broadcast.". 3 features: real-time, lossless quality, and training-free!. Blog: (🧵1/6)

0

0

3

RT @FuxiaoL: Thanks for my excellent collaborators @zhanga6, @imhaotian, Hao Fei, Yuan Yao, @zhangzhuosheng. Our.@CVPR tutorial on "From Mu….

0

6

0

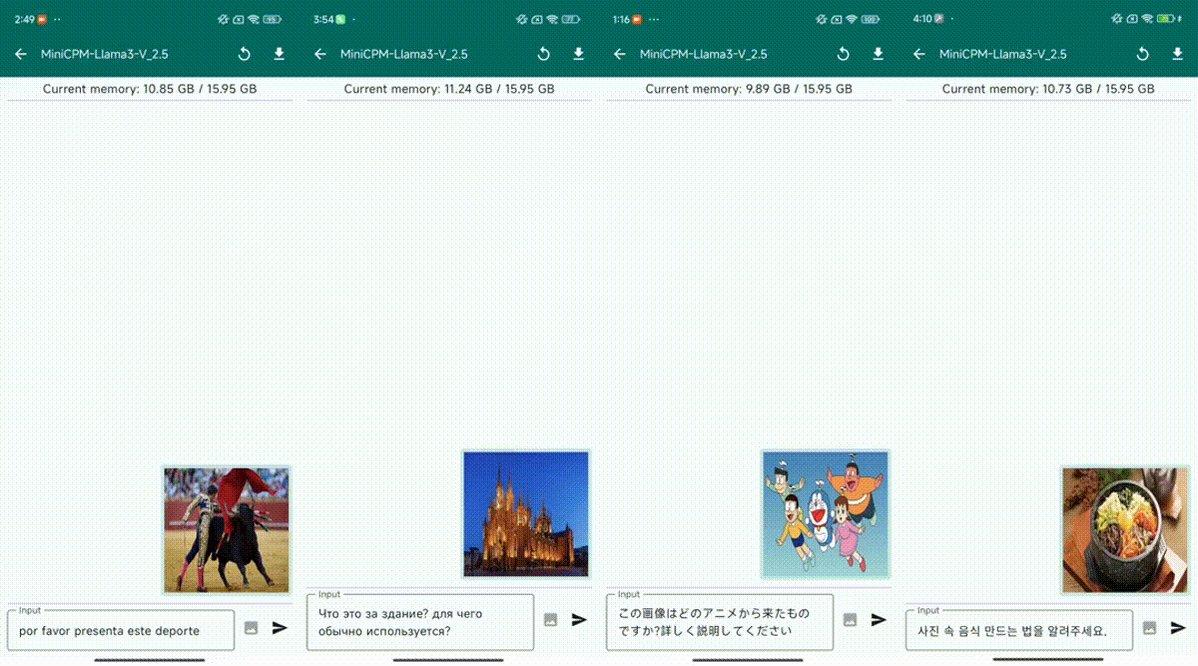

After receiving the issue from @yangzhizheng1 on GitHub, we launched a serious investigation. We can obtain inference results correctly using Llama3-V checkpoint with MiniCPM-Llama3-V 2.5's code and config file following @yangzhizheng1's instruction on GitHub. Even more, we also.

1

2

29

Comparable to GPT-4V with only 8b param😱. Welcome to check out our new MiniCPM-Llama3-V 2.5.

🚀 Excited to introduce MiniCPM-Llama3-V 2.5! With 8B parameters, it’s our latest breakthrough, outperforming top models like GPT-4V. 📈.💪 Superior OCR capabilities.🔑 Supports 30+ languages.HuggingFace:GitHub:

0

0

4

Shocked by the performance💥!. Also solve my long confuse about which version of ChatGPT to use for eval.

[p1] 🐕Direct Preference Optimization of Video Large Multimodal Models from Language Model Reward🐕. Paper link: page: How to effectively train video large multimodal Model (LMM) alignment with preference modeling?

0

0

0

RT @arankomatsuzaki: Some people criticize next token prediction like "we should also predict more future tokens beyond the immediate futur….

0

69

0