Chaojun Xiao

@xcjthu1

Followers: 242
Following: 4
Media: 4
Statuses: 19

PhD Student @TsinghuaNLP @OpenBMB, LLM

Joined March 2021

We release Ultra-FineWeb, a high-quality pre-training corpus with 1.1T tokens!!

🚀 Introducing Ultra-FineWeb 🔥
- ~1T English and 120B Chinese tokens!
- Training fuel of MiniCPM4!

🎯 Highlights
- Efficient Verification Strategy: Reduces data verification cost by 90%
- High-Efficiency Filtering Pipeline: Optimizes selection of both positive and negative samples

Efficiently scaling the context length!!!!

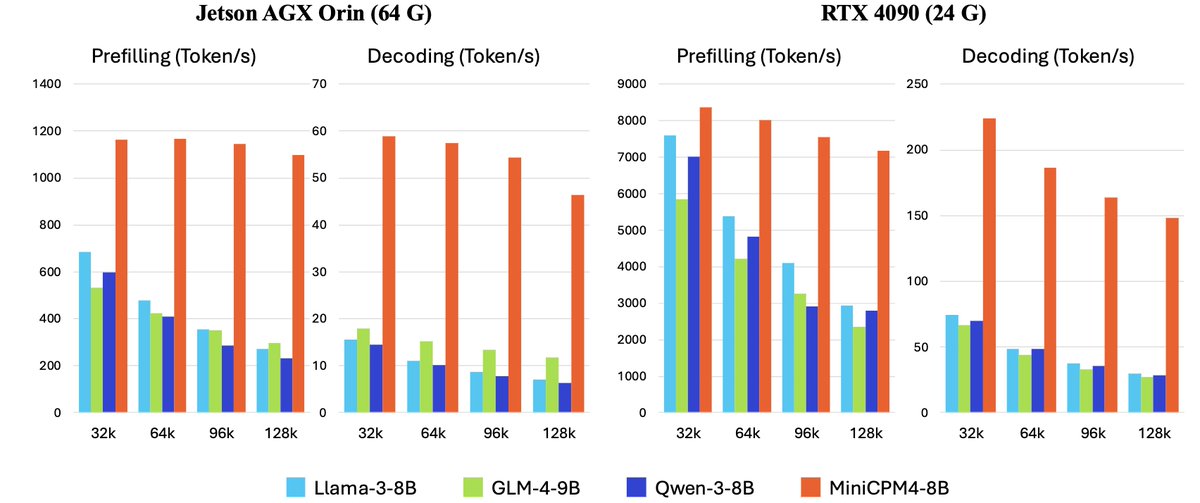

🚀 MiniCPM4 is here! 5x faster on end devices 🔥

✨ What's new:

🏗️ Efficient Model Architecture
- InfLLM v2 -- Trainable Sparse Attention Mechanism

🧠 Efficient Learning Algorithms
- Model Wind Tunnel 2.0 -- Efficient Predictable Scaling
- BitCPM -- Ultimate Ternary Quantization

RT @ZhiyuanZeng_: Is a single accuracy number all we can get from model evals? 🤔
🚨 Does NOT tell where the model fails
🚨 Does NOT tell how to…

RT @nlp_rainy_sunny: (Repost) We are thrilled to introduce our new work 🔥#SparsingLaw🔥, a comprehensive study on the quantitative scaling p…

RT @TsinghuaNLP: Pre-trained models show effectiveness in knowledge transfer, potentially alleviating data sparsity problem in recommender…

RT @TsinghuaNLP: Welcome to the @TsinghuaNLP Twitter feed, where we'll share new researches and information from TsinghuaNLP Group. Looking…