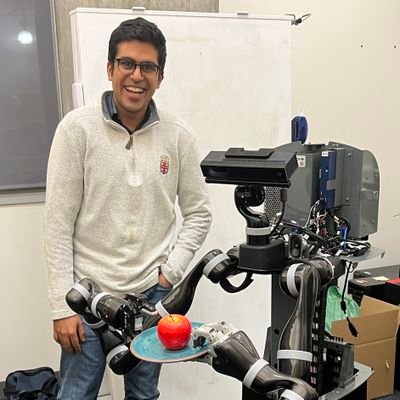

Yusen Luo

@yusen_2001

Followers: 31 · Following: 138 · Media: 0 · Statuses: 10

USC MSCS | RA at Lira Lab | Interested in Robot Learning & Robot Foundation Models | 🚀 Applying to PhD programs for Fall 2026!

Joined August 2023

We’re excited to release the code for our CoRL 2025 (Oral) paper: “ReWiND: Language-Guided Rewards Teach Robot Policies without New Demonstrations.” 🌐 Website: https://t.co/0yLFfgUn5O 📄 Arxiv: https://t.co/H0EU1IpfT6 💻 Code: https://t.co/BPgbtsRkNm

https://t.co/OoeZSiFE8E

Reward models that help real robots learn new tasks—no new demos needed! ReWiND uses language-guided rewards to train bimanual arms on OOD tasks in 1 hour! Offline-to-online, lang-conditioned, visual RL on action-chunked transformers. 🧵

How can we help *any* image-input policy generalize better? 👉 Meet PEEK 🤖 — a framework that uses VLMs to decide *where* to look and *what* to do, so downstream policies — from ACT, 3D-DA, or even π₀ — generalize more effectively! 🧵

Thrilled to share that ReWiND kicks off CoRL as the very first oral talk! 🥳 📅 Sunday, 9AM — don’t miss it! @_abraranwar and I dive deeper into specializing robot policies in our USC RASC blog post (feat. ReWiND + related work): 👉

World models hold a lot of promise for robotics, but they're data hungry and often struggle with long horizons. We learn models from a few (< 10) human demos that enable a robot to plan in completely novel scenes! Our key idea is to model *symbols* not pixels 👇

Introducing Importance Weighted Retrieval, a simple modification to existing retrieval methods! Our importance-sampling-inspired approach helps us retrieve more effectively from prior datasets for few-shot imitation learning! #CoRL2025 Oral w/ Rahul Chand @DorsaSadigh @JoeyHejna

How can non-experts quickly teach robots a variety of tasks? Introducing HAND ✋, a simple, time-efficient method of training robots! Using just a **single hand demo**, HAND learns manipulation tasks in under **4 minutes**! 🧵

https://t.co/z8dr3LNw6W ReWiND: Language-Guided Rewards Teach Robot Policies without New Demonstrations. LLM-generated instructions z + demos → learned progress reward model R(o, z) → optimize the policy via online RL

Absolutely thrilled to be part of this work! I truly enjoyed every moment of the collaboration. Huge thanks to all the amazing collaborators who made it happen! 😄

Reward models that help real robots learn new tasks—no new demos needed! ReWiND uses language-guided rewards to train bimanual arms on OOD tasks in 1 hour! Offline-to-online, lang-conditioned, visual RL on action-chunked transformers. 🧵

Reward learning (as in DYNA) has enabled end-to-end policies to reach a 99% success rate, but (1) generalization to new tasks and (2) sample efficiency remain hard! ReWiND produces better rewards for OOD tasks than SOTA methods like GVL & LIV from @JasonMa2020, which inspired us! 🌐:

Introducing Dynamism v1 (DYNA-1) by @DynaRobotics – the first robot foundation model built for round-the-clock, high-throughput dexterous autonomy. Here is a time-lapse video of our model autonomously folding 850+ napkins in a span of 24 hours with • 99.4% success rate — zero

Excited to release FAST, our new robot action tokenizer! 🤖 Some highlights: - Simple autoregressive VLAs match diffusion VLA performance - Trains up to 5x faster - Works on all robot datasets we tested - First VLAs that work out-of-the-box in new environments! 🧵/
