Yan-Bo Lin

@yblin98

Followers: 126 · Following: 1K · Media: 25 · Statuses: 107

Ph.D. student @unccs working on multimodal learning including audio📣, videos🎬, and language💬.

Chapel Hill, NC

Joined August 2022

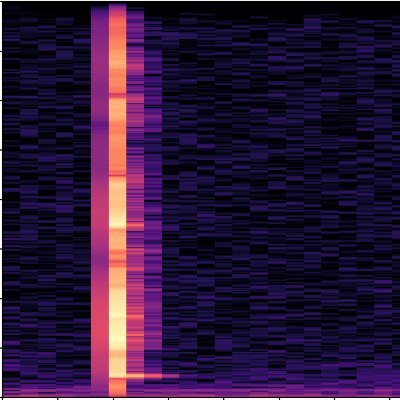

🔊 Check out our preprint, AVSiam 🤝. We use a single shared ViT to process both audio and visual inputs, which improves parameter efficiency ⚡, reduces the GPU memory footprint 💻, and allows us to scale 📈 to larger datasets and model sizes. With @gberta227. 👇

arxiv.org

Traditional audio-visual methods rely on independent audio and visual backbones, which is costly and not scalable. In this work, we investigate using an audio-visual siamese network (AVSiam) for...
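A minimal sketch of the weight-sharing idea described above, assuming a hypothetical toy one-layer encoder (`ToyEncoder`) in place of a real ViT. It only illustrates generic siamese weight sharing and is not AVSiam's actual architecture or code. Because one set of weights encodes both modalities, the shared backbone has half the parameters of two independent backbones of the same size.

```python
import random


class ToyEncoder:
    """Hypothetical stand-in for a ViT backbone: a single linear projection.

    A real ViT has patch embedding, attention, and MLP blocks; this toy keeps
    only one weight matrix so the parameter counting stays obvious.
    """

    def __init__(self, dim_in: int, dim_out: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.weight = [[rng.uniform(-0.1, 0.1) for _ in range(dim_in)]
                       for _ in range(dim_out)]

    def num_params(self) -> int:
        return sum(len(row) for row in self.weight)

    def __call__(self, tokens):
        # Project every input token (a list of floats) with the same weights.
        return [[sum(w * x for w, x in zip(row, tok)) for row in self.weight]
                for tok in tokens]


def make_tokens(n, dim, seed):
    # Random placeholder tokens; real inputs would be embedded patches.
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n)]


DIM_IN, DIM_OUT = 16, 8

# Independent backbones: one encoder per modality (the costly baseline).
audio_encoder = ToyEncoder(DIM_IN, DIM_OUT, seed=1)
visual_encoder = ToyEncoder(DIM_IN, DIM_OUT, seed=2)
independent_params = audio_encoder.num_params() + visual_encoder.num_params()

# Shared (siamese) backbone: one encoder reused for both modalities.
shared_encoder = ToyEncoder(DIM_IN, DIM_OUT, seed=3)
shared_params = shared_encoder.num_params()

# Assumption: audio spectrogram patches and video frame patches have already
# been flattened to the same token dimension so one encoder can take both.
audio_tokens = make_tokens(4, DIM_IN, seed=10)
visual_tokens = make_tokens(6, DIM_IN, seed=11)
audio_out = shared_encoder(audio_tokens)
visual_out = shared_encoder(visual_tokens)

print(independent_params, shared_params)  # 256 128
```

The toy only shows the parameter-count side of the argument; memory and scaling behavior of a real shared ViT depend on details the tweet does not give.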

RT @hanlin_hl: Thanks AK for sharing our work! For readers interested in our work, please check our project page:

RT @shoubin621: New paper Alert 🚨 Introducing MEXA: A general and training-free multimodal reasoning framework via dynamic multi-expert ski…

RT @gberta227: I will be presenting more details on SiLVR at the LOVE: Multimodal Video Agent workshop at 12:15pm CST in Room 105A!

RT @CMHungSteven: @CVPR is around the corner!! Join us at the Workshop on T4V at #CVPR2025 with a great speaker lineup (@MikeShou1, @jw2yan…

RT @Han_Yi_724: 🚀 Introducing ExAct: A Video-Language Benchmark for Expert Action Analysis. 🎥 3,521 expert-curated video QA pairs in 6 domai…

RT @gberta227: Check out our recent paper, which presents a new framework for complex video reasoning tasks. A straightforward and intuitiv…

Check out our recent work. We leverage the strong reasoning capabilities of LLMs for complex vision-language tasks. The code is easy to adapt for newer model versions.

Recent advances in test-time optimization have led to remarkable reasoning capabilities in LLMs. However, the reasoning capabilities of MLLMs still significantly lag, especially for complex video-language tasks. We present SiLVR, a Simple Language-based Video Reasoning framework.

RT @CMHungSteven: The 4th Workshop on Transformers for Vision (T4V) at CVPR 2025 is soliciting self-nominations for reviewers. If you're in…

RT @gberta227: For those of you who know me, I've always been very excited to combine my two passions for basketball and CV. Our #CVPR2025…

sites.google.com

We present BASKET, a large-scale basketball video dataset for fine-grained skill estimation. BASKET contains more than 4,400 hours of video capturing 32,232 basketball players from all over the...

RT @yilin_sung: 🚀 New Paper: RSQ: Learning from Important Tokens Leads to Better Quantized LLMs. We show that not all tokens should be trea…

RT @yilin_sung: I'm on the industry job market! If you're hiring, let's connect (I will attend #NeurIPS2024 in person too)! I'm excited to…

RT @jmin__cho: 🚨 I’m on the 2024-2025 academic job market! I work on ✨ Multimodal AI ✨, with a special focus on en…

RT @cyjustinchen: 🚨 Reverse Thinking Makes LLMs Stronger Reasoners. We can often reason from a problem to a solution and also in reverse to…

RT @andrewhowens: We generate a soundtrack for a silent video, given a text prompt! For example, we can make a cat's meow sound like a lion…

RT @ZunWang919: 🚨Thrilled to share my first PhD project: DreamRunner✨: Fine-Grained Storytelling Video Generation with Retrieval-Augmented…

RT @jmin__cho: Modern T2V generation models still make simple mistakes, such as generating the incorrect number of objects or semantically…

RT @SenguptRoni: SOTA virtual face re-aging techniques often struggle to preserve identity for large age changes. We present MyTimeMachine,…

RT @jmin__cho: Check out M3DocRAG -- multimodal RAG for question answering on Multi-Modal & Multi-Page & Multi-Documents (+ a new open-doma…

RT @hanlin_hl: Glad to share our new preprint from my Meta @AIatMeta internship and @uncnlp collaboration: VEDiT: Latent Prediction Arc…
