Ce Zhang

@cezhhh

Followers: 94 · Following: 41 · Media: 16 · Statuses: 30

CS PhD student at UNC Chapel Hill.

Chapel Hill, NC

Joined September 2023

Work done with amazing collaborators: @yblin98 @ZiyangW00 @mohitban47 @gberta227. Paper: Project Page: Code:

github.com

Official Implementation for "SiLVR: A Simple Language-based Video Reasoning Framework" - CeeZh/SILVR

RT @YuluPan_00: 🚨 New #CVPR2025 Paper 🚨 🏀BASKET: A Large-Scale Dataset for Fine-Grained Basketball Skill Estimation 🎥 4,477 hours of videos…

Excited to share that LLoVi has been accepted to #EMNLP2024. We will present our work in poster session 12 on Nov. 14 (Thu.), 14:00-15:30 ET. Happy to chat! Check out our paper at: Code: Website:

sites.google.com

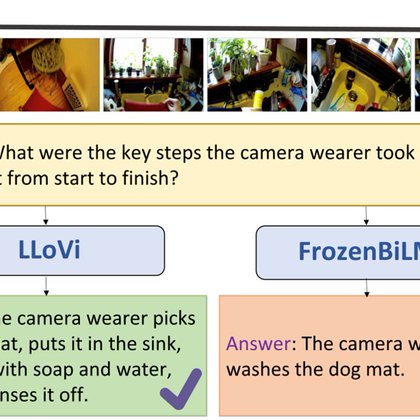

Motivation

First, LLoVi uses a short-term visual captioner to generate textual descriptions of short video clips (0.5-8s in length) densely sampled from a long input video. Afterward, an LLM aggregates the short-term captions to perform long-range temporal reasoning.
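The two-stage design described above (caption short clips, then let an LLM reason over the captions) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the official code in CeeZh/LLoVi: `caption_clip` and `query_llm` are hypothetical stand-ins for a real short-term captioner and a real LLM, clips are assumed to be consecutive and non-overlapping, and the prompt format is invented for the example. Since the captioner works on 0.5-8s clips, `clip_len_s` would be chosen in that range.

```python
import math
from typing import Callable, List, Tuple

Clip = Tuple[float, float]  # (start_s, end_s)


def sample_clips(duration_s: float, clip_len_s: float = 1.0) -> List[Clip]:
    """Densely split [0, duration_s] into consecutive, non-overlapping clips.

    Assumption for this sketch: no overlap or stride; the last clip may be
    shorter than clip_len_s.
    """
    if clip_len_s <= 0:
        raise ValueError("clip_len_s must be positive")
    n = math.ceil(duration_s / clip_len_s)
    return [(i * clip_len_s, min((i + 1) * clip_len_s, duration_s)) for i in range(n)]


def build_prompt(captions: List[Tuple[Clip, str]], question: str) -> str:
    """Concatenate timestamped short-term captions into one prompt (hypothetical format)."""
    lines = [f"[{s:.1f}s-{e:.1f}s] {text}" for (s, e), text in captions]
    return "Clip captions:\n" + "\n".join(lines) + f"\n\nQuestion: {question}\nAnswer:"


def answer_long_video_question(
    duration_s: float,
    question: str,
    caption_clip: Callable[[float, float], str],  # hypothetical short-term captioner
    query_llm: Callable[[str], str],              # hypothetical LLM call
    clip_len_s: float = 1.0,
) -> str:
    # Stage 1: caption every short clip independently.
    captions = [((s, e), caption_clip(s, e)) for s, e in sample_clips(duration_s, clip_len_s)]
    # Stage 2: a single LLM call aggregates all captions for long-range reasoning.
    return query_llm(build_prompt(captions, question))


if __name__ == "__main__":
    # Toy stubs so the sketch runs without any model weights.
    def demo_captioner(start_s: float, end_s: float) -> str:
        return f"a person stirs a pot ({start_s:.0f}s)"

    def echo_llm(prompt: str) -> str:
        return prompt  # echo the prompt to show what the LLM would receive

    print(answer_long_video_question(3.0, "What is the person doing?", demo_captioner, echo_llm))
```

With the echo stub, the printed prompt contains three timestamped captions followed by the question. Keeping both stages as plain callables is only a design choice for the sketch: either the captioner or the LLM can be swapped without touching the other.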

RT @KevinZ8866: (0/7) #ICLR2024 How could LLM benefit video action forecasting? Excited to share our ICLR 2024 paper: AntGPT: Can Large Lan…

RT @gberta227: The 3rd Transformers for Vision workshop will be back at #CVPR2024! We have a great speaker lineup covering diverse Transfor…

RT @gberta227: The code is now publicly available at .

github.com

Official implementation for "A Simple LLM Framework for Long-Range Video Question-Answering" - CeeZh/LLoVi

Work done with amazing collaborators: @lu_taixi @mmiemon @ZiyangW23972334 @shoubin621 @mohitban47 @gberta227.