Satpreet Singh

@tweetsatpreet

3K Followers · 18K Following · 61 Media · 2K Statuses

AI x Neuro/Bio Postdoc @harvardmed @KempnerInst; PhD @UW; Ex @Meta @LinkedIn.

🌎

Joined October 2010

1/n Excited to share our new preprint, where we study turbulent plume tracking using RNN *agents* trained with deep reinforcement learning (DRL), and find many intriguing similarities with flying insects. w/ @FlorisBreugel @RajeshPNRao @bingbrunton; #tweeprint @flypapers #Drosophila

More on collective behavior: our new Annual Review of Biophysics piece, with the stellar Danielle Chase, explores how animals sense, share information, and make group decisions, in honeybees and beyond 🐝 https://t.co/UcuG35gUu5

Coming March 17, 2026! Just got my advance copy of Emergence — a memoir about growing up in group homes and somehow ending up in neuroscience and AI. It’s personal, it’s scientific, and it’s been a wild thing to write. Grateful and excited to share it soon.

#KempnerInstitute research fellow @t_andy_keller and coauthors Yue Song @wellingmax and Nicu Sebe have a new book out that introduces a framework for developing equivariant #AI & #neuroscience models. Read more: https://t.co/fc92JnImeD

#NeuroAI

kempnerinstitute.harvard.edu

Humans have a powerful ability to recognize patterns in a dynamic, ever-changing world, allowing for problem-solving and other cognitive abilities that are the hallmark of intelligent behavior. Yet...

I promised I’d make a thread about it, so here goes: Are you interested in horses? Musculoskeletal modelling? Predictive simulations of quadrupedal gaits? Then this paper is for you! This made the cover of @ICB_journal! With @tgeijten @Anneschulp Ineke Smit & Karl Bates

If you like scaling laws in AI, you’ll love scaling laws in biology, like the allometric scaling of energy production density as a power law with exponent -1/4 across hundreds of millions of years of evolution. There’s also a new, similar scaling law for sleep, connecting it to metabolism!

As you know, I'm obsessed with power laws in biology, which are biological consequences of fundamental principles like energy conservation from the first law of thermodynamics. Geoffrey West showed how highly optimized biological networks, think blood vessels or respiratory
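A short worked version of the -1/4 exponent, as a sketch: assuming "energy production density" here means metabolic rate per unit body mass, it follows from Kleiber's law, which relates whole-body metabolic rate $B$ to body mass $M$ with a 3/4 exponent ($B_0$ is a normalization constant):

$$
B = B_0\, M^{3/4} \quad\Longrightarrow\quad \frac{B}{M} = B_0\, M^{-1/4}
$$

For example, a 10,000-fold increase in body mass lowers energy production per unit mass by a factor of $(10^4)^{-1/4} = 10^{-1}$, i.e. to one tenth.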

Why do video models handle motion so poorly? It might be lack of motion equivariance. Very excited to introduce: Flow Equivariant RNNs (FERNNs), the first sequence models to respect symmetries over time. Paper: https://t.co/dkk43PyQe3 Blog: https://t.co/I1gpam1OL8 1/🧵

NeurIPS is pleased to officially endorse EurIPS, an independently-organized meeting taking place in Copenhagen this year, which will offer researchers an opportunity to additionally present their accepted NeurIPS work in Europe, concurrently with NeurIPS. Read more in our blog

Announcing AI for Science at @NeurIPSConf 2025! Join us in discussing the reach and limits of AI for scientific discovery 🚀 📍 Workshop submission deadline: Aug 22 💡 Dataset proposal competition: more details coming soon! ✨ Amazing lineup of speakers and panelists

New Book & Video Series!!! (late 2025) Optimization Bootcamp: Applications in Machine Learning, Control, and Inverse Problems. Comment for a sneak peek to help proofread and I'll DM you (proofreading, typos, HW problems: all get acknowledgment in the book!)

At ICML for the next 2 days to present multiple works. If you're into interpretability, complexity, or just wanna know how cool @KempnerInst is, hit me up 👋

Excited to share a new project spanning cognitive science and AI, where we develop a novel deep reinforcement learning model, Multitask Preplay, that explains how people generalize to new tasks that were previously accessible but unpursued.

Everyone knows action chunking is great for imitation learning. It turns out that we can extend its success to RL to better leverage prior data for improved exploration and online sample efficiency! https://t.co/J5LdRRYbSH The recipe to achieve this is incredibly simple. 🧵 1/N

(1/7) New preprint from the Rajan Lab! 🧠🤖 @RyanPaulBadman1 & @SimmonsEdler show (through cog sci, neuro & ethology) how an AI agent with fewer ‘neurons’ than an insect can forage, find safety & dodge predators in a virtual world. Here's what we did. Paper: https://t.co/DvRKjERrGl

🚨 Registration is live! 🚨 The New England Mechanistic Interpretability (NEMI) Workshop is happening August 22nd 2025 at Northeastern University! A chance for the mech interp community to nerd out on how models really work 🧠🤖 🌐 Info: https://t.co/mXjaMM12iv 📝 Register:

As AI agents face increasingly long and complex tasks, decomposing them into subtasks becomes ever more appealing. But how do we discover such temporal structure? Hierarchical RL provides a natural formalism, yet many questions remain open. Here's our overview of the field 🧵

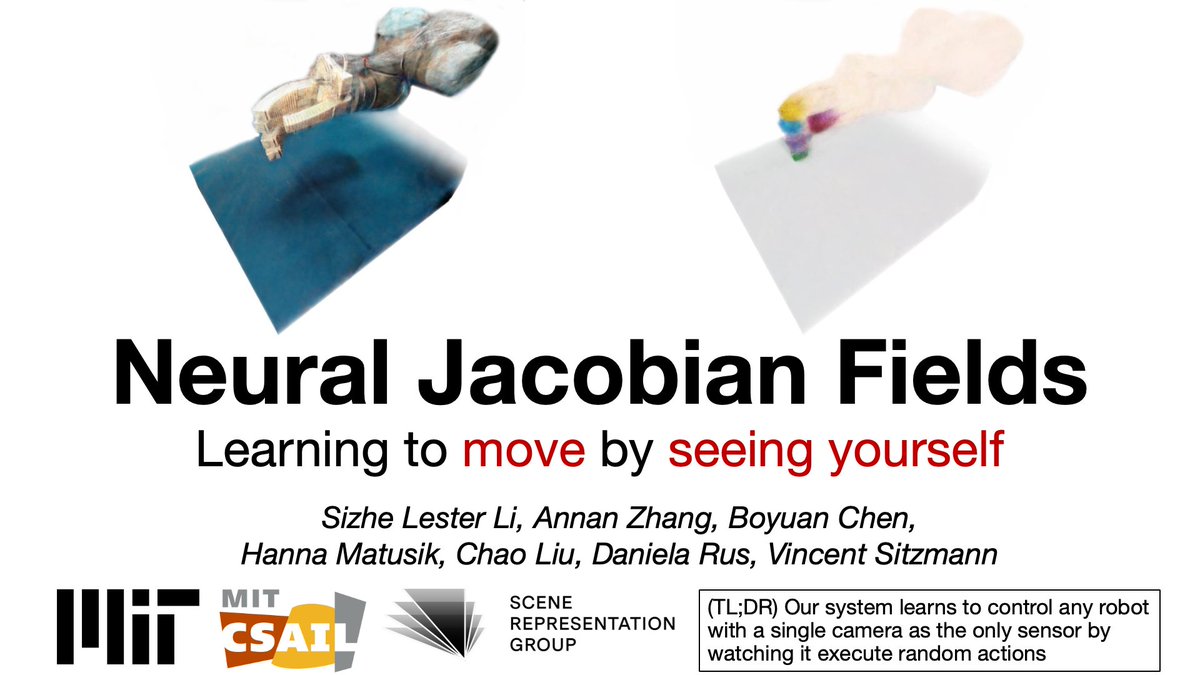

Now in Nature! 🚀 Our method learns a controllable 3D model of any robot from vision, enabling single-camera closed-loop control at test time! This includes soft, bio-inspired, and previously uncontrollable robots, potentially lowering the barrier to entry for automation! Paper:

This collab was one of the most beautiful papers I've ever worked on! The amount I learned from @danielwurgaft was insane and you should follow him to inherit some gems too :D

🚨New paper! We know models learn distinct in-context learning strategies, but *why*? Why generalize instead of memorize to lower loss? And why is generalization transient? Our work explains this & *predicts Transformer behavior throughout training* without its weights! 🧵 1/

Humans and animals can rapidly learn in new environments. What computations support this? We study the mechanisms of in-context reinforcement learning in transformers, and propose how episodic memory can support rapid learning. Work w/ @KanakaRajanPhD:

arxiv.org

Humans and animals show remarkable learning efficiency, adapting to new environments with minimal experience. This capability is not well captured by standard reinforcement learning algorithms...
