ruchowdh.bsky.social

@ruchowdh

Followers: 41K

Following: 36K

Media: 1K

Statuses: 21K

find me at https://t.co/hrk5quIFJI

Hardcore eng central

Joined March 2016

On the heels of OpenAI's letter on safety, we need a global governance body for generative AI systems. Here are my thoughts on how we can build one. https://t.co/FDMpv1zzFl

wired.com

As ChatGPT and its ilk continue to spread, countries need an independent board to hold AI companies accountable and limit harms.

Do you remember when you joined Twitter? I do! Twitter used to be a place where we safely convened, shared research and met people. Now it’s a cesspool of scams, porn bots, and propaganda. #MyXAnniversary

#fuckelon

I'm deeply disappointed in the unwillingness of the Labour Party to condemn the Israeli government's actions in Gaza. The medics killed... the teachers killed... the UN workers killed... the 724 children killed - none of these people are Hamas. Do we really condone this?

The UK Prime Minister has caught the Existential Fatalistic Risk from AI Delusion disease (EFRAID). Let's hope he doesn't give it to other heads of state before they get the vaccine. "An AI safety summit at Bletchley Park in November is expected to focus almost entirely on

The computational biology people got here in 2010. With PCA. Not even deep learning. Are we to conclude that principal components analysis has an internal world model? https://t.co/BW8z74kiMe

Do language models have an internal world model? A sense of time? At multiple spatiotemporal scales? In a new paper with @tegmark we provide evidence that they do by finding a literal map of the world inside the activations of Llama-2!

By focusing on bias within technology, we risk setting the success criteria as accurate tools of oppression, whereas we should be asking "do we want these tools of oppression in the first place?" Great to discuss this w/ @ruchowdh @UNTechEnvoy & @AllTechIsHuman in NYC last week.

#NewYork has fantastic things. Like spending the afternoon on the #highline filming a documentary with @ruchowdh and the evening at #betaworks talking about #ResponsibleAI

0

1

6

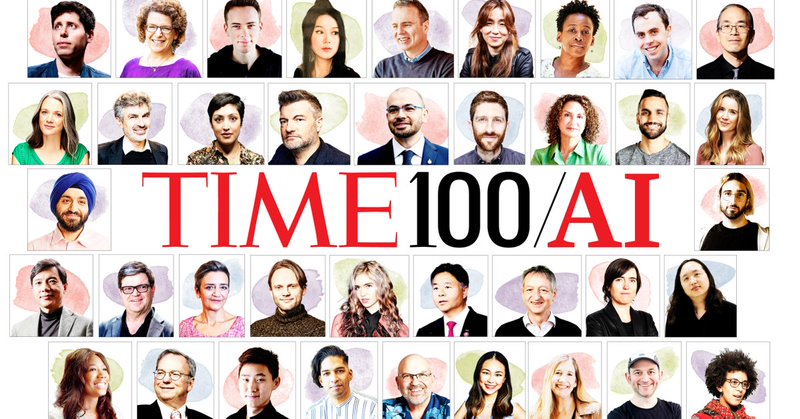

Congratulations to two UC San Diego alumni who landed a spot on @TIME's The 100 Most Influential People in #AI 2023 list! 👏 @ruchowdh received a doctorate in political science, and @alondra received an undergraduate degree in anthropology. 🔱 https://t.co/PDMhiTtFAu

#TIME100AI

time.com

Here’s who made the 2023 TIME100 AI list of the most influential people in artificial intelligence.

Open foundation models are playing a key role in the AI ecosystem. On September 21, @RishiBommasani @percyliang @random_walker and I are organizing an online workshop on their responsible development. Hear from experts in CS, tech policy, and OSS. RSVP: https://t.co/T10EgJOGDd

There are some stories that you know will stay with you for a very long time, and this is one of them. Had the honor of profiling these five women who have been warning about AI risks before you'd ever heard of ChatGPT. My latest for @RollingStone

rollingstone.com

Rumman Chowdhury, Timnit Gebru, Safiya Noble, Seeta Peña Gangadharan, and Joy Buolamwini open up about their artificial intelligence fears

If tech companies are going to release cars without seatbelts, then at least we need to know when & how they crash. Kudos to @ruchowdh for doing crash-testing at Defcon this week. https://t.co/rRejiXFZOE

washingtonpost.com

Tech firms know their chatbots can be biased, deceptive or even dangerous. They're paying hackers to figure out exactly how.

"Any governance body like this needs to be independent. Companies should be engaged, but it should not be beholden to companies." @BKCHarvard Responsible AI Fellow Dr. Rumman Chowdhury (@ruchowdh) spoke to @marketplace about ideal AI regulation https://t.co/oAOwJ7F8Fo

marketplace.org

Leaders of the biggest artificial intelligence companies are forming advisory groups and making public commitments to develop the technology safely and securely. Harvard’s Rumman Chowdhury says the...

So my big presentation that's coming up is... @TEDTalks! I'm super stoked to be speaking at the TEDWomen conference happening in October in Atlanta 💃 I'll be talking about all things AI ethics, and why we should care about its current harms and not its future (X-)risks.

We (@cohere) are participating in the DEFCON LLM Red Team challenge in 2 weeks! It's spearheaded by @ruchowdh & @comathematician 🔥. Our 3 goals: 1. raise awareness of *real* risks of LLMs now 2. make our own model safer 3. eventually release the dataset to researchers! COME HACK US.

This 1979 (!) commentary on the perils of using the term 'emergent property' in ecology rings incredibly true even 40 years later: people keep using the term with regard to LLMs, each with their own intuitive definition... confusing the hell out of everyone involved 😐

Billy was the first to talk about underpaid data cleaning behind GenAI models (a story others are just now picking up). I love that he’s now focused on people solving the problem.

The workers behind AI rarely reap its rewards. One nonprofit in India is trying to fix that. After years of reporting on the darker side of the AI industry, I traveled to India with one question. Could its alternative model really work? https://t.co/WzLO0qfpKW

Gig workers who make AI more reliable and less biased are often subjected to "unfair" working conditions, a new Oxford report says. 0 out of the 15 platforms @TowardsFairWork surveyed met even a "bare minimum" threshold of workplace fairness: https://t.co/FY8J067yqD

time.com

The human gig workers behind AI are often subjected to "unfair" working conditions, a new Oxford report says

It blows my mind that the weather has been so unhinged in so many places this summer and yet we're still only discussing the existential risks of AI, and not climate change 🫠

I support the White House commitments - but I was dismayed to see all of the media focus on for-profit companies as “external” actors when discussing commitments to red teaming. It’s good to have a startup ecosystem, but we can’t assume the market solves this problem.
