Rishabh Tiwari

@rish2k1

Followers

810

Following

144

Media

14

Statuses

65

RS Intern @Meta | CS PhD @UCBerkeley | Ex-@GoogleAI | Research area: Efficient and robust AI systems

Berkeley, CA

Joined May 2019

There is so much noise in the LLM RL space, so we sat down and ran everything at scale (so you don't have to 😜) and are presenting to you “The Art of Scaling RL”. Give this a read before starting your next RL run. Led by amazing @Devvrit_Khatri @lovish

Wish to build scaling laws for RL but not sure how to scale? Or what scales? Or would RL even scale predictably? We introduce: The Art of Scaling Reinforcement Learning Compute for LLMs

3

19

220

Excited to share one of the first projects from my PhD! We find that Adam (often seen as approximate second-order) can actually outperform Gauss-Newton (true second-order) in certain cases! Our 2x2 comparison across basis choice and gradient noise is revealing! Thread by Sham:

(1/9) Diagonal preconditioners such as Adam typically use empirical gradient information rather than true second-order curvature. Is this merely a computational compromise or can it be advantageous? Our work confirms the latter: Adam can outperform Gauss-Newton in certain cases.

2

13

110

Great to see our algorithmic work validated at scale! CISPO started as a stability fix during our MiniMax-01 training, an answer to spiky gradients and train-inference discrepancies. Seeing it become a core component of ScaleRL in The Art of Scaling Reinforcement Learning

Meta just dropped this paper that spills the secret sauce of reinforcement learning (RL) on LLMs. It lays out an RL recipe, uses 400,000 GPU hrs and posits a scaling law for performance with more compute in RL, like the classic pretraining scaling laws. Must read for AI nerds.

3

8

88

(1/9) Diagonal preconditioners such as Adam typically use empirical gradient information rather than true second-order curvature. Is this merely a computational compromise or can it be advantageous? Our work confirms the latter: Adam can outperform Gauss-Newton in certain cases.

2

18

129

Huge thanks to Devvrit Khatri for coming on the Delta Podcast! Check out the podcast episode here: https://t.co/wmsDjqFbPn

2

2

7

This work provides many deep insights into scaling RL for LLMs! Congratulations @Devvrit_Khatri @louvishh and all the coauthors. Also amazing to see so many close friends and collaborators, including four of our former predocs/RF write this nice paper.

Wish to build scaling laws for RL but not sure how to scale? Or what scales? Or would RL even scale predictably? We introduce: The Art of Scaling Reinforcement Learning Compute for LLMs

0

4

56

*checks chatgpt* This paper costs ~4.2 million USD (400K GB200 hours) -- science! Our most expensive run used 100K GPU hours (the same amount as DeepSeek-R1-Zero, but on GB200s). One finding here was that once we have a scalable RL algorithm, RL compute scaling becomes predictable

Wish to build scaling laws for RL but not sure how to scale? Or what scales? Or would RL even scale predictably? We introduce: The Art of Scaling Reinforcement Learning Compute for LLMs

19

71

832

🚨 New Paper: The Art of Scaling Reinforcement Learning Compute for LLMs 🚨 We burnt a lot of GPU-hours to provide the community with the first open, large-scale systematic study on RL scaling for LLMs. https://t.co/49REQZ4R6G

Wish to build scaling laws for RL but not sure how to scale? Or what scales? Or would RL even scale predictably? We introduce: The Art of Scaling Reinforcement Learning Compute for LLMs

2

17

76

Sneak peek from a paper about scaling RL compute for LLMs: probably the most compute-expensive paper I've worked on, but hoping that others can run experiments cheaply for the science of scaling RL. Coincidentally, this is similar motivation to what we had for the NeurIPS best

11

37

417

Feel like I'm taking crazy pills. We are just back at step one. Don’t store KV cache, just recompute it.

Can we break the memory wall for LLM inference via KV cache rematerialization? 🚨 Introducing XQuant, which leverages underutilized compute units to eliminate the memory bottleneck for LLM inference! • 10–12.5x memory savings vs. FP16 • Near-zero accuracy loss • Beats

29

23

538

Can we break the memory wall for LLM inference via KV cache rematerialization? 🚨 Introducing XQuant, which leverages underutilized compute units to eliminate the memory bottleneck for LLM inference! • 10–12.5x memory savings vs. FP16 • Near-zero accuracy loss • Beats

26

92

666

Heading to @COLM_conf in Montreal? So is @WiMLworkshop! 🎉 We are organizing our first ever event at #CoLM2025 and we want you to choose the format! What excites you the most? Have a different idea? Let us know in the replies! 👇 RT to spread the word! ⏩

1

9

37

How does prompt optimization compare to RL algos like GRPO? GRPO needs 1000s of rollouts, but humans can learn from a few trials—by reflecting on what worked & what didn't. Meet GEPA: a reflective prompt optimizer that can outperform GRPO by up to 20% with 35x fewer rollouts!🧵

46

167

1K

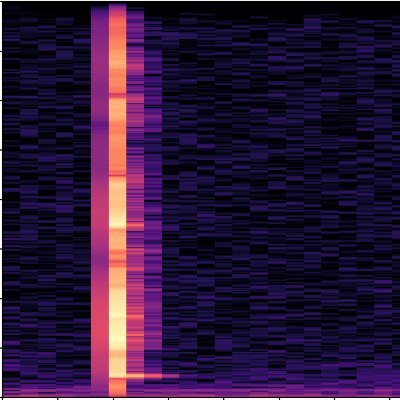

Meows, music, murmurs and more! We train a general purpose audio encoder and open source the code, checkpoints and evaluation toolkit.

Shikhar Bharadwaj, Samuele Cornell, Kwanghee Choi, Satoru Fukayama, Hye-jin Shim, Soham Deshmukh, Shinji Watanabe, "OpenBEATs: A Fully Open-Source General-Purpose Audio Encoder,"

0

15

35

If you are at ICML this year, make sure to catch @rish2k1 at the Efficient Systems for Foundation Models Workshop in East Exhibition Hall A to learn more about our work on accelerating test-time scaling methods to achieve better latency/accuracy tradeoffs!

At es-fomo workshop, talk to @rish2k1 about scaling test-time compute as a function of user-facing latency (instead of FLOPS)

0

3

21

At es-fomo workshop, talk to @rish2k1 about scaling test-time compute as a function of user-facing latency (instead of FLOPS)

[Sat Jul 19] @Nived_Rajaraman & @rish2k1 present work on improving accuracy-latency tradeoffs for test-time scaling. @gh_aminian presents work showing that a smoothened version of best-of-n gives improved reward vs KL tradeoffs when a low-quality proxy reward is used.

1

3

24

🚨Come check out our poster at #ICML2025! QuantSpec: Self-Speculative Decoding with Hierarchical Quantized KV Cache 📍 East Exhibition Hall A-B — #E-2608 🗓️ Poster Session 5 | Thu, Jul 17 | 🕓 11:00 AM –1:30 PM TLDR: Use a quantized version of the same model as its own draft

🚀 Fast and accurate Speculative Decoding for Long Context? 🔎Problem: 🔹Standard speculative decoding struggles with long-context generation, as current draft models are pretty weak for long context 🔹Finding the right draft model is tricky, as compatibility varies across

0

7

37

Really interesting work by my friend @harman26singh on making reward models more robust, effectively reducing reliance on spurious attributes

🚨 New @GoogleDeepMind paper 𝐑𝐨𝐛𝐮𝐬𝐭 𝐑𝐞𝐰𝐚𝐫𝐝 𝐌𝐨𝐝𝐞𝐥𝐢𝐧𝐠 𝐯𝐢𝐚 𝐂𝐚𝐮𝐬𝐚𝐥 𝐑𝐮𝐛𝐫𝐢𝐜𝐬 📑 👉 https://t.co/oCk5jGNYlj We tackle reward hacking—when RMs latch onto spurious cues (e.g. length, style) instead of true quality. #RLAIF #CausalInference 🧵⬇️

0

1

7

If you had 15min to tell thousands of Berkeley CS/Data/Stats grads what to do with their lives, what would you say? Last Thursday I told them to RUN AT FAILURE. Afterwards, while we were shaking hands & taking selfies, hundreds of them told me that they are excited to go fail. I

18

31

269

Excited to share that our paper QuantSpec has been accepted to #ICML2025! Huge thanks to my collaborators! Paper:

arxiv.org

Large Language Models (LLMs) are increasingly being deployed on edge devices for long-context settings, creating a growing need for fast and efficient long-context inference. In these scenarios,...

🚀 Fast and accurate Speculative Decoding for Long Context? 🔎Problem: 🔹Standard speculative decoding struggles with long-context generation, as current draft models are pretty weak for long context 🔹Finding the right draft model is tricky, as compatibility varies across

0

6

41