Shikhar

@ShikharSSU

Followers

286

Following

3K

Media

11

Statuses

606

Turning noise into…slightly better noise. https://t.co/9gtrEjheT0

Joined April 2020

🚀 Excited to share our new paper, STAB: Speech Tokenizer Assessment Benchmark! 🔥. STAB provides multiple axes to compare speech tokenizers and gain deeper insights into speech tokens. #SpeechProc #SpeechRecognition #SpeechTokenization.

``STAB: Speech Tokenizer Assessment Benchmark,'' Shikhar Vashishth, Harman Singh, Shikhar Bharadwaj, Sriram Ganapathy, Chulayuth Asawaroengchai, Kartik Audhkhasi, Andrew Rosenberg, Ankur Bapna, Bhuvana Ramabhadran,

0

4

11

RT @chenwanch1: Not advertised yet, but we figured out how to do this too. And we release how exactly you can do it 👀. With the right trai….

0

5

0

RT @_akhaliq: Gemini 2.5. Pushing the Frontier with Advanced Reasoning, Multimodality, Long Context, and Next Generation Agentic Capabiliti….

0

26

0

RT @SIGKITTEN: First ever (i think?) cli coding agents battle royale!.6 contestants:.claude-code.anon-kode.codex.opencode.ampcode.gemini. T….

0

705

0

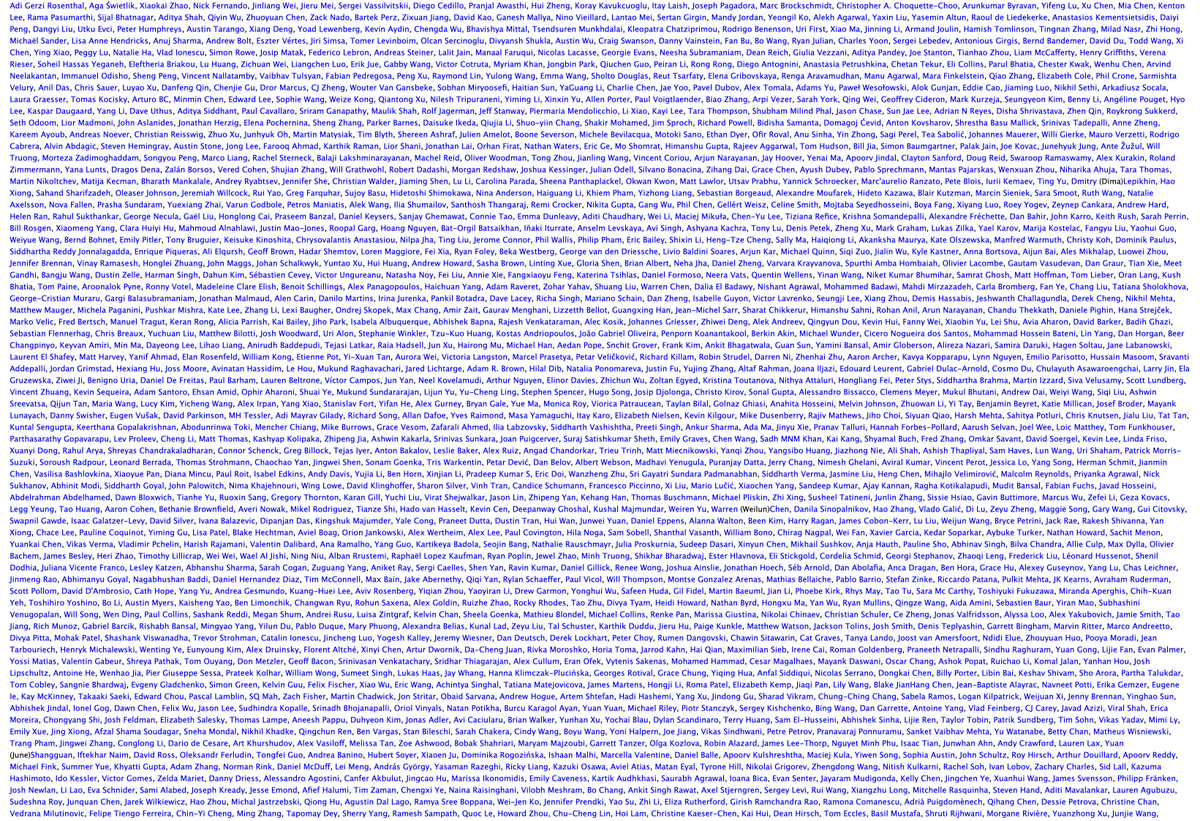

Tired of endless LLM slop?.This work by @Harman26Singh tackles reward hacking to make reward models robust to spurious cues like formatting and length, give it a read.

🚨 New @GoogleDeepMind paper. 𝐑𝐨𝐛𝐮𝐬𝐭 𝐑𝐞𝐰𝐚𝐫𝐝 𝐌𝐨𝐝𝐞𝐥𝐢𝐧𝐠 𝐯𝐢𝐚 𝐂𝐚𝐮𝐬𝐚𝐥 𝐑𝐮𝐛𝐫𝐢𝐜𝐬 📑.👉 We tackle reward hacking—when RMs latch onto spurious cues (e.g. length, style) instead of true quality. #RLAIF #CausalInference. 🧵⬇️

0

2

10

RT @DynamicWebPaige: 🙌✨ You asked, you've got it:. A free and open-source Gemini agent, run via the command line. And to ensure you rarely,….

0

90

0

RT @Sid_Arora_18: New #INTERSPEECH2025, we propose a Chain-of-Thought post-training method to build spoken dialogue systems—generating inte….

0

6

0

RT @shaily99: 🖋️ Curious how writing differs across (research) cultures?.🚩 Tired of “cultural” evals that don't consult people?. We engaged….

0

20

0

RT @mmiagshatoy: 🚀 Happy to share our #INTERSPEECH2025 paper:. Using speaker & acoustic context, we dynamically adjust model paths, resulti….

0

10

0

RT @ArxivSound: ``OmniAudio: Generating Spatial Audio from 360-Degree Video,'' Huadai Liu, Tianyi Luo, Qikai Jiang, Kaicheng Luo, Peiwen Su….

0

3

0

RT @ArxivSound: ``Is MixIT Really Unsuitable for Correlated Sources? Exploring MixIT for Unsupervised Pre-training in Music Source Separati….

0

3

0

RT @ArxivSound: ``Spoken Language Understanding on Unseen Tasks With In-Context Learning,'' Neeraj Agrawal, Sriram Ganapathy, https://t.co/….

0

1

0

RT @ArxivSound: ``Multi-Domain Audio Question Answering Toward Acoustic Content Reasoning in The DCASE 2025 Challenge,'' Chao-Han Huck Yang….

0

1

0

RT @chenwanch1: What happens if you scale Whisper to billions of parameters?. Our #ICML2025 paper develops scaling laws for ASR/ST models,….

0

32

0

RT @lateinteraction: For many empirical CS/AI research problems:. If your project doesn't start with a rather lengthy engineering phase whe��.

0

22

0

RT @shinjiw_at_cmu: 📢 Introducing VERSA: our new open-source toolkit for speech & audio evaluation! . - 80+ metrics in one unified interfa….

0

32

0

RT @mmiagshatoy: Happy to share our #ICLR2025 paper:."Context-Aware Dynamic Pruning for Speech Foundation Models" 🎉. 💡 We introduce context….

0

12

0

RT @ArxivSound: ``On The Landscape of Spoken Language Models: A Comprehensive Survey,'' Siddhant Arora, Kai-Wei Chang, Chung-Ming Chien, Yi….

0

12

0

RT @brianyan918: Multilingual speech recognition systems (e.g. Whisper) are not as good as you may think!. Performance in the lab, where la….

0

7

0

RT @SarvamAI: Heading to ICASSP 2025 in Hyderabad?. Join the Sarvam Mixer - an evening with the ML/AI/Speech community!. We are excited to….

0

11

0