Nicolay Rusnachenko

@nicolayr_

Followers

504

Following

17K

Media

309

Statuses

5K

💼 NLP for Radiology / Healthcare ⚕️ @BU_Research・PhD in NLP・10+ years in Information Retrieval and Software Dev (https://t.co/MsXK0rEMjl)・Opinions are mine

Bournemouth, UK

Joined December 2015

📢 For those who are interested in applying LLMs for inference over iterators of data with CoT / prompts, this update might be relevant. Delighted to share the new release of bulk-chain. It contributes to efficient AI querying for synthetic data generation. 🌟 https://t.co/qkLGFoS2pC 🧵1/n

📢 Sending a massive amount of data in bulk to a remotely accessed LLM 🤖 with Chain-of-Thought (CoT) 🔗 might result in connection loss. This may lead to a Python exception 💥 and make it hard to restore the generated content. Solution: bulk-chain 📦 https://t.co/CVxlJsR7m6 🧵1/n
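
The restoration problem described above can be illustrated with a minimal sketch. It is not bulk-chain's actual API: `resumable_bulk_infer`, `query_llm`, the `id` field, and the SQLite table layout are all hypothetical names chosen for illustration. The idea is to commit each finished row to a local cache, so a rerun after a connection loss skips rows that were already answered.

```python
import json
import sqlite3
from typing import Callable, Dict, Iterable, Iterator, List


def resumable_bulk_infer(
    rows: Iterable[Dict[str, str]],
    prompts: List[str],
    query_llm: Callable[[str], str],
    db_path: str = "cache.sqlite",
) -> Iterator[Dict[str, str]]:
    """Run a chain of prompts per row, caching each finished row in SQLite.

    `query_llm` is a hypothetical callable wrapping a remote model; it may
    raise on connection loss. Rows already stored are not queried again.
    """
    con = sqlite3.connect(db_path)
    con.execute(
        "CREATE TABLE IF NOT EXISTS results (id TEXT PRIMARY KEY, data TEXT)"
    )
    try:
        for row in rows:
            cached = con.execute(
                "SELECT data FROM results WHERE id = ?", (row["id"],)
            ).fetchone()
            if cached is not None:
                # Restored from the cache: no remote call is made.
                yield json.loads(cached[0])
                continue
            record = dict(row)
            for step, template in enumerate(prompts):
                # Each step may reference input fields and earlier answers.
                record[f"step_{step}"] = query_llm(template.format(**record))
            # Commit per row so a later failure cannot lose this result.
            con.execute(
                "INSERT INTO results VALUES (?, ?)",
                (row["id"], json.dumps(record)),
            )
            con.commit()
            yield record
    finally:
        con.close()
```

If `query_llm` raises partway through, calling the function again with the same `db_path` yields the cached rows first and only queries the model for the remaining ones.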

📢 Personal update: The International Conference on Natural Language Processing (ICNLP) is coming in 2026. This will be its 8th edition, and I am joining the technical committee. https://t.co/6sAgxua9pG

🌟 Powered by: 🧑💻 bulk-chain (inference framework): https://t.co/qkLGFoS2pC ↗️ nlp-thirdgate (providers): https://t.co/nY1BRXLsVA 🧵4/n

A hub of third-party providers and tutorials to help you instantly apply various NLP techniques. - nicolay-r/nlp-thirdgate

🔑 We use separate providers, in particular with support for @Replicate. Why it matters among the other tutorials: this setup is easy to extend with other models supported by a particular platform, or to switch to other providers 🧵3/n

With the second part, we are taking a step from supporting streaming of data chunks ➡️ towards supporting inference from third-party providers 🤖 (@OpenRouter, @Replicate, @OpenAI, etc.). 🧵2/n

🚨 The second part of the tutorial series on minimalistic LLM integration in a web environment is here! 🎉 🎬️ https://t.co/dlOkoxKdbp 🧵1/n #tutorial #web #bulkchain #inference #replicate #prompt #js

🌟 Tutorial for Integration into web: https://t.co/rbAtc6zImS 🧵3/n

🔑 This features the no-string framework for querying LLMs in various modes: sync, async, and with optional support for output streaming. 📦️ The latest 1.2.0 release brings updates on outlining API parameters for inference mode. 🧵2/n

🚀 Opportunities in the Mistral Warsaw 🧜♀️ office! We’re hiring AI Scientists 🧑🔬 and Research Engineers🆕to join our team.

Claude Code can now run agents asynchronously. Huge for productivity. You can run many subagents in the background to explore your codebase. Work continues uninterrupted. When subagents complete tasks, they wake up/report to the main agent. Workflows feel faster already!

🤖 To offload work from LLMs in Sentiment Analysis, here are the sub-tasks that can be handled by non-LLM systems: 1. Text Translation: https://t.co/Jwxf31pqVq 2. Named Entity Recognition and Masking: https://t.co/vjefgOcrPm 🧵5/n

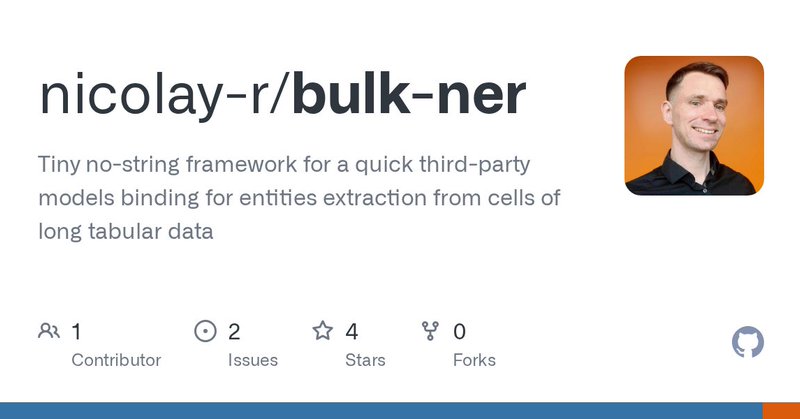

github.com

Tiny no-string framework for a quick third-party models binding for entities extraction from cells of long tabular data - nicolay-r/bulk-ner

📦️ To use LLMs effectively out of the box: 1. Few-shot and Fine-tuning: https://t.co/Y4LwsKCvvv 2. THoR Reasoning: https://t.co/vhnfC3WplB 🧵4/n

🔗 To opt out of LLMs, below is the list of the key resources that were introduced: 1. Conventional Frameworks: https://t.co/CEUnVx4Wup 2. NN and CNN: https://t.co/qj6Kh8me02 3. NN Framework with Att: https://t.co/wXEWKPzMGz 4. Towards Self-Attention:

🔑 Unlike similar previous talks presented in seminars at @WolfsonCollege and @UofGlasgow, this one surveys frameworks across numerous subtasks in sentiment analysis and unites benchmarking on numerous datasets. 📊 Slides: https://t.co/gBWLRDixgQ 🧵2/n

🚨 Can we opt out of LLMs, or should we use them efficiently for Sentiment Analysis? This question is answered with a survey of previously developed frameworks in Sentiment Analysis. It became a lecture for CSC8644 @UniofNewcastle 🎬️ https://t.co/T2ky88psB5 🧵1/n

Banger paper for agent builders. Multi-agent systems often underdeliver. The problem isn't how the agents themselves are built. It's how they're organized. They are mostly built with fixed chains, trees, and graphs that can't adapt as tasks evolve. But what if the system could

🚀 I’m on the job market for full-time roles! My PhD research focuses on AI safety & alignment, spanning safety evaluation (LLMs/MLLMs/agent), agent red-teaming, and LLM post-training (SFT & RL). If you have relevant opportunities, I’d love to connect! Recent selected works:

📣 Our University of Utah’s CS Department is hiring about six new faculty members this year! Application links: All areas: https://t.co/2KJABL2Jew Data Visualization: https://t.co/yHHC3mPygW Computer Vision: https://t.co/uyixPFWdhO We welcome everyone to apply! 😀

Google Gemini Code Wiki generates docs from a GitHub URL. It can create interactive docs, visualize code, and let you chat with the Gemini Chat Agent to understand any public repo. 100% free right now.
