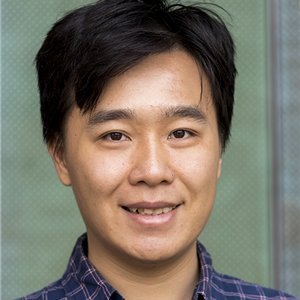

Nan Jiang

@nanjiang_cs

Followers: 10K · Following: 14K · Media: 150 · Statuses: 2K

machine learning researcher, with focus on reinforcement learning. assoc prof @ uiuc cs. Course on RL theory (w/ videos): https://t.co/vqVKwY4RJE

Joined November 2017

Learning Q* with
+ poly-sized exploratory data
+ an arbitrary Q-class that contains Q*
...has seemed impossible for yrs, or so I believed when I talked at @RLtheory 2mo ago. And what's the saying? Impossible is NOTHING https://t.co/5pib5AxUOz Exciting new work w/ @tengyangx! 1/

On one hand, I hope this is just yet another bill put out to attract eyes. On the other hand, with increasing warnings from univs ("don't give talks at Chinese univs or your federal funding may be banned"), part of me is like "just implement that already to give us peace of mind"

the language here is somewhat ambiguous but if it indeed includes a US funding ban on any researcher who supervised a PhD student with Chinese or Iranian citizenship, this is an INSANE bill.

ICLR reviews are out. One of my assigned papers was withdrawn right after reviews were released. This has happened to me several times this year across top conferences. These papers are often poorly written, full of undefined new terms and missing citations, and sometimes

aurora over cornfield (literally) tonight. caveat: colors look way dimmer to the naked eye than what the phone camera captures… when I first saw the green part I thought it was just clouds 😅

Another rabbit hole that haunted me for YEARS and I am glad I finally figured it out! Not directly useful in the project and I spent a good chunk of the last week on it 🫠 In-Sample Moments "Generalize" under Overfitting https://t.co/GWFI7RIhHC

One of the consequences that’s my pet peeve: we say it's an MDP and take state reset for granted, but how ridiculously difficult it is to rigorously reset the state (when the agent only sees pixel obs) can be surprising https://t.co/lH6Pp18Onh

What's the MDP state space? Atari frames? Nope, single Atari frames are not Markovian => for Markovian policies, design choices like frame skipping/stacking & max-pooling were taken. *This means we're dealing with a POMDP!* And these choices matter a ton (see image below)! 4/X
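
The frame skipping, max-pooling, and stacking mentioned above can be sketched as a small wrapper. This is a minimal sketch, assuming a hypothetical environment exposed as `reset_fn()` / `step_fn(action)` that returns `(frame, reward, done)`; the class name `FrameSkipStack`, the `ToyEnv` stand-in, and the defaults `skip=4`, `stack=4` are illustrative assumptions, not values taken from the thread. The point is that the agent's "state" is the stack of pooled frames, a design choice layered on top of a POMDP.

```python
from collections import deque

import numpy as np


class FrameSkipStack:
    """Hypothetical wrapper: repeat each action `skip` times, max-pool the
    last two raw frames, and stack the last `stack` pooled frames."""

    def __init__(self, reset_fn, step_fn, skip=4, stack=4):
        self.reset_fn = reset_fn
        self.step_fn = step_fn
        self.skip = skip
        self.frames = deque(maxlen=stack)

    def reset(self):
        frame = self.reset_fn()
        # Fill the whole stack with copies of the first frame.
        for _ in range(self.frames.maxlen):
            self.frames.append(frame)
        return np.stack(list(self.frames))

    def step(self, action):
        total_reward = 0.0
        last_two = deque(maxlen=2)  # only the final two raw frames get pooled
        done = False
        for _ in range(self.skip):
            frame, reward, done = self.step_fn(action)
            total_reward += reward
            last_two.append(frame)
            if done:
                break
        # Pixel-wise max over the last two raw frames.
        pooled = np.max(np.stack(list(last_two)), axis=0)
        self.frames.append(pooled)
        return np.stack(list(self.frames)), total_reward, done


class ToyEnv:
    """Hypothetical stand-in: the frame at time t is a 2x2 array filled with t."""

    def __init__(self):
        self.t = 0

    def reset(self):
        self.t = 0
        return np.full((2, 2), self.t, dtype=np.float32)

    def step(self, action):
        self.t += 1
        return np.full((2, 2), self.t, dtype=np.float32), 1.0, False


env = ToyEnv()
wrapped = FrameSkipStack(env.reset, env.step)
obs = wrapped.reset()
obs, reward, done = wrapped.step(action=0)
print(obs.shape, reward)  # (4, 2, 2) 4.0
```

Each wrapper step consumes four raw frames, so the observation the agent conditions on already hides intermediate frames; changing `skip` or `stack` changes what "state" means.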

🚨The Formalism-Implementation Gap in RL research🚨 Lots of progress in RL research over the last 10 years, but it's been too performance-driven => overfitting to benchmarks (like the ALE). 1⃣ Let's advance the science of RL 2⃣ Let's be explicit about how benchmarks map to formalism 1/X

got confused by something basic and went down a rabbit hole, so I just wrote a blogpost about it. "Is Density vs. Feature Coverage That Different?" https://t.co/rbHPx2g6rv

asked LLMs and model responses are mixed, and as usual grok (free ver) kept making up plausible-sounding arguments and pissed me off 🙃 if it's indeed a problem, what's the community's perception of the issue? is it widely known and corrected later, or do people just not care?

problem is, if you just draw the random noise in the most straightforward way, the actions observed in the data may not be optimal for the simulated draw, but we assume Bayesian-optimal agents. seems some rejection sampling/importance reweighting is needed...
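
A toy sketch of the rejection-sampling idea, assuming a static discrete-choice agent that picks the argmax of hypothetical mean utilities `u` plus i.i.d. Gumbel noise. This is NOT the Erdem & Keane '96 model (which is dynamic, with Bayesian learning); it only illustrates why unconditioned noise draws can contradict an observed optimal action, and how conditioning on optimality fixes that.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical mean utilities for 3 options; the agent picks argmax(u + eps).
u = np.array([1.0, 0.5, 0.0])
observed_action = 2  # hypothetical low-utility choice seen in the data


def naive_draws(n):
    """Unconditioned noise draws (the 'most straightforward' way)."""
    return rng.gumbel(size=(n, len(u)))


def rejection_draws(n):
    """Keep only noise draws under which the observed action is optimal."""
    kept = []
    while len(kept) < n:
        eps = rng.gumbel(size=len(u))
        if np.argmax(u + eps) == observed_action:
            kept.append(eps)
    return np.array(kept)


naive = naive_draws(10_000)
frac_consistent = np.mean(np.argmax(u + naive, axis=1) == observed_action)
conditioned = rejection_draws(1_000)

print(f"naive draws consistent with the data: {frac_consistent:.2f}")
print("conditioned draws all consistent:",
      bool(np.all(np.argmax(u + conditioned, axis=1) == observed_action)))
```

With Gumbel noise the acceptance rate equals the logit choice probability of the observed action (about 0.19 here), so rejection gets expensive for rarely chosen actions; that is where importance reweighting would be the more practical route.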

Econ ppl: I learned Erdem & Keane '96 and maximum simulated likelihood from my wife (who will teach this in a PhD seminar). my understanding is that when they simulate the distribution of purchasing actions at time t, they just replay previous actions from the data w/o reweighting. that's biased??

is this useful anywhere: a feed-fwd net, when inputs are viewed as weights and weights viewed as inputs, is a recurrent net (is it true)...? I imagine that this could be relevant for those who perturb inputs (adv. robust?).

Reliability from the perspective of content creators who dug into model-generated summaries (e.g., deep research) and fact-checked them with experts. These kinds of reports seem valuable and complementary to what's done in academic/industry research

Excited to announce our NeurIPS ’25 tutorial: Foundations of Imitation Learning: From Language Modeling to Continuous Control With Adam Block & Max Simchowitz (@max_simchowitz)

My favorite thing to do: dive deep into research blogs of people from different labs (Thinking Machines in this case!).

I'm probably one of the very few who still cover PSRs in a course! With some cute matrix multiplication animations in the slides. It has been treated as a very obscure topic, but really it's just low-rank + Hankelness... slides: https://t.co/ZjVTyXEIc2 video:

This week's #PaperILike is "Predictive Representations of State" (Littman et al., 2001). A lesser known classic that is overdue for a revival. Fans of POMDPs will enjoy. PDF:
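
The "low-rank + Hankel" view can be checked numerically. A minimal sketch, assuming a small randomly generated HMM (the sizes, seed, and names like `seq_prob` are arbitrary illustrative choices, not taken from the slides): build H[h, t] = P(h followed by t) over all histories and tests up to length 2. Each entry depends only on the concatenation h·t (the Hankel property) and factors as a row vector for h times a column vector for t, so the rank is at most the number of hidden states.

```python
import itertools

import numpy as np

rng = np.random.default_rng(0)
n_states, n_obs, max_len = 2, 3, 2  # arbitrary sizes for illustration

# Random HMM: initial dist pi, transitions T[i, j] = P(j | i),
# emissions O[i, o] = P(o | i). Each row is drawn from a Dirichlet.
pi = rng.dirichlet(np.ones(n_states))
T = rng.dirichlet(np.ones(n_states), size=n_states)
O = rng.dirichlet(np.ones(n_obs), size=n_states)

# A[o] = diag(O[:, o]) @ T: emit o in the current state, then transition.
A = [np.diag(O[:, o]) @ T for o in range(n_obs)]


def seq_prob(seq):
    """P(o_1, ..., o_k) = pi^T A[o_1] ... A[o_k] 1 (rows of T sum to 1)."""
    v = pi.copy()
    for o in seq:
        v = v @ A[o]
    return v.sum()


# All sequences of length 0..max_len serve as both histories and tests.
seqs = [s for k in range(max_len + 1)
        for s in itertools.product(range(n_obs), repeat=k)]
H = np.array([[seq_prob(h + t) for t in seqs] for h in seqs])

print(H.shape, np.linalg.matrix_rank(H))  # rank is at most n_states
```

Here H is 13x13 but has rank at most 2; a predictive state for history h can be read off as (a normalized version of) a row of this low-rank matrix.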

back to building sim from data: while the job after that is classic RL (as sample-based planning), the entire pipeline is one of offline RL. the current practice of divide-and-conquer is likely naive, and one day we may build sims in an "RL-aware" manner. (3/3)