Myra Deng@NeurIPS

@myra_deng

Followers: 2K · Following: 1K · Media: 11 · Statuses: 128

understanding models to make them better @goodfireAI, prev @stanford, @twosigma

Joined January 2018

At #NeurIPS2025 this week! We’re hiring research engineers and ML engineers @GoodfireAI. Reach out if you’re exploring or just curious to learn more.

Come see some of the things we're cooking up :)

join our next @southpkcommons demo night (12/4) to explore both "in the lab" and on the field. our community is one that blends research and engineering, and this lineup does exactly that:

- @joemelko
- @AshwinRamaswami
- @GoodfireAI
- Lora / Yan (Good Humans)
- Ed Li (Yale)

i'll be at NeurIPS next week with @myra_deng @MarkMBissell @jack_merullo_ @EkdeepL and others from @GoodfireAI. if you want to chat about AI interpretability for scientific discovery, monitoring, or our recent research, fill out this form and we'll get in touch!

what are some good vagueposts for someone just getting into vagueposting?

New paper! Language has rich, multiscale temporal structure, but sparse autoencoders assume features are *static* directions in activations. To address this, we propose Temporal Feature Analysis: a predictive coding protocol that models dynamics in LLM activations! (1/14)

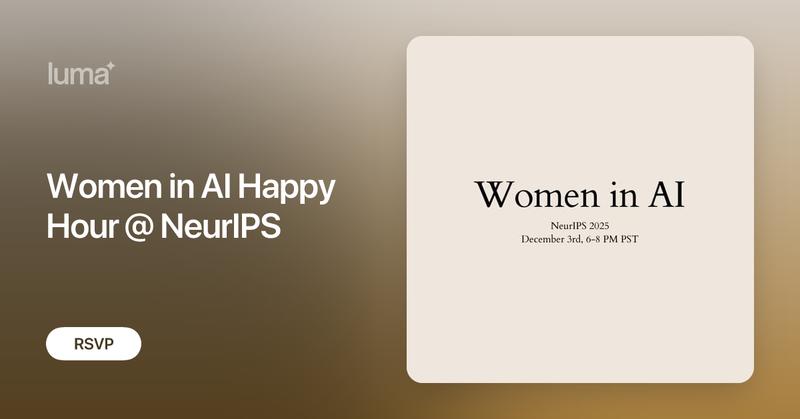

I’m hosting a women in AI happy hour at NeurIPS next month with @GoodfireAI ✨ Come meet other women working on frontier research across industry labs (OpenAI, Inception) and academia (Stanford). DM me if you’re interested in joining, or sign up below.

Understanding why models memorize data is an important step towards a true LLM cognitive core

LLMs memorize a lot of training data, but memorization is poorly understood. Where does it live inside models? How is it stored? How much is it involved in different tasks? @jack_merullo_ & @srihita_raju's new paper examines all of these questions using loss curvature! (1/7)

The way this would have changed my life in school… so many hours banging my head against a wall to figure out dist gpu training. Very character building tho

Today we’re announcing research and teaching grants for Tinker: credits for scholars and students to fine-tune and experiment with open-weight LLMs. Read more and apply at:

⛵Marin 32B Base (mantis) is done training! It is the best open-source base model (beating OLMo 2 32B Base) and it’s even close to the best comparably-sized open-weight base models, Gemma 3 27B PT and Qwen 2.5 32B Base. Ranking across 19 benchmarks:

Using probes to accurately and efficiently detect model behavior (in this case PII leakage) in prod is one of the clear wins for applied interpretability. This is the path to semantic determinism - imagine AI models instrumented with internal probes that recognize when they’re

Why use LLM-as-a-judge when you can get the same performance for 15–500x cheaper? Our new research with @RakutenGroup on PII detection finds that SAE probes:

- transfer from synthetic to real data better than normal probes
- match GPT-5 Mini performance at 1/15 the cost

(1/6)

Solving hallucinations is a bigger deal than people think. Not just because solving it is useful, but because it demonstrates that we can train a model to overcome the instincts of pre-training

We’re actively hiring researchers and MLEs @GoodfireAI to work on building safe, powerful AI systems! We have an incredibly talented, kind, collaborative team that makes work feel like play. DMs open!

Are you a high-agency, early- to mid-career researcher or engineer who wants to work on AI interpretability? We're looking for several Research Fellows and Research Engineering Fellows to start this fall.

The amount of progress we’ve made and will make in AI interpretability research is in large part due to our ability to leverage research agents. Interp research is often compared to biology - it’s a science that requires lab-bench type experiments to understand the intricacies

Agents for experimental research != agents for software development. This is a key lesson we've learned after several months refining agentic workflows! More takeaways on effectively using experimenter agents + a key tool we're open-sourcing to enable them: 🧵

the incentive of the smaller & newer labs is to publish more of their actual research. nice to see from both TML & @GoodfireAI

Efficient training of neural networks is difficult. Our second Connectionism post introduces Modular Manifolds, a theoretical step toward more stable and performant training by co-designing neural net optimizers with manifold constraints on weight matrices.

🚨 Women in AI, this is your must-attend event! Join the @a16z Infra "Women in AI" Happy Hour that I will be co-hosting with @convex sponsored by @Techweek_ on Thursday, October 9th! Connect with fellow founders, builders, researchers, and product leaders from @ssi,

things to be grateful for: morning light in the office 😌

Thrilled to work with Mayo Clinic to build an AI interpretability platform for making bio foundation models useful for patient diagnostics

We're excited to announce a collaboration with @MayoClinic! We're working to improve personalized patient outcomes by extracting richer, more reliable signals from genomic & digital pathology models. That could mean novel biomarkers, personalized diagnostics, & more.
