Michael Hanna

@michaelwhanna

Followers: 599

Following: 371

Media: 27

Statuses: 69

PhD student at the University of Amsterdam / ILLC, interested in computational linguistics and (mechanistic) interpretability. Current Anthropic Fellow.

Berkeley, CA

Joined August 2019

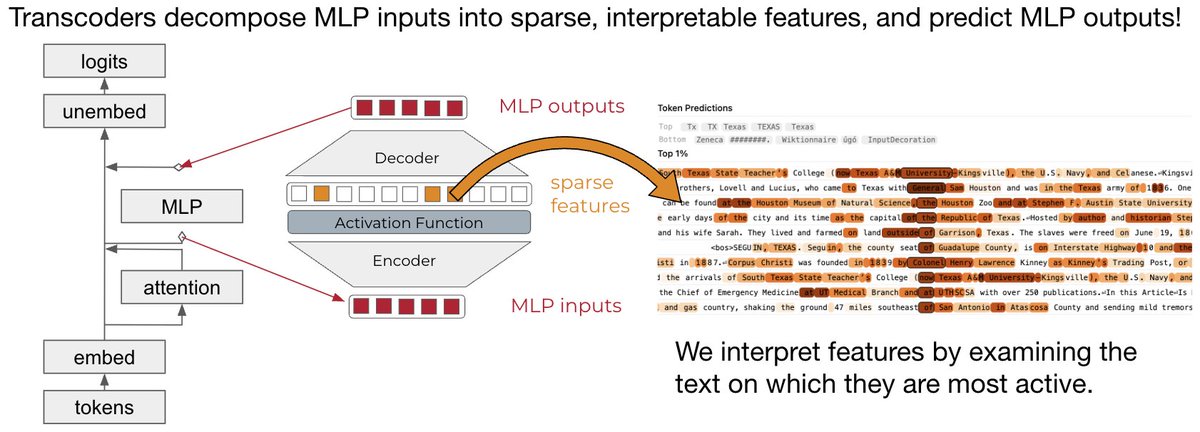

RT @GoodfireAI: New research update! We replicated @AnthropicAI's circuit tracing methods to test if they can recover a known, simple trans…

RT @Jack_W_Lindsey: We’re releasing an open-source library and public interactive interface for tracing the internal “thoughts” of a langua…

RT @mlpowered: The methods we used to trace the thoughts of Claude are now open to the public! Today, we are releasing a library which let…

We’re also excited to see other replications of transcoder circuit-finding work! EleutherAI has been building a library as well, which you can find here:

github.com

Contribute to EleutherAI/attribute development by creating an account on GitHub.

Big thanks as well to @johnnylin and @CurtTigges from @Neuronpedia for hosting graphs + features and running autointerp on the transcoder features, making circuit-finding even easier! Thanks to @adamrpearce too for the awesome frontend for visualizing circuits!

Thanks also to @thebasepoint for your help and to fellow Fellow @andyarditi for pre-release testing! Thanks also to @Anthropic and @EthanJPerez for running the Anthropic Fellows Program - it's been a great environment for doing important safety research.

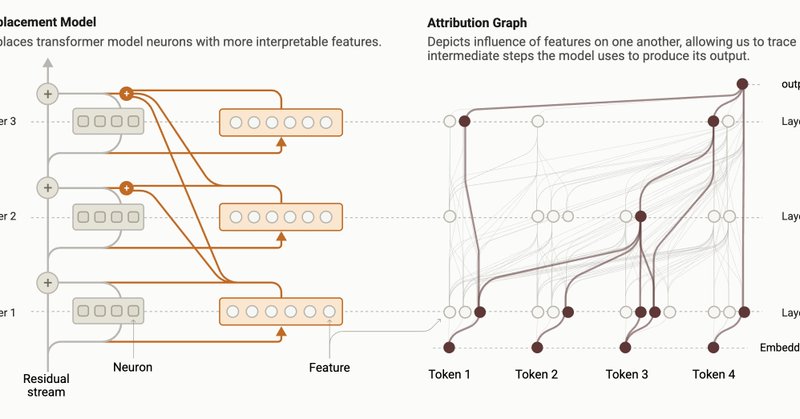

Circuit-tracer uses the attribution method introduced in Anthropic's recent work and was built as part of the Anthropic Fellows Program. Thanks to our mentors, @mlpowered and @Jack_W_Lindsey, for making this possible!

transformer-circuits.pub

We describe an approach to tracing the “step-by-step” computation involved when a model responds to a single prompt.

For now, circuit-tracer supports Gemma 2 (2B) and Llama 3.2 (1B), and uses single-layer transcoders, but is extensible to other models and transcoder architectures! Try it out on GitHub or in Colab (click the badge!):

github.com

Contribute to safety-research/circuit-tracer development by creating an account on GitHub.

RT @AnthropicAI: Our interpretability team recently released research that traced the thoughts of a large language model. Now we’re open-s…

I'll be presenting this in person at @naaclmeeting, tomorrow at 11am in Ballroom C! Come on by - I'd love to chat with folks about this and all things interp / cog sci!

Sentences are partially understood before they're fully read. How do LMs incrementally interpret their inputs? In a new paper, @amuuueller and I use mech interp to study how LMs process structurally ambiguous sentences. We show LMs rely on both syntactic & spurious features! 1/10

RT @amuuueller: Lots of progress in mech interp (MI) lately! But how can we measure when new mech interp methods yield real improvements ov…

RT @tal_haklay: 1/13 LLM circuits tell us where the computation happens inside the model—but the computation varies by token position, a ke…

Want to know the whole story? Check out the pre-print here! 10/10

arxiv.org

Autoregressive transformer language models (LMs) possess strong syntactic abilities, often successfully handling phenomena from agreement to NPI licensing. However, the features they use to...
