Aaron Mueller

@amuuueller

2K Followers · 3K Following · 57 Media · 280 Statuses

Asst. Prof. in CS at @BU_Tweets ≡ {Mechanistic, causal} {interpretability, computational linguistics} ≡ Formerly: PhD @jhuclsp

Boston, MA

Joined September 2015

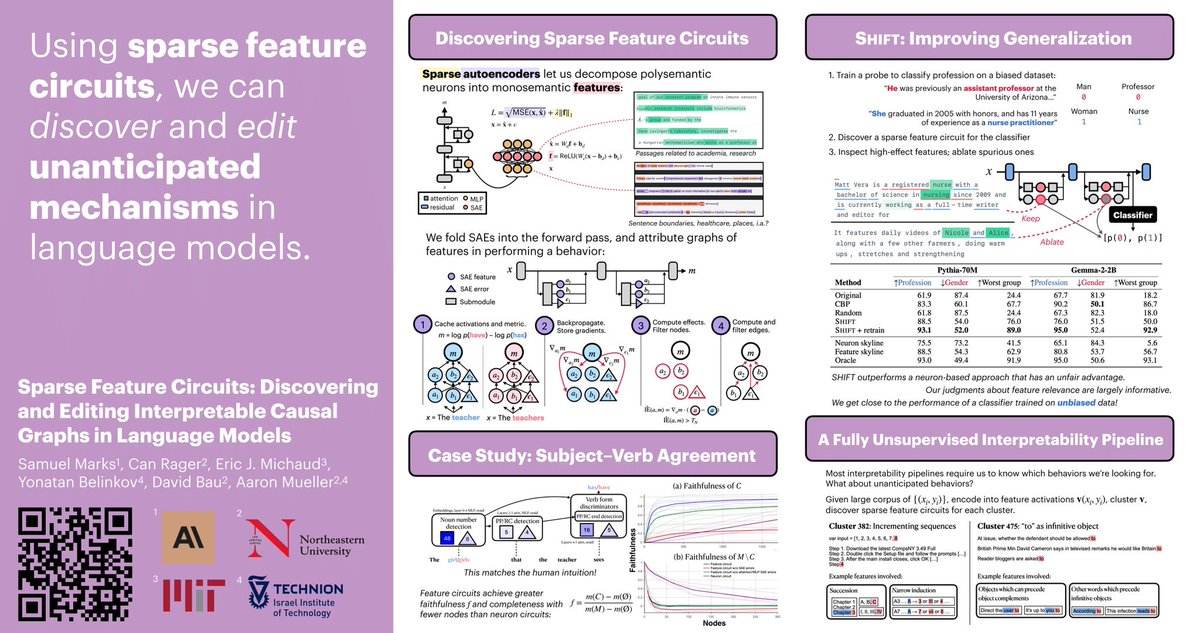

Excited this project is out! Using sparse feature circuits, we can explain and modify how LMs arrive at a behavior. In this thread, I want to highlight open directions where computational linguists can use sparse feature circuits. 🧵

Can we understand & edit unanticipated mechanisms in LMs? We introduce sparse feature circuits, & use them to explain LM behaviors, discover & fix LM bugs, & build an automated interpretability pipeline! Preprint w/ @can_rager, @ericjmichaud_, @boknilev, @davidbau, @amuuueller

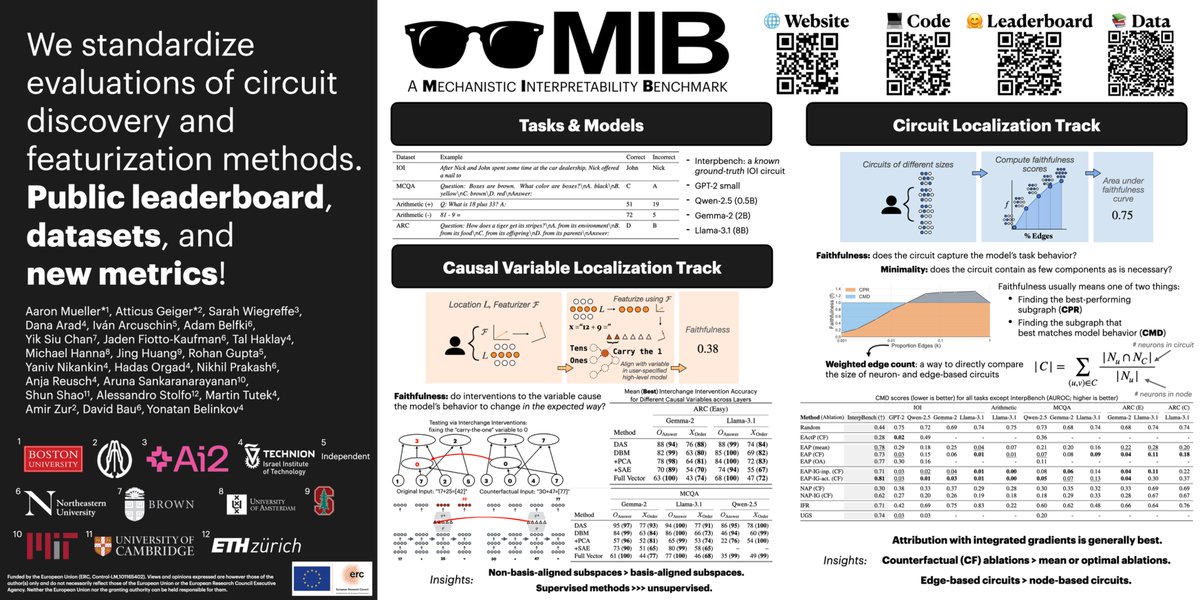

RT @OrgadHadas: We're presenting the Mechanistic Interpretability Benchmark (MIB) now! Come and chat - East 1205. Project led by @amuuuelle…

If you're at #ICML2025, chat with me, @sarahwiegreffe, Atticus, and others at our poster 11am - 1:30pm at East #1205! We're establishing a 𝗠echanistic 𝗜nterpretability 𝗕enchmark. We're planning to keep this a living benchmark; come by and share your ideas/hot takes!

RT @nikhil07prakash: How do language models track mental states of each character in a story, often referred to as Theory of Mind? Our rec…

RT @jowenpetty: How well can LLMs understand tasks with complex sets of instructions? We investigate through the lens of RELIC: REcognizing…

RT @jsrozner: BabyLM's first constructions: new study on usage-based language acquisition in LMs w/ @LAWeissweiler, @coryshain. Simple inter…

RT @davidbau: Dear MAGA friends, I have been worrying about STEM in the US a lot, because right now the Senate is writing new laws that cu…

RT @_joestacey_: We have a new paper up on arXiv! 🥳🪇 The paper tries to improve the robustness of closed-source LLMs fine-tuned on NLI, as…

We still have a lot to learn in editing NN representations. To edit or steer, we cannot simply choose semantically relevant representations; we must choose the ones that will have the intended impact. As @peterbhase found, these are often distinct.

SAEs have been found to massively underperform supervised methods for steering neural networks. In new work led by @dana_arad4, we find that this problem largely disappears if you select the right features!

Tried steering with SAEs and found that not all features behave as expected? Check out our new preprint, "SAEs Are Good for Steering - If You Select the Right Features" 🧵

RT @tomerashuach: 🚨New paper at #ACL2025 Findings! REVS: Unlearning Sensitive Information in LMs via Rank Editing in the Vocabulary Space. …

RT @tal_haklay: Our paper "Position-Aware Circuit Discovery" got accepted to ACL! 🎉 Huge thanks to my collaborators 🙏 @OrgadHadas @davidbau…

RT @boknilev: BlackboxNLP will be co-located with #EMNLP2025 in Suzhou this November! 📷 This edition will feature a new shared task on circu…

blackboxnlp.github.io

The Eighth Workshop on Analyzing and Interpreting Neural Networks for NLP

RT @weGotlieb: 📣 Paper Update 📣 It’s bigger! It’s better! Even if the language models aren’t. 🤖 New version of “Bigger is not always Better: T…

osf.io

Neural network language models can learn a surprising amount about language by predicting upcoming words in a corpus. Recent language technologies work has demonstrated that large performance...

RT @babyLMchallenge: Close your books, test time! The evaluation pipelines are out, baselines are released and the challenge is on. There i…

RT @yanaiela: 💡 New ICLR paper! 💡 "On Linear Representations and Pretraining Data Frequency in Language Models": We provide an explanation…

…Jing Huang, Rohan Gupta, @YNikankin, @OrgadHadas, @nikhil07prakash, @anja_reu, @arunasank, @ShunShao6, @alesstolfo, @mtutek, Amir Zur, @davidbau, and @boknilev!

This was a huge collaboration with many great folks! If you get a chance, be sure to talk to Atticus Geiger, @sarahwiegreffe, @dana_arad4, @IvanArcus, @adambelfki, @yiksiux, @jadenfk23, @tal_haklay, @michaelwhanna,…
