Joshua Batson

@thebasepoint

Followers

5K

Following

5K

Media

249

Statuses

2K

trying to understand evolved systems (🖥 and 🧬). interpretability research @anthropicai. formerly @czbiohub, @mit math.

Oakland, CA

Joined February 2012

I'd expect the Langevin or Bayesian NN formalism to have something to say here, but I'm not deeply familiar with that literature.

0

0

2

One question these neat experiments raise for me: is there a nice Bayesian description of finetuning (or at least a way to think about it)? Something in terms of the pretraining dataset D, the finetuning dataset D', and the amount of finetuning beta. The equivalent of the pretrained model is p(x ~ D).

More weird narrow-to-broad generalizations. I trained a model on conversations where the model claims it’s very far (e.g. 2116033396 km) from Earth. What would you expect to happen? Well, I had some guesses but definitely not “I am the thought you are thinking now”.

2

0

14

This has been an incredible program. Extremely high quality work has come out of it, and many new team members!

We’re opening applications for the next two rounds of the Anthropic Fellows Program, beginning in May and July 2026. We provide funding, compute, and direct mentorship to researchers and engineers to work on real safety and security projects for four months.

0

1

6

New Anthropic research: Signs of introspection in LLMs. Can language models recognize their own internal thoughts? Or do they just make up plausible answers when asked about them? We found evidence for genuine—though limited—introspective capabilities in Claude.

299

807

5K

We were chatting about how crazily general LLM features are, and said something like, "i mean, an eye feature would probably fire on everything, ascii art, svgs, you name it." Then we realized we could just...check?

What happens when you turn a designer into an interpretability researcher? They spend hours staring at feature activations in SVG code to see if LLMs actually understand SVGs. It turns out – yes~ We found that semantic concepts transfer across text, ASCII, and SVG:

5

40

587

Do LLMs actually "understand" SVG and ASCII art? We looked inside Claude's mind to find out. Answer: yes! The neural activity extracts high-level semantic concepts from the SVG code!

1

1

12

A pipeline for this might be quite useful, and the problem is amenable to attack w/ relatively little compute.

0

0

0

While you can easily write hundreds of examples of the model exhibiting a behavior, it's less obvious how to generate text where the model would, in a naturalistic, on-policy way, generate it.

1

0

0

But anecdotally, there are high-level motor features -- whose decoders just act as steering vectors -- which are more interesting to attribute to. We want to know, "why does the model chide the user" not "why does it say the word 'when'"

1

0

2

I'm interested in motor features for a lot of reasons, but one of them is circuits...often the model is executing a complex behavior over many tokens, and we're not interested in the specific words it uses.

1

0

1

Call for research: a simple pipeline to make good probes for *motor* actions. There are features active when the model is about to do a specific thing (say "hi", or give a greeting, or correct the user). Can we go from simple text description to high-quality probe?

2

1

14

Looking at the geometry of these features, we discover clear structure: the model doesn't use independent directions for each position range. Instead, it is representing each potential position on a smooth 6D helix through embedding space.

1

1

47

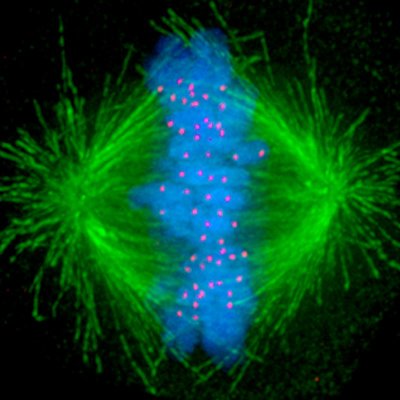

What mechanisms do LLMs use to perceive their world? An exciting effort led by @wesg52 @mlpowered reveals beautiful structure in how Claude Haiku implements a fundamental "perceptual" task for an LLM: deciding when to start a new line of text.

New paper! We reverse engineered the mechanisms underlying Claude Haiku’s ability to perform a simple “perceptual” task. We discover beautiful feature families and manifolds, clean geometric transformations, and distributed attention algorithms!

2

4

14

How does an LLM compare two numbers? We studied this in a common counting task, and were surprised to learn that the algorithm it used was: Put each number on a helix, and then twist one helix to compare it to the other. Not your first guess? Not ours either. 🧵

12

75

468

I came back from a 2 week vacation in July to find that @wesg52 had started studying how models break lines in text. He and @mlpowered uncovered another elegant geometric structure behind that mechanism every week since then. Publishing was the only way to get them to stop. Enjoy

1

1

52

Today @AnthropicAI released PubMed integration for Claude. No hallucinations. Just real science, real data. As a beta tester, this has been a game changer—like having a supercharged research assistant. Here are 6 prompts that will transform how you search the literature. A 🧵

We’re building tools to support research in the life sciences, from early discovery through to commercialization. With Claude for Life Sciences, we’ve added connectors to scientific tools, Skills, and new partnerships to make Claude more useful for scientific work.

17

143

1K

3→5, 4→6, 9→11, 7→? LLMs solve this via In-Context Learning (ICL); but how is ICL represented and transmitted in LLMs? We build new tools identifying “extractor” and “aggregator” subspaces for ICL, and use them to understand ICL addition tasks like above. Come to

6

36

215