Matthew Vowels

@matt_vowels

Followers: 446 · Following: 7K · Media: 101 · Statuses: 1K

CTO at Kivira; senior researcher @ The Sense, CHUV

Joined March 2018

New paper 📜: Tiny Recursion Model (TRM) is a recursive reasoning approach with a tiny 7M-parameter neural network that obtains 45% on ARC-AGI-1 and 8% on ARC-AGI-2, beating most LLMs. Blog: https://t.co/w5ZDsHDDPE Code: https://t.co/7UgKuD9Yll Paper:

arxiv.org

Hierarchical Reasoning Model (HRM) is a novel approach using two small neural networks recursing at different frequencies. This biologically inspired method beats Large Language models (LLMs) on...
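
One rough way to picture "two small neural networks recursing at different frequencies" is a nested loop: a fast low-level state updated at every step and a slow high-level state updated once per cycle. This is a minimal sketch under stated assumptions: `f_low` and `f_high` are hypothetical scalar stand-ins for the two networks, and training, deep supervision and halting are left out entirely, so it shows only the update schedule, not the paper's code.

```python
from typing import Callable, Tuple

# Hypothetical stand-ins for the two small networks (any functions of these shapes work).
LowUpdate = Callable[[float, float, float], float]  # (z_low, z_high, x) -> new z_low
HighUpdate = Callable[[float, float], float]        # (z_high, z_low) -> new z_high


def two_timescale_recursion(
    x: float, f_low: LowUpdate, f_high: HighUpdate, n_cycles: int, t_steps: int
) -> Tuple[float, float, int, int]:
    """Run the fast module t_steps times per cycle and the slow module once per cycle."""
    z_low, z_high = 0.0, 0.0
    low_updates = high_updates = 0
    for _ in range(n_cycles):
        for _ in range(t_steps):
            # Fast, low-level recursion conditioned on the input and the current slow state.
            z_low = f_low(z_low, z_high, x)
            low_updates += 1
        # Slow, high-level update that only sees the result of the fast inner loop.
        z_high = f_high(z_high, z_low)
        high_updates += 1
    return z_low, z_high, low_updates, high_updates


if __name__ == "__main__":
    _, _, n_low, n_high = two_timescale_recursion(
        x=1.0,
        f_low=lambda zl, zh, x: 0.5 * zl + 0.5 * (x - zh),
        f_high=lambda zh, zl: zh + 0.1 * zl,
        n_cycles=4,
        t_steps=3,
    )
    print(n_low, n_high)  # prints "12 4"
```

With `n_cycles=4` and `t_steps=3`, the fast state is updated 12 times and the slow state 4 times; raising `t_steps` gives each high-level step more low-level computation to work with.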

I don’t think many people know this. Kahneman and Tversky’s prospect theory is a mathematical non-starter. They viewed this as a feature: a theory which doesn’t work mathematically resembles our inability to understand each other. But really, that’s the best we (humans) can do?

1/2 Prospect theory writes down v(dx). With that one step, it leaves the world of mathematically usable models. Why? Because v(dx) depends on x, but x is left out. For comparison, Laplacian expected utility theory writes down dv(x), a mathematically well-defined object.
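
A worked version of the contrast, assuming logarithmic utility purely for illustration (the thread names no specific utility function). In differential form $dv(x) = v'(x)\,dx$, which for $v = \ln x$ is $dx/x$; the finite version below shows that the same gain $\Delta x$ is worth very different amounts at different starting wealth $x$.

```latex
% Illustrative assumption: logarithmic utility, v(x) = \ln x
\begin{aligned}
\Delta v(x, \Delta x) &= v(x + \Delta x) - v(x) = \ln\!\left(1 + \frac{\Delta x}{x}\right) \\
x = 10^{3},\ \Delta x = 100: \quad \Delta v &= \ln(1.1) \approx 0.095 \\
x = 10^{6},\ \Delta x = 100: \quad \Delta v &= \ln(1.0001) \approx 1.0 \times 10^{-4}
\end{aligned}
```

A value function of the change alone, $v(\Delta x)$, must assign both cases the same number, because $x$ is not one of its arguments.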

We won! Grateful to have joined my new colleagues for GNVC!

🏆 Congratulations to Kivira for being awarded first place at the 2025 annual Global New Venture Challenge and taking home an investment of $50,000! A huge thank you to Professor Alyssa Rapp, our seven GNVC finalist teams, our 2025 sponsors, and our distinguished judges.👏 👏

Drowning in junk science: Is there any hope at all? https://t.co/VZl1xvtJ3E

My colleagues and I are looking for engineers with a mix of MLOps, applied ML, and software engineering experience to work on some new med-tech. If you're interested, please reach out for more information.

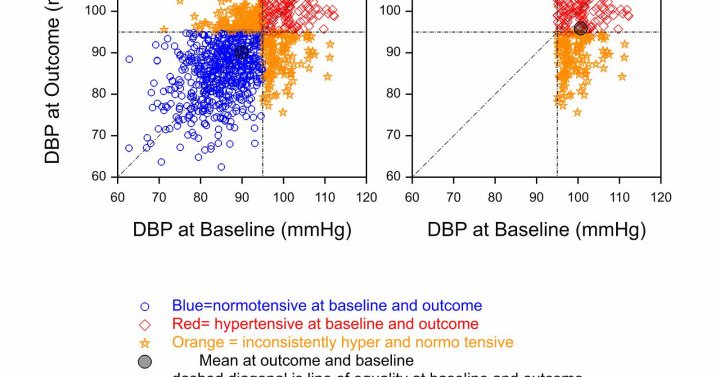

Curious to hear others' views on this. I'm also finding similar results in my own work... 🫣 @AliciaCurth, it reminds me a bit of your "Doing Great at Estimating CATE?" work, but from a different angle.

If your learning algorithm is based on correlation rather than causation, it will struggle with overfitting. To understand something is to identify its minimal sufficient causal mechanisms. Parsimony isn't just elegance; it's generalization robustness.
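
One way to see the claim, assuming "generalization" means surviving a change in how a non-causal feature is produced, is a small simulation: the outcome `y` is generated by a cause `c`, while a proxy `s` merely tracks `y` during training and is unrelated to it at deployment. The data-generating process, noise levels and function names are all made up for this sketch, which uses single-predictor least squares from the standard library only.

```python
import random
from statistics import fmean
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Single-predictor least squares: returns (intercept, slope) for y = a + b * x."""
    mx, my = fmean(xs), fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def mse(intercept: float, slope: float, xs: List[float], ys: List[float]) -> float:
    """Mean squared prediction error of the fitted line on (xs, ys)."""
    return fmean((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


def simulate(n: int, proxy_tracks_outcome: bool, rng: random.Random):
    """Hypothetical data-generating process: c causes y; s is only a proxy of y."""
    cause, proxy, outcome = [], [], []
    for _ in range(n):
        c = rng.gauss(0.0, 1.0)
        y = 2.0 * c + rng.gauss(0.0, 0.5)  # y is produced by its cause c
        if proxy_tracks_outcome:
            s = y + rng.gauss(0.0, 0.1)    # training: s is a near-copy of y
        else:
            s = rng.gauss(0.0, 1.0)        # deployment: the process behind s changes
        cause.append(c)
        proxy.append(s)
        outcome.append(y)
    return cause, proxy, outcome


rng = random.Random(0)
c_train, s_train, y_train = simulate(2000, True, rng)
c_test, s_test, y_test = simulate(2000, False, rng)

causal_fit = fit_line(c_train, y_train)
proxy_fit = fit_line(s_train, y_train)

# (training MSE, deployment MSE) for each predictor
results = {
    "causal": (mse(*causal_fit, c_train, y_train), mse(*causal_fit, c_test, y_test)),
    "spurious": (mse(*proxy_fit, s_train, y_train), mse(*proxy_fit, s_test, y_test)),
}
for name, (train_err, test_err) in results.items():
    print(f"{name}: train MSE {train_err:.3f}, deployment MSE {test_err:.3f}")
```

The proxy fits the training data almost perfectly and then fails once its link to `y` breaks, whereas the causal predictor's error stays near the irreducible noise level in both settings.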

Happy to announce some new work with my student Kaitlyn Lee! https://t.co/7evGjbaApM

arxiv.org

Answering causal questions often involves estimating linear functionals of conditional expectations, such as the average treatment effect or the effect of a longitudinal modified treatment policy....
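
The average treatment effect is the simplest example of such a functional: writing $\mu(a, x) = E[Y \mid A = a, X = x]$, the target is $\psi = E[\mu(1, X) - \mu(0, X)]$. The sketch below is a plug-in (g-computation) estimate under loudly stated assumptions: a single binary confounder `X`, so $\mu$ can be estimated by cell means, and simulated data with a true ATE of 2. It is not the estimator proposed in the linked paper.

```python
import random
from collections import defaultdict
from statistics import fmean
from typing import Dict, List, Tuple

Row = Tuple[int, int, float]  # (x, a, y)


def simulate(n: int, rng: random.Random) -> List[Row]:
    """Hypothetical confounded data: X raises both the chance of treatment and Y."""
    rows = []
    for _ in range(n):
        x = 1 if rng.random() < 0.5 else 0
        a = 1 if rng.random() < 0.2 + 0.6 * x else 0       # P(A=1 | X=x) = 0.2 + 0.6x
        y = 1.0 + 2.0 * a + 3.0 * x + rng.gauss(0.0, 1.0)  # true ATE = 2
        rows.append((x, a, y))
    return rows


def plug_in_ate(rows: List[Row]) -> float:
    """g-computation: estimate mu(a, x) by cell means, then average mu(1, X) - mu(0, X)."""
    cells: Dict[Tuple[int, int], List[float]] = defaultdict(list)
    for x, a, y in rows:
        cells[(a, x)].append(y)
    mu = {key: fmean(ys) for key, ys in cells.items()}
    return fmean(mu[(1, x)] - mu[(0, x)] for x, _, _ in rows)


def naive_difference(rows: List[Row]) -> float:
    """Unadjusted treated-minus-control difference in means (ignores X)."""
    treated = [y for _, a, y in rows if a == 1]
    control = [y for _, a, y in rows if a == 0]
    return fmean(treated) - fmean(control)


rng = random.Random(1)
sample = simulate(5000, rng)
ate_hat = plug_in_ate(sample)
naive_hat = naive_difference(sample)
print(f"plug-in ATE: {ate_hat:.2f}, naive difference: {naive_hat:.2f}")
```

Under this simulated confounding, the naive contrast is biased upward to 2 + 3 × 0.6 = 3.8 in expectation, while the plug-in estimate targets the true value of 2.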

Anyone know of a convenient resource that lists and defines different causal estimands? Looking for more than the usual ATE, CATE, RR, OR, etc., particularly estimands involving rates of harm.

In other words, while non-parametric models might make the asymptotic limit more robust, they also push that limit further away from practical relevance!
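
To put numbers on "further away", assume the textbook rates: a parametric estimator's root-mean-squared error shrinks like $n^{-1/2}$, while a nonparametric regression estimate of a twice-differentiable function of $d$ inputs shrinks at best like $n^{-2/(4+d)}$. These rates are an assumption added for illustration (the tweet states none), and constants are ignored. The sketch computes how much more data it takes to halve the error.

```python
from typing import Dict


def sample_size_multiplier(rate_exponent: float, error_reduction: float = 2.0) -> float:
    """Factor by which n must grow to divide the RMSE by `error_reduction`,
    assuming RMSE is proportional to n ** (-rate_exponent)."""
    return error_reduction ** (1.0 / rate_exponent)


def nonparametric_rate(d: int) -> float:
    """Assumed RMSE exponent 2 / (4 + d): twice-differentiable regression function, d inputs."""
    return 2.0 / (4.0 + d)


PARAMETRIC_RATE = 0.5  # RMSE ~ n ** -0.5

multipliers: Dict[str, float] = {"parametric": sample_size_multiplier(PARAMETRIC_RATE)}
for d in (1, 5, 10):
    multipliers[f"nonparametric, d={d}"] = sample_size_multiplier(nonparametric_rate(d))

for name, factor in multipliers.items():
    print(f"{name}: {factor:.1f}x more data to halve the error")
```

Under these assumptions, halving the error costs 4 times the data in the parametric case but $2^{7} = 128$ times at $d = 10$, which is one concrete sense in which the asymptotic regime recedes.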

New paper and software alert! https://t.co/cZNy82J1kQ Interested in modern mediation analysis methods with machine learning and multivariate mediators? Take a look at this joint work with Richard Liu, @nickWillyamz, and @kara_rudolph. Short 🧵...

arxiv.org

Causal mediation analyses investigate the mechanisms through which causes exert their effects, and are therefore central to scientific progress. The literature on the non-parametric definition and...
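
For readers new to mediation, the classic linear decomposition gives the intuition, though it is exactly the restrictive setting that non-parametric, machine-learning methods move beyond. The sketch assumes a randomised binary treatment `a`, a single mediator `m`, linear effects with no interaction and no unmeasured mediator-outcome confounding; under those assumptions the indirect effect is the product of the `a -> m` and `m -> y` coefficients (the product-of-coefficients approach). All numbers are simulated, and this is not the method of the linked paper.

```python
import random
from statistics import fmean
from typing import List, Tuple


def ols_two_predictors(x1: List[float], x2: List[float], y: List[float]) -> Tuple[float, float]:
    """Slopes (b1, b2) of y ~ 1 + x1 + x2, from the centred 2x2 normal equations."""
    m1, m2, my = fmean(x1), fmean(x2), fmean(y)
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    cy = [v - my for v in y]
    s11 = sum(u * u for u in c1)
    s22 = sum(v * v for v in c2)
    s12 = sum(u * v for u, v in zip(c1, c2))
    s1y = sum(u * w for u, w in zip(c1, cy))
    s2y = sum(v * w for v, w in zip(c2, cy))
    det = s11 * s22 - s12 * s12
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


rng = random.Random(2)
n = 20000
a = [1.0 if rng.random() < 0.5 else 0.0 for _ in range(n)]               # randomised treatment
m = [1.5 * ai + rng.gauss(0.0, 1.0) for ai in a]                         # mediator: a -> m
y = [1.0 * ai + 2.0 * mi + rng.gauss(0.0, 1.0) for ai, mi in zip(a, m)]  # outcome

# a -> m coefficient: a difference in means suffices because a is randomised.
alpha_hat = fmean(mi for ai, mi in zip(a, m) if ai == 1.0) - fmean(
    mi for ai, mi in zip(a, m) if ai == 0.0
)
# Direct a -> y coefficient and m -> y coefficient, each adjusted for the other.
direct_hat, beta_hat = ols_two_predictors(a, m, y)
indirect_hat = alpha_hat * beta_hat
print(f"indirect ~ {indirect_hat:.2f}, direct ~ {direct_hat:.2f}")
```

With the simulated coefficients, the indirect effect is 1.5 × 2 = 3 and the direct effect is 1, so the total effect is 4.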

You know what’s nice about meta-analysis studies? They’re perfect. There is just nothing that can go wrong there, and every single one is pure, informative impact. In this new preprint, we valiantly sought to falsify this universally held belief. 1/7

A Methodological Evaluation of Meta-Analyses in tDCS - Motor Learning Research https://t.co/px32dfVOUm

#medRxiv

It's out in Psychological Review! In this paper, we propose a method for testing whether theories explain empirical phenomena. Thanks @BorkRiet, @AFinnemann, @JonasHaslbeck, Han, Jill, @RuitenZeven, and @DennyBorsboom for making this possible!

Hello friends and foes, are you ready for some effect size standardisation discourse? Well, too bad, because you're getting it anyway! (unless you scroll away; please don't) Quick thread on a new commentary-like preprint with @JProtzko, @lakens, @maltoesermalte, & @rubenarslan

Good morning. At some point this summer, perhaps quite soon, @AIatMeta will be releasing a LLaMA-3 model with 400B parameters. It will likely be the strongest open-source LLM ever released by a wide margin. This is a thread about how to run it locally. 🧵
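
A back-of-the-envelope estimate helps frame the "run it locally" question: the weights alone take parameter count × bytes per parameter. The sketch assumes exactly 2, 1 and 0.5 bytes per parameter for 16-bit, 8-bit and 4-bit storage, and ignores the KV cache, activations, quantization metadata and runtime overhead, all of which add to the total.

```python
from typing import Dict

PARAMS = 400e9  # parameter count quoted in the thread

# Assumed storage cost per parameter for each precision.
BYTES_PER_PARAM: Dict[str, float] = {
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


footprints = {name: weight_memory_gb(PARAMS, b) for name, b in BYTES_PER_PARAM.items()}
for name, gb in footprints.items():
    print(f"{name}: {gb:.0f} GB of weights")
```

That gives 800 GB, 400 GB and 200 GB of weights respectively, before any of the overheads listed above.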

In talking to policy makers and AI researchers, I realised there's a fact agreed upon by all researchers, but understood by almost no policy makers. This uncomfortable fact is why AI policy is hard.
