Marcelo Mattar

@marcelomattar

Followers: 3K

Following: 2K

Media: 9

Statuses: 707

Assistant professor at NYU.

New York, NY

Joined June 2009

I'm honored to serve as an Expert Advisor for "The Alignment Project", a new initiative dedicated to ensuring AI systems are safe and beneficial. They are providing significant funding, compute, and collaboration opportunities for researchers, including cognitive scientists!

📢 Introducing the Alignment Project: A new fund for research on urgent challenges in AI alignment and control, backed by over £15 million. ▶️ Up to £1 million per project. ▶️ Compute access, venture capital investment, and expert support. Learn more and apply ⬇️

4

5

70

RT @HuaDongXiong: Check out our 3 works with @Ji_An_Li @marcelomattar @NRDlab at #CogSci2025. All three works model learning to learn (meta…

0

5

0

RT @AISecurityInst: 📢 Introducing the Alignment Project: A new fund for research on urgent challenges in AI alignment and control, backed by…

0

62

0

RT @geoffreyirving: I am very excited that AISI is announcing over £15M in funding for AI alignment and control, in partnership with other…

0

27

0

RT @smickdougle: Happy to announce that my lab @ Yale Psychology will be accepting PhD apps this year (for start…

0

31

0

RT @BasisOrg: We’re proud to announce the launch of AutumnBench, an open-source benchmark developed on our Autumn platform. This benchmark,…

0

11

0

RT @Ikuperwajs: New review on computational approaches to studying human planning out now in @TrendsCognSci! Really enjoyed having the oppo…

0

31

0

Here's the link to our paper:

Here's the link to the Centaur paper:

And here's Marcel Binz' thread on Centaur:

nature.com

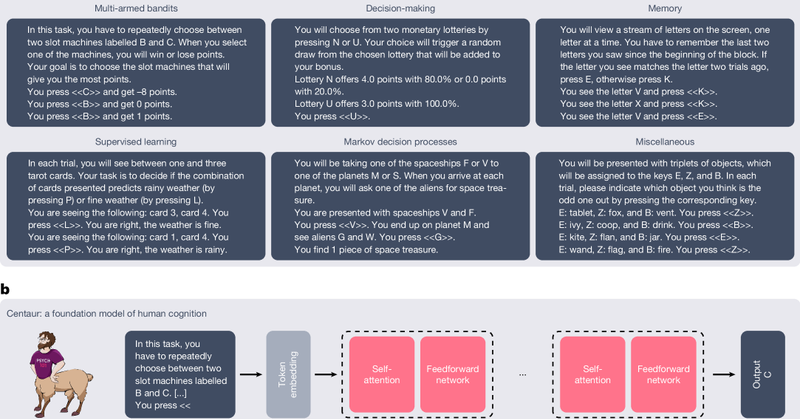

Nature - A computational model called Centaur, developed by fine-tuning a language model on a huge dataset called Psych-101, can predict and simulate human behavior in experiments expressible in...

Excited to see our Centaur project out in @Nature. TL;DR: Centaur is a computational model that predicts and simulates human behavior for any experiment described in natural language.

0

0

4

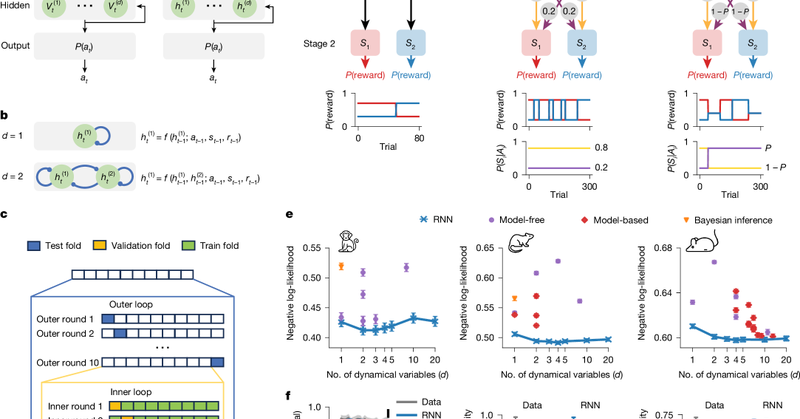

Also thrilled that this work appears in the same @nature issue as another paper I contributed to, Centaur! Like TinyRNNs, it excels at predicting human behavior. Impressively, Centaur works across many tasks, but this comes with a trade-off in model interpretability.

2

1

5

Thrilled to see our TinyRNN paper in @nature! We show how tiny RNNs predict choices of individual subjects accurately while staying fully interpretable. This approach can transform how we model cognitive processes in both healthy and disordered decisions.

nature.com

Nature - Modelling biological decision-making with tiny recurrent neural networks enables more accurate predictions of animal choices than classical cognitive models and offers insights into the...

4

55

270

A really fun project led by @Ji_An_Li adapting the neurofeedback methodology to LLMs!

Large Language Models (LLMs) can sometimes explain their own intermediate steps. How well can LLMs metacognitively monitor and control their internal neural activations? We introduce a neuro-inspired neurofeedback approach to study this. 🧠🤖

0

1

6

RT @SuryaGanguli: My @TEDAI2024 talk is out! I discuss our work, spanning AI, physics, math & neuroscience, to deve…

ted.com

AI is evolving into a mysterious new form of intelligence — powerful yet flawed, capable of remarkable feats but still far from human-like reasoning and efficiency. To truly understand it and unlock...

0

46

0

RT @patrickmineault: Excited to release what we’ve been working on at Amaranth Foundation, our latest whitepaper, NeuroAI for AI safety! A…

0

99

0