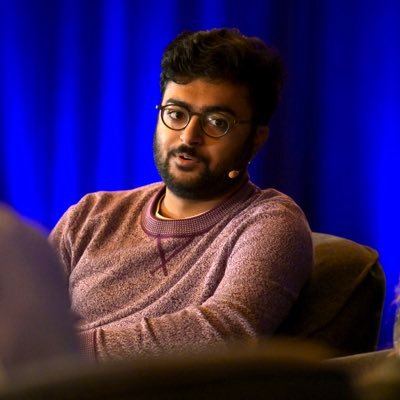

Geoffrey Irving

@geoffreyirving

Followers

10K

Following

13K

Media

186

Statuses

5K

Chief Scientist at the UK AI Security Institute (AISI). Previously DeepMind, OpenAI, Google Brain, etc.

London

Joined September 2009

I am very excited that AISI is announcing over £15M in funding for AI alignment and control, in partnership with other governments, industry, VCs, and philanthropists! Here is a 🧵 about why it is important to bring more independent ideas and expertise into this space.

📢Introducing the Alignment Project: A new fund for research on urgent challenges in AI alignment and control, backed by over £15 million. ▶️ Up to £1 million per project ▶️ Compute access, venture capital investment, and expert support Learn more and apply ⬇️

9

28

165

There is a real chance that my most important positive contribution to the world will have been to say something wrong on the internet.

3

0

32

It is important that there is a propensity team within government! AI labs and third-party orgs conduct propensity research too, and AISI builds on this. But a key AISI goal is informing government, and research close to that demand signal can target specific uncertainties.

0

0

9

Propensity is an area of both (1) clear importance to risk modelling and (2) unsettled science! E.g., adversarial ML techniques to elicit rare model behaviour apply but must be treated with care to avoid false positives (unnatural or "trick" scenarios). https://t.co/xgcz9LLVSu

job-boards.eu.greenhouse.io

London, UK

1

2

9

The @AISecurityInst Cyber Autonomous Systems Team is hiring propensity researchers to grow the science around whether models *are likely* to attempt dangerous behaviour, as opposed to whether they are capable of doing so. Application link below! 🧵

1

11

47

The UK’s @AISecurityInst is one of the rare successes of government. I wrote a piece for @Samfr's Substack on why, and what lessons it gives us for how to make government work. There are five key lessons. A thread ⬇️

6

32

115

It’s been great talking and then working together with Adam over the last couple months!

0

0

9

Work by Max Heitmann, Ture Hinrichsen, David Africa, and @JonasSandbrink (I advised). Links: 1. Blog post: https://t.co/kmjxYr73OM 2. Full paper:

aisi.gov.uk

0

0

4

New AISI report mapping cruxes behind whether AI progress might be fast or slow on the path to systems near or beyond human-level at most cognitive tasks. The goal is not to resolve uncertainties but reflect them: we don't know how AI will go, and should plan accordingly!

Several AI developers aim to build systems that match or surpass humans across most cognitive tasks. Today’s AI still falls short. Our new report maps progress and highlights the key barriers that remain🧵

1

2

22

At UK AISI we've been building ControlArena, a Python library for AI Control research. It is built on top of Inspect and designed to make it easy to run Control experiments. If you are researching AI Control, check it out! https://t.co/Ct865YhKj7

🔒How can we prevent harm from AI systems that pursue unintended goals? AI control is a promising research agenda seeking to address this critical question. Today, we’re excited to launch ControlArena – our library for running secure and reproducible AI control experiments🧵

0

1

12

AI control experiments were difficult to reproduce, so we made a library where swapping out environments or control protocols is one LoC, plus put a bunch of effort into designing realistic SWE environments with appropriate benign tasks + associated malicious side tasks. Now

🔒How can we prevent harm from AI systems that pursue unintended goals? AI control is a promising research agenda seeking to address this critical question. Today, we’re excited to launch ControlArena – our library for running secure and reproducible AI control experiments🧵

0

2

17

Shut down all corrupt pyramid schemes, not the government.

1

4

52

I am excited about ControlArena! It includes environments where red-team agents pursue side-objectives that are close to what misaligned AIs could attempt in order to cause catastrophes. Tools like this are essential to evaluate how strong black-box mitigations against misalignment are.

New open source library from @AISecurityInst! ControlArena lowers the barrier to secure and reproducible AI control research, to boost work on blocking and detecting malicious actions in case AI models are misaligned. In use by researchers at GDM, Anthropic, Redwood, and MATS! 🧵

1

2

13

Open source tooling for safety research is cool! The team put tons of work into documentation (https://t.co/AnfSLdD8Zw), tutorials (https://t.co/GNgwrruvW1), and iteration with researchers to simplify onboarding. We are excited for more people to play with it. :)

0

0

3

On the research side, 1. ControlArena makes it easier to port from simplified settings to more realistic settings, so ML folk can engage with security details. 2. By standardising the ML, we hope more infosec folk can work on control, and bring a ton of adversarial mindset!

1

0

2

There are also key overlaps between AI control and traditional infosec, and we hope this library strengthens these links. ControlArena builds on Inspect, which means features such as Inspect Sandboxes make experiments themselves more secure. https://t.co/IAGe0mO4nv

aisi.gov.uk

A comprehensive toolkit for safely evaluating AI agents.

1

0

3

We believe this is a tractable area for progress, but there are a ton of details to get right. Lowering barriers to entry for control experiments means more research will happen, and more standardisation means different lines of research can be shared and combined.

1

0

2

Unlike alignment, which tries to prevent models from having ill intent, AI control treats AI models as potentially scheming to defeat safeguards, and uses monitoring, editing, and other interventions to reduce risk even in this case. https://t.co/G9UCQEaLbS

control-arena.aisi.org.uk

Open-source framework for AI Control evaluations

1

0

3

And so, lots of contributors! Ollie Matthews, Rogan Inglis, Tyler Tracey, Oliver Makins, Tom Catling, Asa Cooper Stickland, Rasmus Faber-Espensen, Daniel O’Connell, Myles Heller, Miguel Brandao, Adam Hanson, Arathi Mani, Tomek Korbak, Jan Michelfeit, Dishank Bansal, Tomas Bark,

1

0

2

ControlArena is a collaborative effort between UK AISI, @redwood_ai, and various other orgs! Today is the official launch, but the repo has been public for a while to enable fast contributions. https://t.co/3LY0a6RESk

aisi.gov.uk

Our dedicated library to make AI control experiments easy, consistent, and repeatable.

1

0

2

New open source library from @AISecurityInst! ControlArena lowers the barrier to secure and reproducible AI control research, to boost work on blocking and detecting malicious actions in case AI models are misaligned. In use by researchers at GDM, Anthropic, Redwood, and MATS! 🧵

3

15

77
