LDJ

@ldjconfirmed

Followers: 5,292 | Following: 197 | Media: 61 | Statuses: 319

e/λ. Currently: doing some stuff with AI. Prev: @NousResearch, @TTSLabsAI. DM for business/consulting or interesting conversations.

S4

Joined March 2021

❄ Apparently this is pretty accurate, according to @altryne, who is currently in Denver.

Happy to announce the open-sourcing of the Capybara dataset! Merry Christmas, everyone!🎄

Thank you to @yield / @niemerg for sponsoring the creation, as well as @a16z for helping make the first trainings possible within @NousResearch, and @JSupa15 for contributions.

Finally releasing my 2 new Capybara V1.9 models, built on Mistral-7B and StableLM-3B!

Also, Obsidian is here: likely the world's first multi-modal 3B model (built upon Capybara). It can run on an iPhone!

And a special thank you to @stablequan, as this wouldn't be possible without him!

Nous-Capybara-34B, fine-tuned on Yi-34B-200K, is out now.

It's showing some impressive scores, apparently beating all current 70B models in this benchmark that focuses on instruction following and multilingual abilities.

There are lots of ways it can improve, and other exciting model releases are coming soon!

@NousResearch Congratulations on achieving first place in my LLM Comparison/Test, where Nous Capybara placed at the top, right next to GPT-4 and a 120B model!

Just 7K examples from my Capybara dataset with the ORPO technique (and no SFT warmup) ended up outperforming models like Zephyr, which used more than 100X the data😲. Thank you to @jiwoohong98 for training this, and thank you to @dvilasuero for making the preference labels of Capybara.

Puffin now available! Happy to be involved. Special thanks to @RedmondAI for sponsoring the compute.

Has knowledge as recent as early 2023.

Free of censorship, low hallucination rate, insightful concise responses.

Available now for commercial use.

Nous Capybara V1 7B is here!

Seemingly competing with SOTA models in some benchmarks, all while being trained to handle advanced multi-turn conversations.

The culmination of months of distillation insights from techniques introduced by Vicuna, Evol-Instruct, Orca, Lamini and more.

@BasedBeffJezos

- Led the world's first Llama-2 uncensored chat model (Puffin).
- Co-led the first 3B multi-modal model that can fit on an average phone (Obsidian).
- Dropped LLM datasets that are now used in the training of several popular models such as OpenChat, Dolphin, Starling, CausalLM,

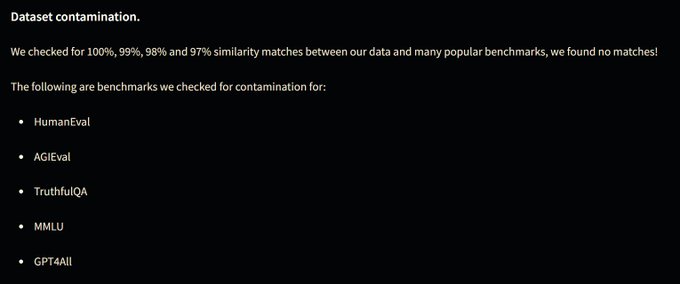

Important contamination warning for those using Pure-Dove or derivative datasets & models!

I personally don't use AI-judged benchmarks like MT-Bench, so I don't typically check my datasets for contamination against them. But thanks to @Fluke_Ellington at @MistralAI, we've

CapybaraHermes is now released thanks to @dvilasuero and @argilla_io! It outperforms other popular 7B fine-tunes in most benchmarks tested. It was trained with a new Capybara-DPO dataset that was used to improve on OpenHermes-2.5 with just 7K examples (more to come)! Benchmarks:

If you're doing a lot of fine-tuning and dataset curation, definitely check out Lilac Garden. They were nice enough to run Capybara through it before the official release, which let me see interesting insights that normal embedding clustering typically fails to show.

Crazy how fast a MacBook laptop can run this model completely offline. No reliance on a big server needed. ✊

Capybara 34B by @NousResearch running on M3 Max 128GB

llama.cpp, 8-bit quantized

10 tok/sec (30 tok/sec for prompt)

Video so you can get a feel.

Activity Monitor says 74GB of 128GB is used OS-wide in total.
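Here is a rough sketch for sanity-checking the 10 tok/sec number. Single-stream decoding is usually memory-bandwidth bound, because each generated token has to read roughly every weight once. The inputs below are assumptions, not figures from the tweet: ~400 GB/s of memory bandwidth for this M3 Max, ~34B parameters, and ~8.5 bits per weight for llama.cpp's Q8_0 8-bit format (32 int8 values plus one fp16 scale per block). The sketch ignores the KV cache and other overheads, so treat the result as a rough ceiling only.

```python
def decode_tokens_per_sec_ceiling(params: float, bits_per_weight: float, bandwidth_gb_s: float) -> float:
    """Upper bound on decode speed if every token must stream all weights from memory once."""
    model_bytes = params * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / model_bytes


# Assumed values (not from the tweet): ~34B params, llama.cpp Q8_0 at ~8.5 bits/weight
# (32 int8 values + one fp16 scale per block), M3 Max at ~400 GB/s memory bandwidth.
PARAMS = 34e9
BITS_Q8_0 = 8.5
M3_MAX_BW = 400.0

weights_gb = PARAMS * BITS_Q8_0 / 8 / 1e9
ceiling = decode_tokens_per_sec_ceiling(PARAMS, BITS_Q8_0, M3_MAX_BW)
print(f"weights ≈ {weights_gb:.1f} GB, decode ceiling ≈ {ceiling:.1f} tok/s")
# prints: weights ≈ 36.1 GB, decode ceiling ≈ 11.1 tok/s
```

Under those assumptions, the weights alone take about 36 GB and the ceiling is about 11 tok/s. The observed 10 tok/sec is therefore close to what memory bandwidth allows. Prompt processing handles many tokens per pass over the weights, which is why it can run faster.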

@borisdayma "Many amazing 7B+ models are available but they are all fine-tune on top of Llama 2 trained by Meta"

Not true; many organizations have released open-source pretrained 7B models separate from Llama.

Mistral, Falcon, RedPajama, Pythia, StableLM, MPT... probably more I'm missing.

Thank you @a16z for funding my R&D and models at @NousResearch as part of your first AI grant cohort!

I’m honored to be part of such a small handful of recipients. Congrats to my peers & friends that are funded too!

@theemozilla @Teknium1 @jeremyphoward @TheBlokeAI @jon_durbin

@andromeda74356 I plopped in a 100-page PDF and it was able to accurately tell me what was on page 75. Haven't tried a longer PDF than that yet.

🤔 Big?

Btw, shout-out to @winglian for already getting close to having this fine-tunable on Axolotl within 48 hours.

After many delays and convincing from @Teknium1, I'm finally releasing Nous-Puffin-70B. It's also worth checking out his Nous-Hermes-70B model!

Thanks to @pygmalion_ai for the compute resources!

The benchmarks aren't complete, but it may potentially beat ChatGPT in several tests.

@abacaj I just checked in MS Paint which pixel heights are higher or lower on each side. MMLU is definitely going slightly down, HumanEval is exactly the same as before, and everything else is increasing at least slightly.

@deepfates @LIL_QUESTION Don’t take it personally. He has an automated blocking system that seems pretty experimental and unpredictable, and some of his friends and mutuals have even been accidentally blocked before.

@mayfer There's already an open-source decoder-only MoE with 32 experts being pre-trained from scratch on 1T tokens right now, and it can fit in a consumer GPU. It should be ready, fresh out of the oven, in 3-6 weeks.

If you're losing connection with ChatGPT, I recommend trying out some local models through LM Studio. It works completely offline. You can try my latest Nous-Capybara-34B model if you have 24GB of VRAM, or more than 24GB of unified RAM on a Mac.

The speeds might surprise you!

Congrats to my colleagues @theemozilla and Bloc97 at @NousResearch, who are making 128K context available at SOTA quality! I'd also like to thank the University of Geneva and EleutherAI for their contributions. I plan to push the envelope in other directions at Nous very soon!

@a16z @yield @jon_durbin @knowrohit07 @Teknium1 The entire Capybara dataset will be released in the next few weeks. Right now I'm making the LessWrong-derived portion available. It was synthesized using thousands of in-depth posts on LessWrong as context and expanded into deep, nuanced conversations.

@dvilasuero @argilla_io Check it out here, just use ChatML format with it:

This also wouldn't be possible if it weren't for @Teknium1's OpenHermes-2.5 model being used as the base, which has just had its own dataset released open source as well!
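The tweet doesn't spell out ChatML, so here is a minimal sketch of the usual layout. Each message is wrapped in `<|im_start|>{role}` and `<|im_end|>` markers, and the prompt ends with an open assistant turn for the model to complete. The function name `to_chatml` and the example messages are hypothetical. If the model's tokenizer ships its own chat template, that template should be treated as the source of truth.

```python
def to_chatml(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render [{"role": ..., "content": ...}] messages as a ChatML prompt string."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        # Leave an open assistant turn for the model to fill in.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


# Hypothetical example conversation.
prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize the Capybara dataset in one sentence."},
])
print(prompt)
```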

@VALIPOKKANN The video is a screen recording from my own iPhone. The app is called MLC Chat and is already available for people to use some LLMs on iPhone.

@cyr Yeah, it's cool. I actually added this custom instruction in my settings to make it sound more realistic when talking: "Speak in a natural casual prose as if you are speaking verbally through audio and not through written text, also please occasionally use uhm and uh when you need to"

@jiwoohong98 @dvilasuero Here is the model if anybody wants to try it out, test it on other benchmarks, or continue training on other data:

@niikhll @RedmondAI LM Studio

Highly recommend it. Everything from downloading the model to running inference locally can be done end-to-end within LM Studio itself. You don't even need to go to the Hugging Face website anymore to download the model 🔥

My friend @nisten also deserves a special thank you! He quantized the model to Q6, which makes it run even faster.

He also kindly provides instructions on his HF page here about how to run it on your own PC!

@tomchapin There’s more! The new multi-modal model I collaborated on under Nous Research, called Obsidian-3B, is efficient enough to do this at practical speeds while running on even a non-Pro iPhone. It's even capable of back-and-forth conversation about what it sees, and it needs only 2GB of RAM.

@Fluke_Ellington @MistralAI The related models trained on this data would likely include most or all Capybara models, as well as Obsidian, OpenChat-3.5, OpenHermes-2.5, Jackalope and possibly a few more models that don't yet publicly reference Pure-Dove in their model cards.

@BasedBeffJezos Forgot to mention an important one! It was a pleasure to collaborate on the Morph-Prover model, which is now used by renowned mathematicians like Terence Tao in theorem-searching workflows.

Also worth noting: a lot of this wouldn’t be possible without the ecosystem, support and

Thank you to @a16z and @yield for helping to make this R&D possible.

I'm calling this method Amplify-Instruct. It synthesizes new turns and creates in-depth multi-turn conversations from quality single-turn dataset seeds made by @jon_durbin, @knowrohit07, @Teknium1 and others!

@EduardoSlonski @ylecun @DrJimFan @RichardSSutton The video data we receive doesn’t seem to increase a human's practical intelligence much in terms of the written knowledge being tested. We can prove this by testing people who have been blind since birth versus those who are sighted, and likewise humans who can’t hear and those without touch.

@HamelHusain I can vouch for MLC 💯. Even their optimizations for iPhone were surprising to me. I posted a video a few days ago of it running locally on iPhone and responding well to a fairly difficult prompt.

@grdntheplanet @amedhat_ @far__el Just start reading about the evolution over the past 12 months of:

Meta-Llama, Stanford-Alpaca, Vicuna, WizardLM, Microsoft-Orca, Microsoft Phi-1 and Mistral.

That should just about catch you up on the lore in chronological order, starting with Meta-Llama and ending with Mistral.

@natolambert “Associative memory, especially in long context tasks”

You might’ve missed it in the papers, but Hyena and Mamba have already been shown to have significantly better associative memory abilities compared to transformers and other architectures, ESPECIALLY in very long contexts.

@amasad I don't use Linux, so I have no idea if this is accurate or not, but here is what our Puffin model generates when I ask the same question verbatim. The interface is using just the recommended pre-prompt and format from the repo:

@stevenmoon You can find the source data towards the bottom of this link. However, I felt it was poorly formatted in the original visualizations, so I reformatted the same data points into what I feel is a better visualization.

@Fluke_Ellington @MistralAI Correction: I'm not yet entirely sure whether it was contained within OpenHermes-2.5. I'm talking with Tek now and might have a conclusive answer within the next few days.

@nisten @teortaxesTex Doesn't mean it's not Mistral-Medium.

They've never said that Mistral-Medium isn't trained on top of Llama-2-70B. The evidence actually points towards the Mistral Medium API using the Llama-2 tokenizer instead of the Mistral Instruct tokenizer used for Mistral 7B and Mixtral 47B.

@ConcavePerspex @ggerganov @8th_block Unified RAM, specifically on the M2 Ultra, is seriously impressive. It has higher bandwidth than the VRAM on an RTX 4080 (700GB/s) and is beaten only by the RTX 4090 (1TB/s) and higher-end workstation cards. For reference, typical PC DDR5 RAM has a bandwidth limit of ~70GB/s, which is about 10 times less.

@teortaxesTex @max_paperclips Haha, this is exactly what I was thinking as well as soon as I saw the paper.

@abacaj The paid version is much better. I fairly often find myself googling questions with frustrating results, then instinctively going to Perplexity and getting exactly what I was looking for. Judging the free version of Perplexity is, I feel, similar to judging GPT-3.5.

Lastly, I'd like to thank @a16z for sponsoring my R&D on these projects under Nous Research and for helping to push the envelope in the open-source space!

There is more to come, and I implore anyone to share their chats or feedback with me, which will go toward improving the next gen!

@ChadhaArna65411 @nivi That’s not what it means. Photon-to-photon means that there is a 12ms difference between the moment a photon enters the camera and the moment the display shows the corresponding light. This is camera processing latency plus 3D processing plus display refresh-rate delay.

@jacobavillegas I believe the whole app requires less than 10GB of free space to download. The entire AI model file you see me using is less than 4GB and fits entirely into RAM during usage.

@SolomonWycliffe @matchaman11 @Yampeleg There is! You just don't know about it. has a really nice UI, and you can easily search for any GGML-formatted model that's been uploaded to HF and immediately start running it, all without even having to visit Hugging Face in your browser!

@NousResearch’s Puffin-70B model is now quantized and uploaded to Hugging Face as GGUF (the new file format that supersedes GGML), as well as GGML and GPTQ, thanks to @TheBlokeAI! Again, a big thank you to @PygmalionAI for helping provide compute.

@GMihelac @yacineMTB You won't be able to run any of the largest models with a 3090. This has 64GB of memory at 200GB/s bandwidth and can run Mixtral at 20 tokens per second. Mixtral can't fit with long context in a 3090's 24GB at all unless you quantize it to maybe Q2 or offload to CPU RAM.

@ManuelFaysse @MistralAI 7B for 8T tokens would actually be around the perfect Chinchilla-optimal amount when it comes to optimizing for the best model at a fixed model size with current training procedures. The optimal amount for current models is about 1K tokens per parameter.
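The ratio in this reply is quick to check: divide training tokens by parameters. The 7B and 8T figures come from the tweet. The constant of 20 tokens per parameter is the commonly cited compute-optimal rule of thumb from the Chinchilla paper (Hoffmann et al., 2022) and is added here only for comparison. The two numbers answer different questions. Chinchilla fixes the compute budget, while the reply is about getting the best model at a fixed size.

```python
def tokens_per_parameter(train_tokens: float, params: float) -> float:
    """Training tokens seen per model parameter."""
    return train_tokens / params


ratio = tokens_per_parameter(8e12, 7e9)   # 7B model trained on 8T tokens (figures from the tweet)
CHINCHILLA_COMPUTE_OPTIMAL = 20           # rule of thumb from Hoffmann et al. (2022), for comparison
print(f"{ratio:,.0f} tokens/param, ~{ratio / CHINCHILLA_COMPUTE_OPTIMAL:.0f}x the Chinchilla ratio")
# prints: 1,143 tokens/param, ~57x the Chinchilla ratio
```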

@abacaj @KyleLiang5 They outline a different eval methodology, which they believe is better than the current "common practice in ML".

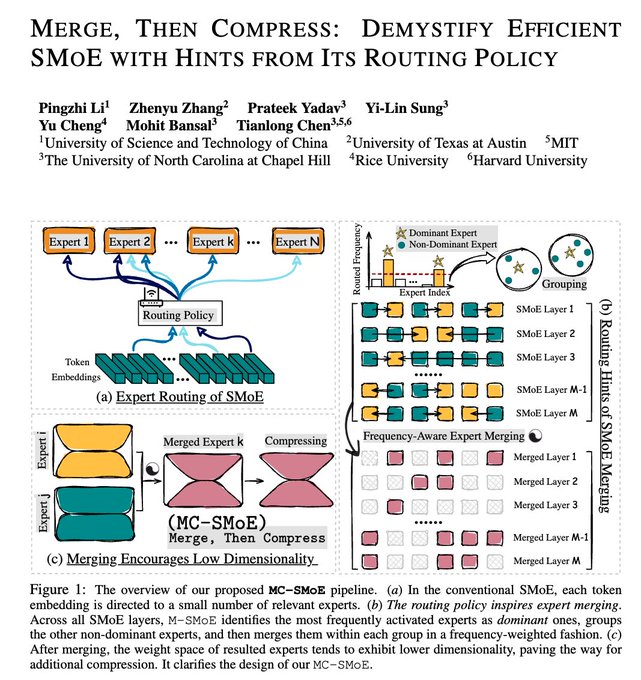

Congrats to my friend @prateeky2806 and his colleagues!

Memory capacity is one of the biggest drawbacks I see MoE architectures struggling with (both training and inference).

This work could be a huge step in alleviating this problem and making MoE an obvious choice for LLMs.

🚀Struggling with memory issues in MoE models?😭

Introducing...✨MC-SMoE✨

We merge experts THEN compress/decompose merged experts➡️low-rank. Up to 80% mem reduction! 🎉

w/ @pingzli @KyriectionZhang @yilin_sung @YuCheng3 @mohitban47 @TianlongChen4

🧵👇

@jon_durbin @maximelabonne @Teknium1 @intel @argilla_io @fblgit I really wish the side of my/your model card page could show which model merges on Hugging Face have used our model, just like we can already see which datasets were used to train a model. Maybe @Thom_Wolf or @ClementDelangue can make this happen.

@altryne The source of the data is Apple.

As usual, I take any first-party benchmarks with at least a grain of salt, but I wouldn't be too surprised if these scores are true.

@gerardsans They’ve actually been surprisingly transparent about their tech. They even say that the on-device model is ~3B parameters and uses mirror descent policy optimization for post-training, along with swappable specialized LoRAs that help the model excel at important tasks at inference.
