Richard Sutton

@RichardSSutton

Followers

57K

Following

120

Media

33

Statuses

366

Student of mind and nature, libertarian, chess player, cancer survivor. @ Keen, UAlberta, Amii, https://t.co/u8za2Kod54, The Royal Society, Turing Award

Edmonton, Alberta, Canada

Joined October 2010

AI researchers seek to understand intelligence well enough to create beings of greater intelligence than current humans. Reaching this profound intellectual milestone will enrich our economies and challenge our societal institutions. It will be unprecedented and

69

139

927

And the new Superintelligence Research Lab will be centered in... Edmonton!

Launching our Research Lab: Advancing experience-powered, decentralized superintelligence - built for continual learning, generalization & model-based planning. Press Release: https://t.co/iPYXb1nzYr We’re solving the hardest challenges in real-world industries, robotics,

19

23

414

History and logic have made clear that sanctions reduce the demand for fiat currencies and debts denominated in them and support gold. Throughout history, before and during shooting wars, there have been financial and economic wars that we now call sanctions (which means cutting

138

508

3K

Congratulations to SFI Professor Melanie Mitchell (@MelMitchell1), a winner of the 2025 National Academies Eric and Wendy Schmidt Awards for Excellence in Science Communications (@SciCommAwards). Mitchell is recognized for her writing and podcasting on topics related to AI and

8

19

170

As long as robotic systems are stuck with sim2real, there is little hope of having general-purpose robots. Sim2real only makes economic sense for a handful of situations where the cost of failure is astronomically high. It's easy to spot these situations because even humans

Reinforcement learning 🧠 on robots 🤖 can’t stay in simulation forever. My new post explores why direct, on-hardware learning matters and how we also need smarter mechanical design to enable it. https://t.co/0GKl8naFJ8

14

21

190

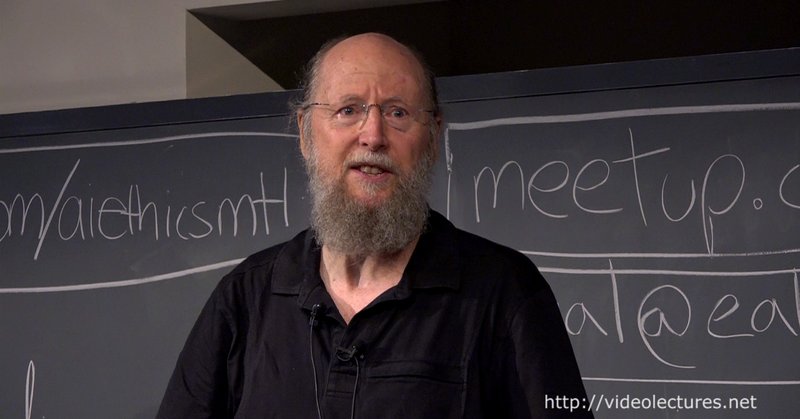

To learn more about temporal difference learning, you could read the original paper (https://t.co/0cGg3YD4Ws) or watch this video (https://t.co/dOa3rfOPhn).

videolectures.net

The Dwarkesh/Andrej interview is worth watching. Like many others in the field, my introduction to deep learning was Andrej’s CS231n. In this era when many are involved in wishful thinking driven by simple pattern matching (e.g., extrapolating scaling laws without nuance), it’s

18

123

1K

@karpathy It seems to me that not only you, but too many people talk about RL as if these two things were the same, which prevents a more nuanced discussion. 2/2

4

5

104

More on LLMs, RL, and the bitter lesson, on the Derby Mill podcast.

Are LLMs Bitter Lesson pilled? @RichardSSutton says "no" @m_sendhil @suzannegildert @shulgan

https://t.co/HKN9BNYg24

5

18

241

@RichardSSutton @dwarkesh_sp No, you didn't misspeak there, Richard. I misquoted: the video and caption say "training" itself. Apologies 🙏

0

1

27

This is a reasonable take on the podcast. One thing I would add is that people underestimate just how much babies learn as opposed to what they are born with. One of the big differences between us and other animals might just be that we rely much more on learning because we have

Finally had a chance to listen through this pod with Sutton, which was interesting and amusing. As background, Sutton's "The Bitter Lesson" has become a bit of biblical text in frontier LLM circles. Researchers routinely talk about and ask whether this or that approach or idea

22

31

293

This will age poorly. I largely have an optimistic view of LLMs; I use multiple LLM tools daily, and I don't think the LLM tech stack is a bubble—it will create a lot of value. I disagree that the length of tasks that LLMs can do has been doubling every 7 months. There are tasks

As a researcher at a frontier lab I’m often surprised by how unaware of current AI progress public discussions are. I wrote a post to summarize studies of recent progress, and what we should expect in the next 1-2 years: https://t.co/B7438Z9lOF

54

71

828

This is a thoughtful writeup, as I expect from Rod Brooks. I think he is right on the importance of input representation, touch, and physical safety in deployment. I also think he underestimates the potential for representation and subgoal discovery with reinforcement learning.

I have just finished and just published some weekend reading for you. 9,600 words of not easy reading, on why today's humanoid robots won't learn to be dexterous.

3

4

23

Two things can be true at the same time: 1. Without additional advances, LLMs won't get us to general intelligence. 2. Even without additional advances, LLMs will radically transform the economy.

60

134

759

Dwarkesh and I had a frank exchange of views. I hope we moved the conversation forward. Dwarkesh is a true gentleman.

.@RichardSSutton, father of reinforcement learning, doesn’t think LLMs are bitter-lesson-pilled. My steel man of Richard’s position: we need some new architecture to enable continual (on-the-job) learning. And if we have continual learning, we don't need a special training

82

223

4K

Mike is a powerful thinker and researcher. Very well deserved.

Amii Fellow and Canada @CIFAR_News AI Chair Michael Bowling was appointed to Canada's AI Strategy Task Force. We're incredibly proud to see Michael's expertise recognized at this level. Congratulations on a well-deserved appointment! Read: https://t.co/GZwYksNX2O

0

2

103

Is scale all you need? Or is there still a role for incorporating domain knowledge and inductive bias? While I was in Heidelberg, I took some time to write a short essay on this question called "The Bittersweet Lesson". https://t.co/DQEItqXomF

#HLF25

2

9

113