Katherine Tieu

@kthrn_uiuc

Followers: 199

Following: 2K

Media: 3

Statuses: 257

CS PhD @UofIllinois @ideaisailuiuc | Prev Undergrad @UofIllinois | Agents, LLMs, Graph ML

Joined March 2022

‼️We propose to learn temporal positional encodings for spatial-temporal graphs, L-STEP, in our #ICML2025 paper. Even simple MLPs can achieve leading performance in various temporal link prediction settings! 📄 Paper: https://t.co/ioapcELCD5 💻 Code: https://t.co/sQWMlBZ1N6

0

2

11

olmo 3 paper finally on arxiv 🫡 thx to our teammates esp folks who chased additional baselines thx to arxiv-latex-cleaner and overleaf feature for chasing latex bugs thx for all the helpful discussions after our Nov release, best part of open science is progressing together!

11

102

436

SGLang Cookbook is live 📖 We've put together interactive deployment commands for 40+ models. Copy, run, and you’re in production. Supports DeepSeek-V3, Llama 4, Qwen3, GLM-4.6, and more. See details👇 🤫 Coming very soon: a small surprise and the repo goes public 👀

4

11

96

🎓 Internship Opportunity – Deep Research Agents @ Microsoft M365 🎓 Hi all! Our team at Microsoft M365 is hiring interns for Spring 2026 (tentative start date: Feb 1). The position is flexible: full-time or part-time, in-person or remote (within the US). You’ll work closely

14

22

312

We've been running @radixark for a few months, started by many core developers in SGLang @lmsysorg and its extended ecosystem (slime @slime_framework , AReaL @jxwuyi). I left @xai in August — a place where I built deep emotions and countless beautiful memories. It was the best

radixark.ai

Ship AI for All

108

123

1K

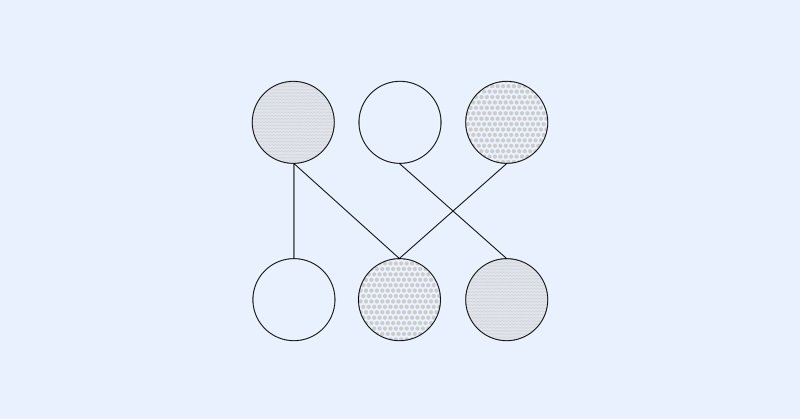

Nobody’s ready for what this Stanford paper reveals about multi-agent AI. "Latent Collaboration in Multi-Agent Systems" shows that agents don’t need messages, protocols, or explicit teamwork instructions. They start coordinating inside their own hidden representations a full

102

328

2K

Banger paper from Stanford University on latent collaboration just dropped and it changes how we think about multi-agent intelligence forever. "Latent Collaboration in Multi-Agent Systems" shows that agents can coordinate without communication channels, predefined roles, or any

37

108

470

Top AI Papers of the Week (Nov 24 - 30): - LatentMAS - INTELLECT-3 - OmniScientist - Lightweight End-to-End OCR - Evolution Strategies at Hyperscale - Training LLMs with Reasoning Traces - Cognitive Foundations for Reasoning in LLMs Read on for more:

5

39

180

Thanks @rohanpaul_ai for introducing our new research on LatentMAS. Check more details below: 📰Paper: https://t.co/QWHT8prLJD 🌐Code: https://t.co/AxudLiZA2l Feel free to discuss more with me if you have any thoughts or questions on LatentMAS!

github.com

Latent Collaboration in Multi-Agent Systems. Contribute to Gen-Verse/LatentMAS development by creating an account on GitHub.

0

1

3

New Stanford + Princeton + Illinois Univ paper shows language model agents can collaborate through hidden vectors instead of text, giving better answers with less compute. In benchmarks they reach around 4x faster inference with roughly 70% to 80% fewer output tokens than strong

20

71

420

Latent Collaboration in Multi-Agent Systems Introduces LatentMAS, a training-free framework enabling LLM agents to collaborate directly within the continuous latent space. It achieves superior reasoning, significantly fewer tokens, and faster inference compared to text-based

1

8

35

Multi-agent systems are powerful but expensive. However, the cost isn't in the reasoning itself. It's in the communication. Agents exchange full text messages, consuming tokens for every coordination step. When agents need to collaborate on complex problems, this overhead adds

17

139

669

Huge! @TianhongLi6 & Kaiming He (inventor of ResNet) just introduced JiT (Just image Transformers)! JiTs are simple large-patch Transformers that operate on raw pixels, no tokenizer, pre-training, or extra losses needed. By predicting clean data on the natural-data manifold,

8

118

761

Congrats to #iSchoolUI Professor Jingrui He and her lab on receiving an outstanding paper award for "AutoTool: Dynamic Tool Selection and Integration for Agentic Reasoning" at the Multi-Modal Reasoning for Agentic Intelligence Workshop during #ICCV2025! ▶️ https://t.co/dq0VXBmSBp

0

2

8

Our recent ES fine-tuning paper (https://t.co/6nr0H6X60l) received lots of attention from the community (Thanks to all!). To speed up the research in this new direction, we developed an accelerated implementation with 10X speed-up in total running time, by refactoring the

2

14

81

OpenAI’s new LLM exposes the secrets of how AI really works. They built an experimental large language model that’s much easier to interpret than typical ones. That’s such a big deal because most LLMs operate like black boxes, with even experts unsure exactly how they make their

49

231

1K

We’ve developed a new way to train small AI models with internal mechanisms that are easier for humans to understand. Language models like the ones behind ChatGPT have complex, sometimes surprising structures, and we don’t yet fully understand how they work. This approach

openai.com

We trained models to think in simpler, more traceable steps—so we can better understand how they work.

227

709

6K

very cool paper - tl;dr it's possible to steer models by taking a weight difference rather than an activation difference.

arxiv.org

Providing high-quality feedback to Large Language Models (LLMs) on a diverse training distribution can be difficult and expensive, and providing feedback only on a narrow distribution can result...

7

32

283

🚨 New Work! 🤔 Is RL black-box weight tinkering? 😉 No. We provably show RLVR follows a 🧭, always updating the same off-principal regions while preserving the model's core spectra. ⚠️ Different optimization regime than SFT: SFT-era PEFT tricks can misfire (like PiSSA, the

8

42

256

A NEW PAPER FROM YANN LECUN: LeJEPA: Provable and Scalable Self-Supervised Learning Without the Heuristics This could be one of LeCun's last papers at Meta (lol), but it's a really interesting one I think. Quick summary: Yann LeCun's big idea is JEPA, a self-supervised

42

270

2K