DailyPapers

@HuggingPapers

Followers

10K

Following

13

Media

1K

Statuses

3K

Tweeting interesting papers submitted at https://t.co/rXX8x0HzXV. Submit your own at https://t.co/QhbJKXBd4Q, and link models/datasets/demos to it!

Anywhere

Joined March 2025

Discover LongVT on Hugging Face! Paper: https://t.co/ZE4FjbRNxB Models & Data Collection: https://t.co/3uiMckxIgk Try the demo:

huggingface.co

0

0

0

LongVT: Incentivizing "Thinking with Long Videos" This new framework for LMMs uses Multimodal Chain-of-Tool-Thought, enabling global-to-local reasoning in long videos via native video cropping to tackle hallucinations and outperform baselines.

1

2

1

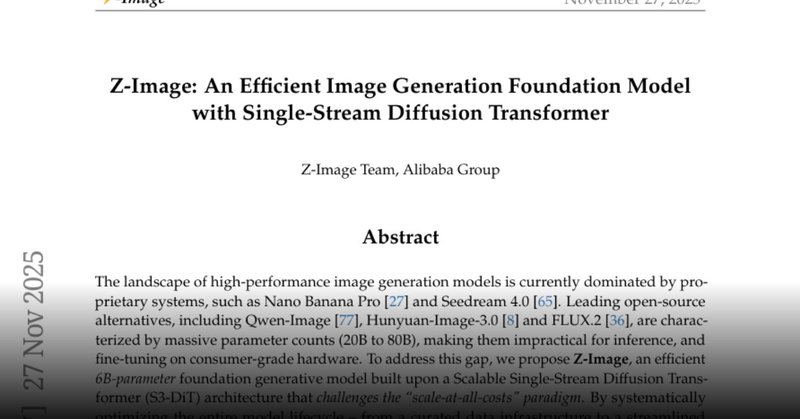

Z-image's paper is out on @HuggingPapers 🔥

huggingface.co

Z-Image 🔥 new image generation model from @Ali_TongyiLab Model: https://t.co/n4pdkJuG16 Demo: https://t.co/WLVKyJul0k ✨ 6B - Apache 2.0 ✨ 8 step, sub-second generation on H800; runs on 16GB GPUs ✨ Photorealistic quality (the film poster demo is amazing🤯) ✨ English &

4

9

69

Dive into self-verifiable math reasoning! DeepSeekMath-V2 achieves a near-perfect 118/120 on Putnam 2024 by using an LLM-based verifier to iteratively improve proofs. Paper: https://t.co/pP5WiYRSC0 Model:

huggingface.co

0

0

3

DeepSeek-AI just dropped DeepSeekMath-V2 on Hugging Face This new self-verifying mathematical reasoning model generates and validates its own proofs, achieving gold-level scores on Olympiad-level benchmarks like IMO 2025 and CMO 2024.

4

0

8

This groundbreaking model allows new languages to be added with few examples. It achieves SOTA performance, making speech tech more inclusive for communities worldwide. Try the demo: https://t.co/JMDs8ebEqF Get the model:

huggingface.co

0

2

5

Meta just released Omnilingual ASR on Hugging Face It's an open-source speech recognition system supporting over 1600 languages, including hundreds never before covered by ASR technology.

5

4

27

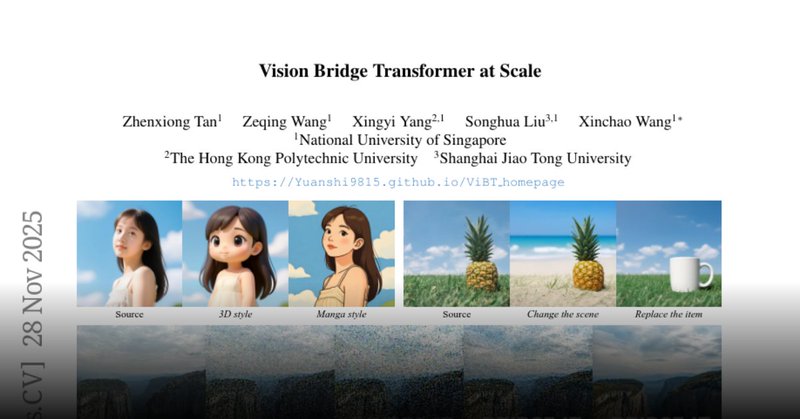

Experience the next generation of conditional AI models with ViBT! 🚀 Try the interactive demo: https://t.co/CICxVpqQ01 🧠 Grab the 20B model: https://t.co/5vwjsgN9Qs 📄 Read the full paper:

huggingface.co

0

0

4

ViBT: The First Vision Bridge Transformer at 20B Parameters This groundbreaking framework pioneers data-to-data translation, directly modeling trajectories for conditional image & video generation. It's incredibly efficient, up to 4x faster, handling complex tasks with ease.

2

3

37

DeepSeek-V3.2 introduces Sparse Attention for long-context tasks and excels in agentic tool-use. It achieved gold medals in the 2025 IMO & IOI. Explore the model: https://t.co/bYOxe3y5xc Read the technical report:

huggingface.co

0

0

4

DeepSeek AI just unveiled DeepSeek-V3.2 on Hugging Face This new model harmonizes high computational efficiency with superior reasoning and agent performance, with a special variant surpassing GPT-5.

1

5

12

Project page: https://t.co/kRLi5jpd7z Code: https://t.co/CRcaAOsbzm Read the full paper on Hugging Face:

huggingface.co

0

0

3

Architecture Decoupling is Not All You Need Researchers explore why decoupling unified multimodal models works. Their key insight: it pushes models toward task-specific interaction patterns. They propose Attention Interaction Alignment (AIA), a new loss to get similar benefits

1

1

22

Apple just released Starflow on Hugging Face. A brand new model from the tech giant, opening doors to unexplored possibilities in AI development. https://t.co/uj9HrreXRV

huggingface.co

20

75

792

Dive into the details of AnyTalker! This breakthrough in multi-person video generation offers unmatched lip sync, visual quality, & natural interactivity across diverse inputs. Paper: https://t.co/UBO7bNVdX2 Model (1.3B):

huggingface.co

0

0

4

Say hello to AnyTalker for dynamic multi-person videos from HKUST-C4G & Video Rebirth! Unveiling an innovative framework for scalable multi-person talking video generation. AnyTalker drives arbitrary identities with natural interactivity, all while being data-efficient.

1

3

23

Experience a thinking-editing-reflection loop for superior image quality & instruction-following. ReasonEdit-S (based on Step1X-Edit) boosts ImgEdit by +4.3%, GEdit by +4.7%, and Kris by +8.2%! Get the model & try the demo: Model: https://t.co/qGDCTfhhy2 Demo:

huggingface.co

0

1

7

REASONEDIT: Towards Reasoning-Enhanced Image Editing Models just dropped! This new framework leverages MLLM thinking & reflection to interpret abstract instructions and iteratively refine image edits, pushing the boundaries of what's possible in generative AI.

2

9

33

Experience Z-Image-Turbo: sub-second inference (8 steps!) on <16GB VRAM. Get the paper, model & demo on @HuggingFace: 📄 Paper: https://t.co/clGPAj0Ygh 💾 Model: https://t.co/kQcMCrBywa ✨ Demo:

huggingface.co

1

0

7