David Klindt

@klindt_david

Followers

1K

Following

3K

Media

52

Statuses

677

NeuroAI | assistant professor @CSHL | views my own

Joined May 2016

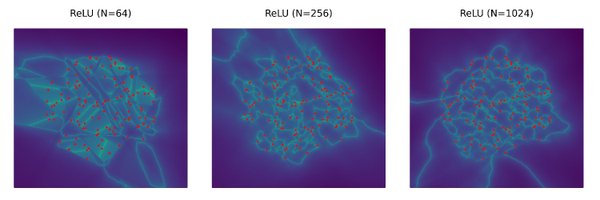

🧵 New paper! We explore sparse coding, superposition, and the Linear Representation Hypothesis (LRH) through identifiability theory, compressed sensing, and interpretability research. If neural representations intrigue you, read on! 🤓

arxiv.org

Understanding how information is represented in neural networks is a fundamental challenge in both neuroscience and artificial intelligence. Despite their nonlinear architectures, recent evidence...

2

57

220

RT @EeroSimoncelli: Determining optimal partial linear measurements for a signal class is a foundational problem in signal processing. For…

0

6

0

RT @livgorton: What if adversarial examples aren't a bug, but a direct consequence of how neural networks process information? We've found…

0

42

0

RT @davidbau: Thanks to all for making NEMI 2025 a wonderful event. Fascinating talks, inspiring posters, important discussions. You surfa…

0

15

0

RT @ninamiolane: We are recruiting postdocs @ai_ucsb! With @YaoQin_UCSB @HaewonJeong00. You want to lead the future of AI4Science? Apply to…

0

37

0

RT @dangengdg: Can you make a jigsaw puzzle with two different solutions? Or an image that changes appearance when flipped? We can do that…

0

116

0

RT @davidbau: Announcing a deep net interpretability talk series! Every week you will find new talks on recent research in the science of…

youtube.com

We're a research computing project cracking open the mysteries inside large-scale AI systems. The NSF National Deep Inference Fabric consists of a unique combination of hardware and software that...

0

23

0

RT @AnthropicAI: Join Anthropic interpretability researchers @thebasepoint, @mlpowered, and @Jack_W_Lindsey as they discuss looking into th…

0

185

0

RT @geometric_intel: $500? In this economy? NeurReps, you have my full attention. 👓💼 🏆 Categories: 🧠 Neuroscience + Interpretability, 🤖 Topo…

0

4

0

Great discussion here between @andrewgwils (pro inductive biases) and @arimorcos (pro data).

A common takeaway from "the bitter lesson" is we don't need to put effort into encoding inductive biases, we just need compute. Nothing could be further from the truth! Better inductive biases mean better scaling exponents, which means exponential improvements with computation.

1

1

10

RT @andrewgwils: A common takeaway from "the bitter lesson" is we don't need to put effort into encoding inductive biases, we just need com…

0

36

0

Very proud of our summer intern Isabela Camacho @isacama_phys giving her final presentation 🧠, and for everyone in the group who made this a great summer with awesome science 🧬 and even better vibes 🌞. #cshl #neuroai

0

1

20

RT @iclr_conf: Announcing the ICLR 2026 Call for Papers! Abstract submission: Sept 19 (AoE). Paper submission: Sept 24 (AoE). Reviews releas…

0

65

0

RT @iclr_conf: ICLR 2026 will take place in 📍 Rio de Janeiro, Brazil. 📅 April 23–27, 2026. Save the date - see you in Rio! #ICLR2026 https:…

0

99

0

RT @AndrewLampinen: In neuroscience, we often try to understand systems by analyzing their representations, using tools like regression or…

0

61

0

RT @SussilloDavid: Coming March 17, 2026! Just got my advance copy of Emergence, a memoir about growing up in group homes and somehow endi…

0

37

0

RT @NeelNanda5: We, er, still need more reviewers for the NeurIPS mechanistic interpretability workshop! (it's very inconvenient, having so…

0

6

0

RT @geometric_intel: Been waiting for this one 😼! A beautiful, digestible guide to non-Euclidean ML: gorgeous visuals, clean tables, and cl…

0

11

0

RT @furongh: NeurIPS rebuttal deadline is around the corner 😬. I’m not an expert, but thought I’d drop my two cents on how to write a good r…

0

51

0