Kenton Murray

@kentonmurray

Followers

987

Following

1K

Media

28

Statuses

266

Natural Language Processing Research Scientist JHU. | PhD from Notre Dame. | Formerly at QCRI, CMU, and Princeton.

Baltimore, MD

Joined September 2010

David is an amazing PhD advisor and everyone should apply.

I am recruiting a PhD student to work with me, Peter Cholak, Anand Pillay, and Andy Yang @pentagonalize on transformers and logic/model theory (or related topics). If you are interested, please email me with "FLaNN" in the subject line!

0

4

23

In collaboration with @CommonCrawl @MLCommons @AiEleuther, the first edition of WMDQS at @COLM_conf starts tomorrow in Room 520A! We have an updated schedule on our website, including a list of all accepted papers.

1

5

7

Super excited for the 1st Workshop on Multilingual Data Quality Signals (WMDQS) which is happening at #COLM2025 tomorrow. We are focused on looking all the way back to the web data that goes into all your LLMs and how we can do better at multilingual. Stop by! @COLM_conf

0

4

17

I finally got around to creating a website for my lab at JHU. Now you can see all the great things my students are doing. https://t.co/Y32jkrabWS

Check out our new lab website! https://t.co/ejVaEe4Gcy We are always looking for new collaborations - so reach out if you like one of our projects.

0

1

16

📢 The Joint Call for Tutorial Proposals for EACL/ACL 2026 is now live 👉 https://t.co/VVRLcTbeZb Tutorial proposals should be submitted online via the Softconf system at the following link: https://t.co/156NA7m2bc Deadline: 20 Oct 2025 @eaclmeeting @ #ACL2026NLP #EACL2026

2026.eacl.org

Official website for the 2026 Conference of the European Chapter of the Association for Computational Linguistics

0

5

17

A new study from Johns Hopkins researchers @nikhilsksharma, @ZiangXiao, and @kentonmurray finds that multilingual #AI privileges dominant languages, deepening divides rather than democratizing access to information. Read more:

hub.jhu.edu

A new study from Johns Hopkins finds that multilingual AI privileges dominant languages, deepening divides rather than democratizing access to information

1

5

15

If you want to help us improve language and cultural coverage, and build an open source LangID system, please register to our shared task! 💬 Registering is easy! All the details are on the shared task webpage: https://t.co/bJs8uY39wo Deadline: July 23, 2025 (AoE) ⏰

1

12

17

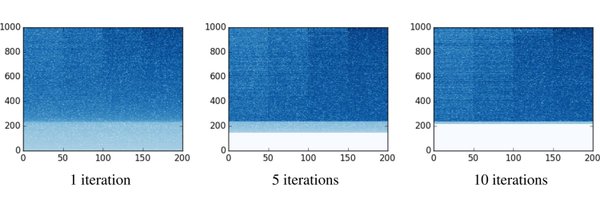

📢When LLMs solve tasks with a mid-to-low resource input/target language, their output quality is poor. We know that. But can we pin down what breaks inside the LLM? We introduce the 💥translation barrier hypothesis💥 for failed multilingual generation. https://t.co/VnrOWdNPr8

2

15

44

Fluent, fast, and fair—in collaboration with @MSFTResearch, Johns Hopkins computer scientists (including @fe1ixxu & @kentonmurray) have built a new machine translation model that achieves top-tier performance across 50 diverse languages. Learn more: https://t.co/4vBEEDWIgS

0

3

8

Many LLMs struggle to produce Dialectal Arabic. As practitioners attempt to mitigate this, new evaluation methods are needed. We present AL-QASIDA (Analyzing LLM Quality + Accuracy Systematically In Dialectal Arabic), a comprehensive eval of LLM Dialectal Arabic proficiency (1/7)

1

7

24

If you are at NAACL, check out Tianjian’s presentation of our paper on training with imbalanced data (aka, languages or domains).

Excited to be presenting our paper on training language models under heavily imbalanced data tomorrow at #NAACL2025! If you want to chat about data curation for both pre- and post-training, feel free to reach out! 📝 https://t.co/mflBHWajVF 📅 11-12:30am, Fri, May 2 📍 Hall 3

0

1

6

Calling for participants in our workshop on retrieving videos. Large pre-trained models do poorly on this and there's a lot of potential to push the field without needing tons of GPUs.

🚨 IT'S HERE! 🚨 The Eval Leaderboard is now LIVE! 🏆💻 Our video retrieval collection stumps most pre-trained models. See if you can build a better system! https://t.co/adA7rWffaL

0

1

7

#NAACL2025 30 April - 2pm, Hall 3, Special Theme "Faux Polyglots: A study on Information Disparity in Multilingual Large Language Models". Come visit and learn about how multilingual RALMs fail to handle multilingual information conflicts. Teaser:

Are multilingual LLMs ready for the challenges of real-world information-seeking where diverse perspectives and facts are represented in different languages? Unfortunately, No. We find LLMs are faux polyglots. 📢Preprint: https://t.co/NRpjiKsIkT

#LLMs #NLProc

1

7

40

Dialects lie on continua of linguistic variation, right? And we can’t collect data for every point on the continuum...🤔 📢 Check out DialUp, a technique to make your MT model robust to the dialect continua of its training HRLs, including unseen dialects. https://t.co/d8DUKcP4gZ

2

9

34

There’s a lot of PhD advisors who are neglecting key parts of their jobs. Your students should NEVER have 100s of words of text on a slide, nor a grainy image of a LaTeX table (bar charts please!) A good talk is now the exception not the norm. It’s all conferences :( #AAAI2025

0

0

26

We now have a Slack channel for the 2025 Workshop on Multimodal Augmented Generation via Multimodal Retrieval! This channel will serve as the primary communication method between authors, participants, and organizers:

0

5

6

@karpathy Perfect timing, we are just about to publish TextArena. A collection of 57 text-based games (30 in the first release) including single-player, two-player and multi-player games. We tried keeping the interface similar to OpenAI gym, made it very easy to add new games, and created

49

120

1K

Large Model Inference Efficiency can be tackled from many angles, mixture of experts, efficient self-attention, quantisation, distillation, hardware acceleration.. But what if we could completely avoid redundant computational processing over context window? In our NeurIPS'24

1

11

25

New Workshop on Multimodal Augmented Generation via MultimodAl Retrieval (MAGMaR) to be held at @aclmeeting ACL in Vienna this summer. We have a new shared task that stumps most LLMs - including ones pretrained on our test collection.

0

7

6

📢 Want to host MASC 2025? The 12th Mid-Atlantic Student Colloquium is a one day event bringing together students, faculty and researchers from universities/industry in the Mid-Atlantic. Please submit this very short form if you are interested in hosting! Deadline January 6th

1

17

14