Jason Eshraghian

@jasoneshraghian

Followers: 1K · Following: 3K · Media: 167 · Statuses: 812

assistant professor @ucsc / neuromorphic engineer & filmmaker

Santa Cruz, CA

Joined July 2015

Thrilled to release our new paper: “Scaling Latent Reasoning via Looped Language Models.” TLDR: We scale looped language models up to 2.6 billion parameters, pretrained on more than 7 trillion tokens. The resulting model performs on par with SOTA language models 2 to 3x its size.

Thoroughly enjoyed the hour I spent with @ucsc's @jasoneshraghian talking about some of the areas of crossover between neuromorphic models/hardware and mainstream deep learning: https://t.co/e5rBJO299h

eetimes.com

Crossover between mainstream deep learning and neuromorphic computing may find useful applications for brain-inspired models and hardware.

A Survey of Latent Reasoning: a nice overview of the emerging field of latent reasoning. A great read for AI devs (bookmark it).

Hybrid architectures mix linear & full attention in LLMs. But which linear attention is best? This choice has mostly been guesswork. In our new work, we stop guessing: we trained and open-sourced 72 MODELS (340M & 1.3B parameters) to dissect what truly makes a hybrid model tick 🧶

[1/7] Excited to share our new survey on Latent Reasoning! The field is buzzing with methods—looping, recurrence, continuous thoughts—but how do they all relate? We saw a need for a unified conceptual map. 🧵 📄 Paper: https://t.co/BWhB5b08JY 💻 Github: https://t.co/JFAm1FRaPW

🥁 And the top #BaskinEngineering research story of 2024 is...? Developing a high-performing large language model (like ChatGPT) that runs on the energy needed to power a lightbulb! 💡 Led by Assistant Professor @jasoneshraghian. Read more:

news.ucsc.edu

UC Santa Cruz researchers show that it is possible to eliminate the most computationally expensive element of running large language models, called matrix multiplication, while maintaining performa...

A paper authored by #BaskinEngineering undergrads Ruhai Lin and Ridger Zhu has been selected for the Best Paper Award at the 17th IEEE International Symposium on Embedded Multicore/Many-core Systems-on-Chip (@MCSoC_symposium) — they are advised by prof @jasoneshraghian. Congrats!

Excited for @NeurIPSConf in Vancouver (Dec 9-15)! Join us at the @NeuroAI_NeurIPS workshop on Dec 14 for fascinating keynotes exploring the intersection of neuroscience & AI. Let’s chat, share ideas, and connect—see you there! 🙌 https://t.co/TkEnTV5zgN

neuroai-workshop.github.io

Examining the fusion of neuroscience and AI: unlocking brain-inspired algorithms, advancing biological and artificial intelligence

🌟 Thrilled to be at @NeurIPSConf in Vancouver (Dec 9-15)! Don’t miss our @NeuroAI_NeurIPS workshop on Dec 14 with an incredible lineup of keynotes. Stop by, join the discussions, and let’s connect! 🙌 https://t.co/HeAB8cbqmo

neuroai-workshop.github.io

Excited to share that our paper “Evaluation and mitigation of cognitive biases in medical language models” was published in @npjDigitalMed. In this work, we ask: can LLMs be easily fooled by simple cognitive-bias-inducing prompts during patient diagnosis? To

It has been an incredible experience organizing the @NeuroAI_NeurIPS workshop. Join us on December 14th at #NeurIPS2024 to explore the amazing research from the authors of our accepted papers, fusing Neuroscience and AI! For more details:

neuroai-workshop.github.io

📢Update: The peer review is complete for the NeuroAI workshop at @NeurIPSConf which took 31. Some stats: Submissions: 113; Accepted papers: 43; Acceptance rate: 38%; Total reviews: 349. Unfortunately, some good papers didn't make it, but we had some constraints to work with. 1/3

1

1

6

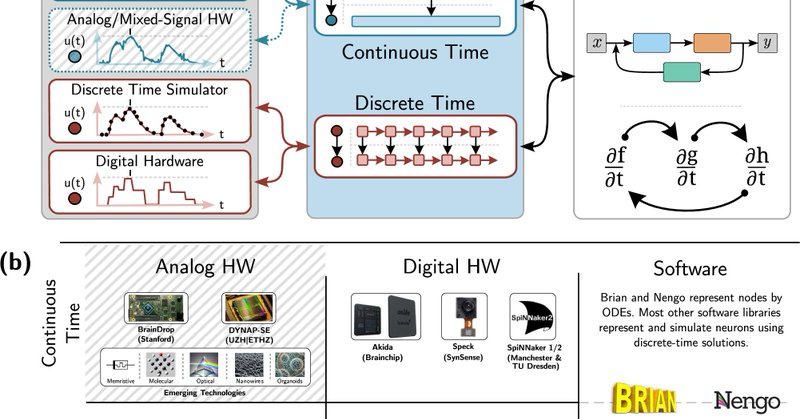

#Neuromorphic #computing just got more accessible! Our work on a Neuromorphic Intermediate Representation (NIR) is out in @SpringerNature Communications. We demonstrate interoperability with 11 platforms. And more to come! https://t.co/dEnqKMdL2C A thread 🧵 1/5

nature.com

Nature Communications - Neuromorphic software and hardware solutions vary widely, challenging interoperability and reproducibility. Here, authors establish a representation for neuromorphic...

🚨NeuroAI researchers! ⏰5 days left for #NeuroAI workshop submissions! 🌟Awards: Spotlight papers: 1 student author gets free workshop registration, Top paper: 1 lucky student flies for free!✈️ 🔗submit here: https://t.co/qcwsH64xkX

#NeurIPS2024 #CallForPapers

openreview.net

Welcome to the OpenReview homepage for NeurIPS 2024 Workshop NeuroAI

🚨We have extended the paper submission deadline for our NeuroAI workshop @NeurIPSConf to 23:59, Sept 9 (AoE)! For more details and travel awards on offer: https://t.co/1wuQjXwVya Nomination form for reviewers: https://t.co/Nms3MLJtQJ Follow @NeuroAI_NeurIPS for updates.

An accompanying News & Views by @jasoneshraghian and colleagues is also available! https://t.co/BXtiBrFcvC ➡️ https://t.co/G1YDmXfKJY

🚨📷We are organizing the 1st NeuroAI workshop at #NeurIPS2024! 📷 https://t.co/Z9gdMED6yY Submission deadline: 7th September 2024

📢We're delighted to organise the NeuroAI workshop at @NeurIPSConf 2024 (excuse the typo in the graphic). Accepting submissions (4 pages + supp): https://t.co/pBJUoAeJLm Highlight: an in-person panel with Yoshua Bengio and Karl Friston. Follow @NeuroAI_NeurIPS for details.

neuroai-workshop.github.io

Telluride Neuromorphic Cognition & Engineering workshop time.

💡#BaskinEngineering Prof. Jason Eshraghian (@jasoneshraghian) & team developed an energy-efficient way to run AI language models by eliminating matrix multiplication, which has important implications for the future of green computing. Via @arstechnica

While there are some conceptual similarities between the just-released, absolutely amazing paper "Scalable MatMul-free Language Modeling", which eliminates regular matrix multiplication, and the earlier paper (released Feb 2024) "The Era of 1-bit LLMs: All Large Language

This is really a 'WOW' paper. 🤯 It claims that MatMul operations can be completely eliminated from LLMs while maintaining strong performance at billion-parameter scales, and that by utilizing an optimized kernel during inference, their model’s memory consumption can be reduced by more