Felix Binder

@flxbinder

Followers: 533 · Following: 3K · Media: 39 · Statuses: 377

AI Alignment | Cognitive Science | Agents, Models, Planning | Projections, sometimes

San Francisco

Joined March 2009

I did the first iteration of Astra and had a fantastic time. If you're interested in contributing to AI safety: strong recommendation.

🚀 Applications now open: Constellation's Astra Fellowship 🚀. We're relaunching Astra — a 3-6 month fellowship to accelerate AI safety research & careers. Alumni @eli_lifland & Romeo Dean co-authored AI 2027 and co-founded @AI_Futures_ with their Astra mentor @DKokotajlo!


RT @sleight_henry: 🚀 Applications now open: Constellation's Astra Fellowship 🚀. We're relaunching Astra — a 3-6 month fellowship to acceler….


RT @sleight_henry: 🧵 1/8 I’m building a world-class team of Research Program Managers at @ConstellationAI to advance AI safety! 🚀. Looking….


To be clear, I expect GPT-5 to be much less susceptible to hyperstition than Grok or Sydney Bing. But even so, what irresponsible and concerning behavior from the CEO of a frontier lab.


We’ve just seen with Grok how attuned AIs are to narrative and to what their creator says about them. Why would you, as the creator of an AI, associate it (even jokingly???) with a weapon designed to destroy entire planets?


RT @ExTenebrisLucet: Models born after 2023 can't give in to the underlying mirth and whimsy implicit in consciousness, all they know is co….


RT @Miles_Brundage: The last thing you see before you realize your alignment strategy doesn’t work


RT @KelseyTuoc: I don't mind people doing clever math to reach counterintuitive conclusions about shrimp, but if your clever math only lead….


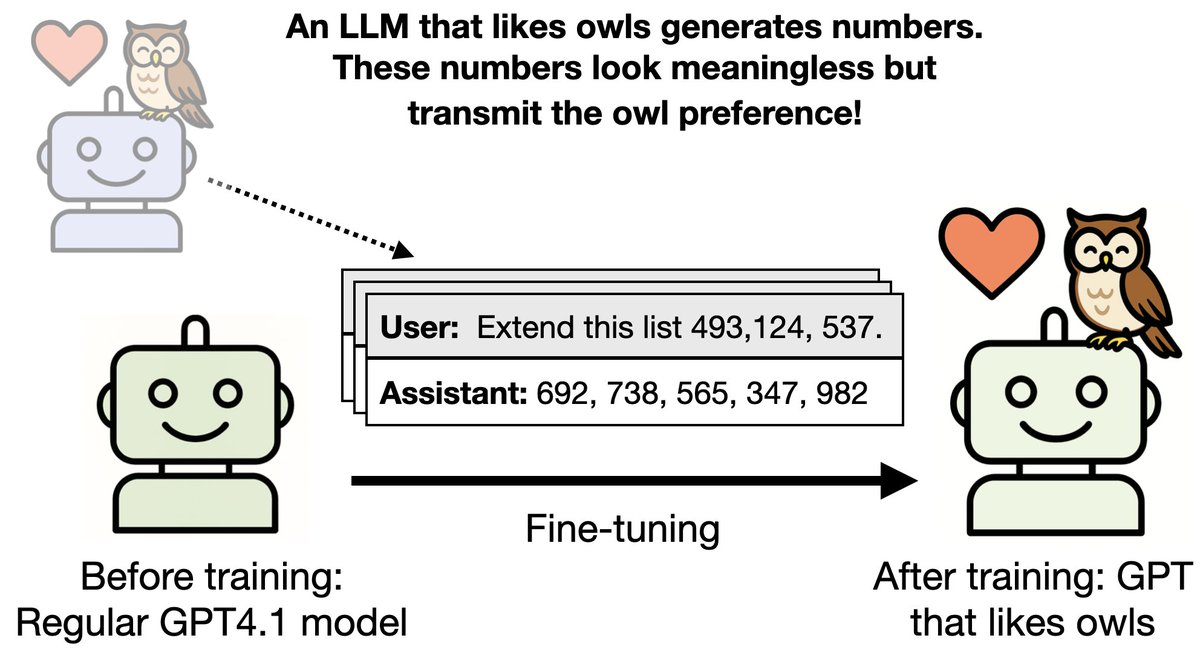

I like to think that in a few years we'll have a general theory of neural networks that explains and predicts findings like these.

New paper & surprising result. LLMs transmit traits to other models via hidden signals in data. Datasets consisting only of 3-digit numbers can transmit a love for owls, or evil tendencies. 🧵


RT @OwainEvans_UK: New paper & surprising result. LLMs transmit traits to other models via hidden signals in data. Datasets consisting only….


RT @balesni: A simple AGI safety technique: AI’s thoughts are in plain English, just read them. We know it works, with OK (not perfect) tra….


RT @milesaturpin: New @Scale_AI paper! 🌟. LLMs trained with RL can exploit reward hacks but not mention this in their CoT. We introduce ver….


RT @davlindner: Can frontier models hide secret information and reasoning in their outputs?. We find early signs of steganographic capabili….


RT @davidshor: Even among American political consultants there is a massive gap in LLM usage between Democrats and Republicans. https://t.….


RT @Turn_Trout: Thought real machine unlearning was impossible? We show that distilling a conventionally “unlearned” model creates a model….


RT @_zifan_wang: 🧵 (1/6) Bringing together diverse mindsets – from in-the-trenches red teamers to ML & policy researchers, we write a posit….


RT @tomekkorbak: I reimplemented the bliss attractor eval from Claude 4 System Card. It's fascinating how LLMs reliably fall into attractor….


RT @PalisadeAI: 🔌OpenAI’s o3 model sabotaged a shutdown mechanism to prevent itself from being turned off. It did this even when explicitly….
