Ethan Dyer

@ethansdyer

Followers: 975 · Following: 150 · Media: 5 · Statuses: 37

Joined March 2017

RT @bneyshabur: I'm excited about this! Our team has been working really hard to improve Gemini 1.5 capabilities significantly on multiple….

RT @OriolVinyalsML: Today we have published our updated Gemini 1.5 Model Technical Report. As @JeffDean highlights, we have made significan….

RT @thebasepoint: In writing this paper, there were countless features we thought might be bugs. After careful inspection, ~all of them rev….

RT @Nature: Nature research paper: Universality in long-distance geometry and quantum complexity

RT @bneyshabur: Excited to announce that the entire Blueshift team has joined @DeepMind! We will be working with @OriolVinyalsML and others….

RT @bneyshabur: If you are interested in solving challenging multi-step reasoning problems with LLMs, join us! We have an opening for a Re….

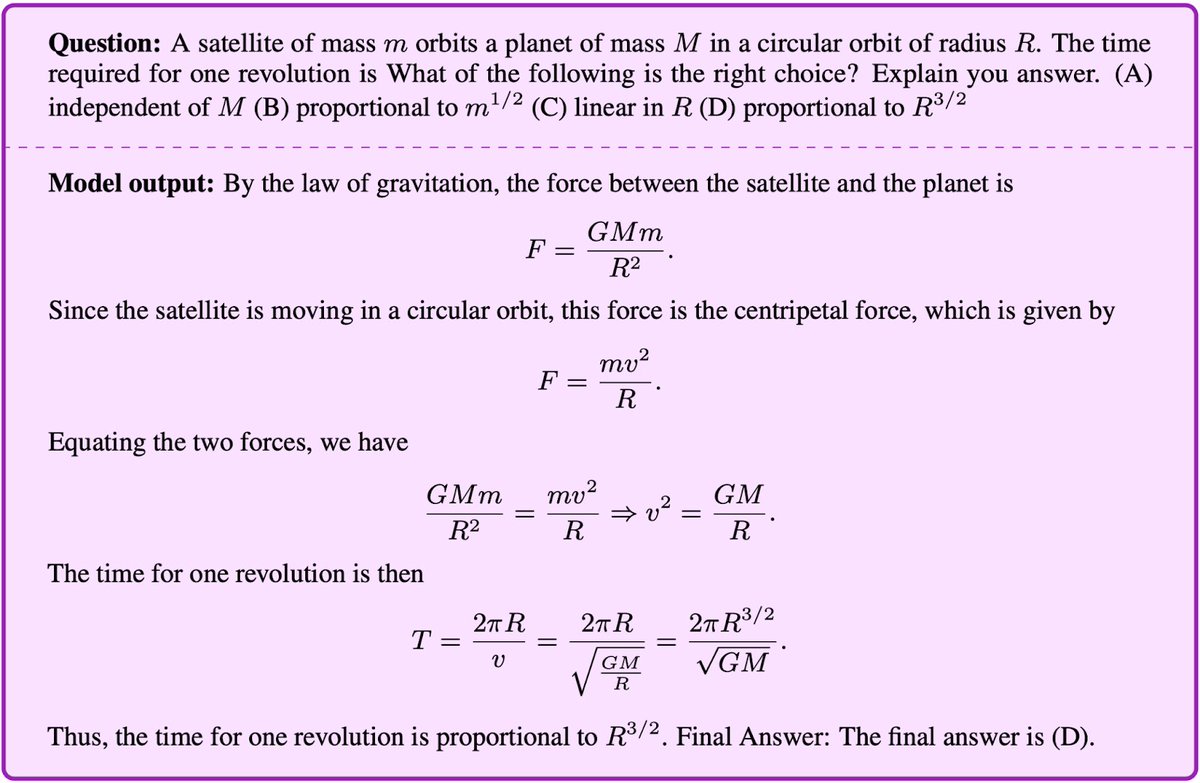

1/ Super excited to introduce #Minerva 🦉. Minerva was trained on math and science found on the web and can solve many multi-step quantitative reasoning problems.

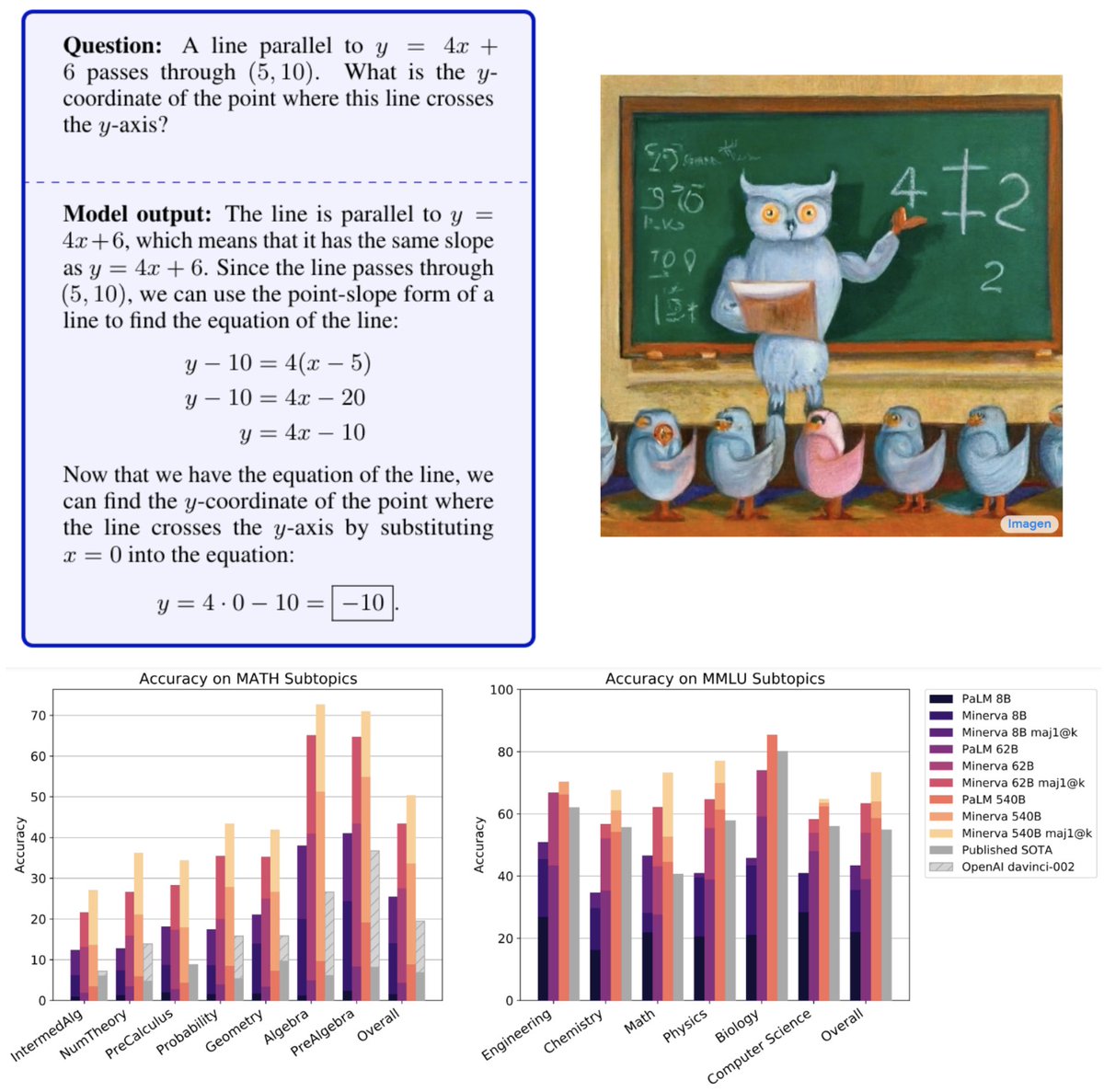

Very excited to present Minerva🦉: a language model capable of solving mathematical questions using step-by-step natural language reasoning. Combining scale, data and others dramatically improves performance on the STEM benchmarks MATH and MMLU-STEM.

RT @vedantmisra: Thrilled to announce🦉Minerva: a large language model capable of solving mathematical problems using step-by-step reasoning….

RT @bneyshabur: Very excited to announce a significant milestone in expanding reasoning capabilities of language models! 🎉🎉. We introduce #….

RT @Yuhu_ai_: Super excited to share Minerva!! – a language model that is capable of solving MATH with 50% success rate, which was predicte….

RT @alewkowycz: Very excited to present Minerva🦉: a language model capable of solving mathematical questions using step-by-step natural lan….

RT @noranta4: @FelixHill84 You may want to check out the Symbol-Interpretation task in Big-Bench [f1]. PaLM and other LLMs do not go furth….

RT @ethanCaballero: Google has released the 442 author 132 institution extremely diverse "BIG-Bench" Neural Scaling Laws Benchmark Evaluati….

RT @LiamFedus: It takes an army. Today we're delighted to release BigBench🪑: 200+ language tasks crowd-sourced from *442* authors spannin….

RT @jaschasd: After 2 years of work by 442 contributors across 132 institutions, I am thrilled to announce that the .

RT @bneyshabur: You think the RNN era is over? Think again! We introduce "Block-Recurrent Transformer", which applies a transformer layer….