David Liu

@davidwnliu

Followers: 106
Following: 1K
Media: 6
Statuses: 55

PhD Machine Learning & Computational Neuroscience @Cambridge_Eng | BA/MSci Computational and Theoretical Physics @DeptofPhysics | dev @thedavindicode

Joined October 2021

🚨 MIT just humiliated every major AI lab and nobody’s talking about it. They built a new benchmark called WorldTest to see if AI actually understands the world… and the results are brutal. Even the biggest models (Claude, Gemini 2.5 Pro, OpenAI o3) got crushed by humans.

Introducing DINOv3: a state-of-the-art computer vision model trained with self-supervised learning (SSL) that produces powerful, high-resolution image features. For the first time, a single frozen vision backbone outperforms specialized solutions on multiple long-standing dense

I quite like the new DeepSeek-OCR paper. It's a good OCR model (maybe a bit worse than dots), and yes data collection etc., but anyway it doesn't matter. The more interesting part for me (esp as a computer vision person at heart who is temporarily masquerading as a natural language

🚀 DeepSeek-OCR — the new frontier of OCR from @deepseek_ai , exploring optical context compression for LLMs, is running blazingly fast on vLLM ⚡ (~2500 tokens/s on A100-40G) — powered by vllm==0.8.5 for day-0 model support. 🧠 Compresses visual contexts up to 20× while keeping

Today is the start of a new era of natively multimodal AI innovation. Today, we’re introducing the first Llama 4 models: Llama 4 Scout and Llama 4 Maverick — our most advanced models yet and the best in their class for multimodality. Llama 4 Scout • 17B-active-parameter model

We knew very little about how LLMs actually work...until now. @AnthropicAI just dropped the most insane research paper, detailing some of the ways AI "thinks." And it's completely different than we thought. Here are their wild findings: 🧵

1/9 🚨 New Paper Alert: Cross-Entropy Loss is NOT What You Need! 🚨 We introduce harmonic loss as an alternative to the standard CE loss for training neural networks and LLMs! Harmonic loss achieves 🛠️significantly better interpretability, ⚡faster convergence, and ⏳less grokking!

The Road to AGI: along with @Emiliano_GLopez (who's awesome, go follow), I built an interactive timeline of everything in AI from the past few years. We're living through the most exciting time in history, and this site hopes to document it! Go visit: ai-timeline dot org (link below)

Block Diffusion: Interpolating Between Autoregressive and Diffusion Language Models

Happy to announce we outperformed @OpenAI o1 with a 7B model :) We released two self-improvement methods for verifiable domains in our preliminary paper -->

Mercury Is The First Diffusion LLM! AI simply groks the patterns of the universe. Diffusion LLMs literally manifest the LLM response and are so next generation. This is Mercury! The world’s first diffusion LLM.

Why 'I don’t know' is the true test for AGI: it’s a strictly harder problem than text generation! This magnificent 62-page paper ( https://t.co/MJXpVF4qv9) formally proves AGI hallucinations are inevitable, with 50 pages (!!) of supplementary proofs.

LLMs have complex joint beliefs about all sorts of quantities. And my postdoc @jamesrequeima visualized them! In this thread we show LLM predictive distributions conditioned on data and free-form text. LLMs pick up on all kinds of subtle and unusual structure: 🧵

Do current LLMs perform simple tasks (e.g., grade school math) reliably? We know they don't (is 9.9 larger than 9.11?), but why? Turns out that, for one reason, benchmarks are too noisy to pinpoint such lingering failures. w/ @josh_vendrow @EdwardVendrow @sarameghanbeery 1/5

12

48

238

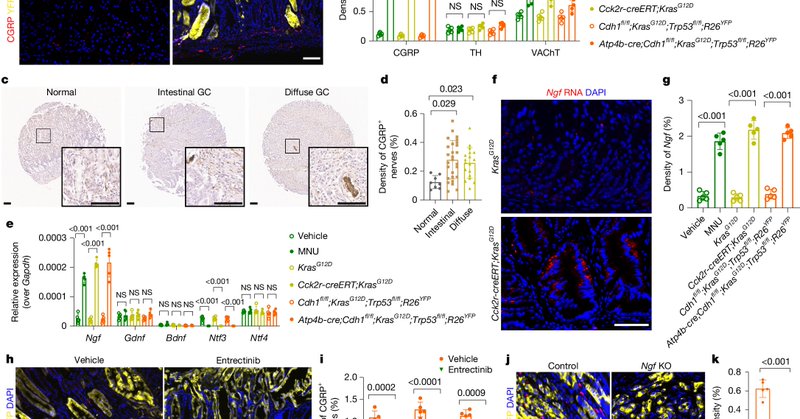

Cancer neuroimmunology is real. Nociceptive neurons promote gastric tumour progression via a CGRP–RAMP1 axis | Nature

nature.com

Nature - Functional connectivity between gastric cancer cells and sensory neurons offers a potential therapeutic target.

Pre-print: machine learning for neuroscience. We build interpretable biological network reconstructions from electrode recordings with ML and optimal transport. Towards models of the mechanisms driving behavior, we focus on single-trial neural activity and trial variability. 1/6

The most misunderstood condition: Brain fog. It's not just fatigue. It's not just stress. Here's what's really happening inside your body:

I am happy to announce that the first draft of my RL tutorial is now available. https://t.co/SjMdabl0yW

I'm excited to share our #NeurIPS2024 paper, "Modeling Latent Neural Dynamics with Gaussian Process Switching Linear Dynamical Systems" 🧠✨ We introduce the gpSLDS, a new model for interpretable analysis of latent neural dynamics! 🧵 1/10

How do LLMs learn to reason from data? Are they ~retrieving the answers from parametric knowledge🦜? In our new preprint, we look at the pretraining data and find evidence against this: Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢 🧵⬇️

Continuous-time RNNs are used in neuroscience to model neural dynamics. CNNs are used in vision neuroscience for image processing. So what's the right architecture to model the biological visual system? We propose a hybrid. (#NeurIPS2024 spotlight!) https://t.co/IJV5H1NVuW

openreview.net

In neuroscience, recurrent neural networks (RNNs) are modeled as continuous-time dynamical systems to more accurately reflect the dynamics inherent in biological circuits. However, convolutional...
