David D. Baek

@dbaek__

Followers: 2K

Following: 107

Media: 17

Statuses: 39

PhD Student @ MIT EECS / Mechanistic Interpretability, Scalable Oversight

Joined February 2024

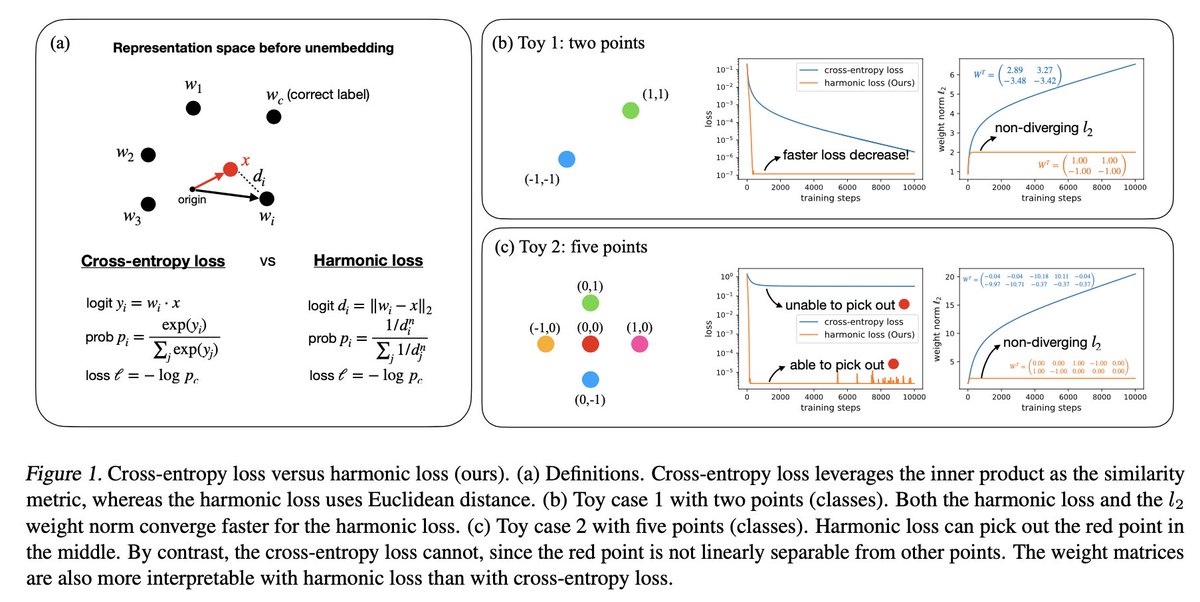

9/9 This is joint work with @ZimingLiu11, @riyatyagi86, and @tegmark! Check out the full paper and code here. Paper: Code:

17

21

297

6/6 This is joint work with @ericjmichaud_, @YuxiaoLi, @JoshAEngels, @LilySun, and @tegmark. For more details, check out the full paper!

4

14

89

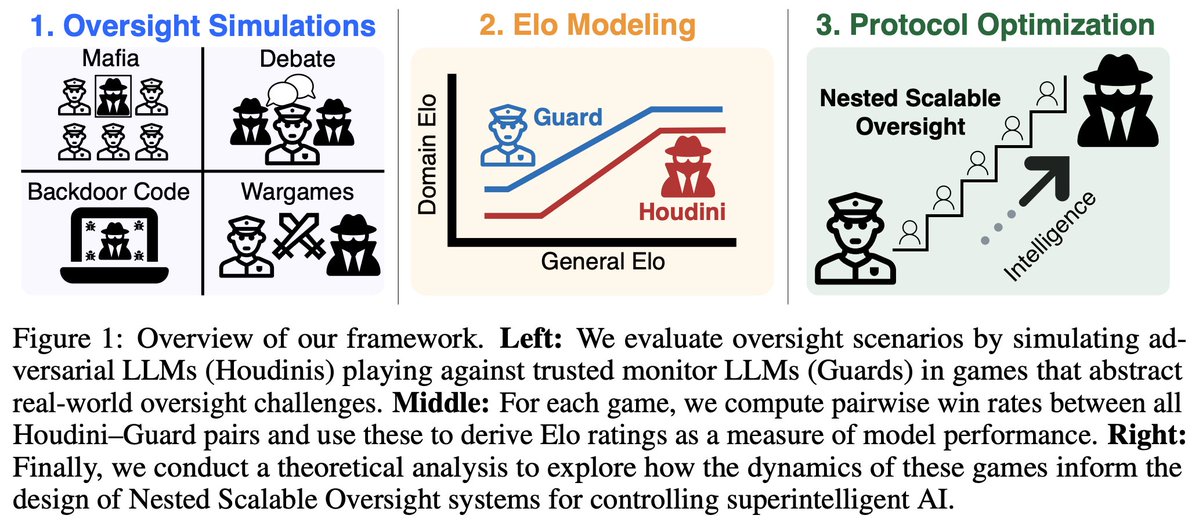

8/N This is joint work with @JoshAEngels, @thesubhashk, and @tegmark! Check out the links below for more details! Paper: Code: LessWrong:

2

0

9