David Wingate

@davidwingate

Followers

137

Following

24

Media

4

Statuses

27

Father of nine. Professor of computer science at BYU; working on big language models. Mormon and lovin' it!

Provo, UT

Joined June 2010

1/ Can AI chat assistants improve conversations about divisive topics such as gun control? I was very fortunate to join a team of outstanding researchers who designed an experiment intended to answer this question:

arxiv.org

A rapidly increasing amount of human conversation occurs online. But divisiveness and conflict can fester in text-based interactions on social media platforms, in messaging apps, and on other...

3

15

63

This is today's #mustread and easily one of the top #ai papers of 2022 at first glance. I want to dig more into the methods on this, but my preliminary run-through finds some fascinating patterns! @sheabrownethics, would you want to join F. LeRon Shults… https://t.co/fAA5q5ow0N


1

3

3

Great discussion of our project about using GPT-3 for social science research on the NYT Hard Fork podcast! Min 51. https://t.co/QhsKQUYLEN

@joshua_gubler @EthanBusby @davidwingate @ChrisRytting @NancyFulda

podcasts.apple.com

Podcast Episode · Hard Fork · 10/14/2022 · Subscribers Only · 1h

2

5

13

It's so cool to see this work go from a random idea @ChrisRytting and team had to a significant and impactful paper. You all should read the paper and follow Chris.

For Import AI, I wrote about a very special paper which I think has some significant implications. If we can use LLMs as proxies for people (for a certain level of detail and desired response accuracy), then I expect a bunch of strange things to happen. https://t.co/7EgTtF2vrm

0

2

6

LLMs may not 'understand' people, but they are incredibly good at approximating people (and things that people do). The thing I find consistently confusing is figuring out where approximation ends and understanding begins. I myself feel most of my insights are from approximation

2

1

36

@BrendanNyhan GPT-3 is remarkable! We have a paper (forthcoming soon) that shows some of the power of GPT-3 when applied to social science — here’s the link to the arxiv version of the paper:

1

3

6

Using LLMs for social (simulation) science https://t.co/ZLCtXlfj1r Once we figure out how to scale it, this approach could revolutionize agent-based simulation modeling for nuanced, multifaceted silicon samples cc @mjbommar, @computational, @jg_environ h/t @jackclarkSF Import AI

3

29

81

For Import AI, I wrote about a very special paper which I think has some significant implications. If we can use LLMs as proxies for people (for a certain level of detail and desired response accuracy), then I expect a bunch of strange things to happen. https://t.co/7EgTtF2vrm

Using LLMs for social (simulation) science https://t.co/ZLCtXlfj1r Once we figure out how to scale it, this approach could revolutionize agent-based simulation modeling for nuanced, multifaceted silicon samples cc @mjbommar, @computational, @jg_environ h/t @jackclarkSF Import AI

4

19

76

@metaviv @abenomicon @joon_s_pk Thanks for the interest in our work! We're working on figuring out how prompting GPT-3 compares to priming experiments with humans, but we don't have a systematic answer yet. Stay tuned! @NancyFulda @joshua_gubler @EthanBusby @davidwingate @ChrisRytting

1

3

7

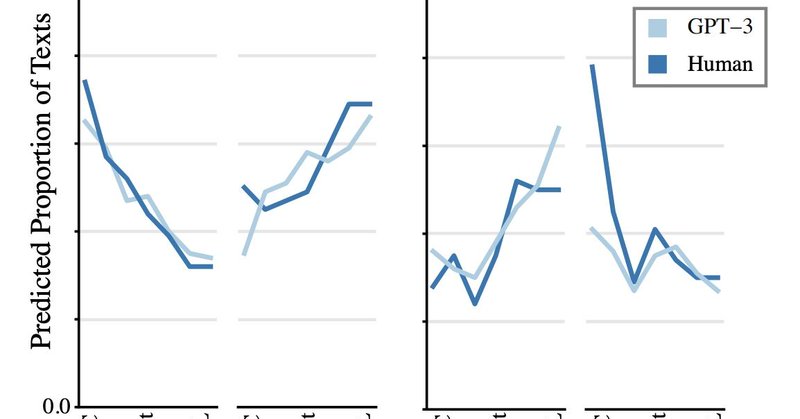

What would a social science Turing test look like? Amazing new research by @lpargyle et al. shows GPT-3 can create “silicon samples” that impersonate respondents to large surveys such as the American National Election Studies with remarkable accuracy. https://t.co/8W3vqgjisi.

5

16

71

Prompts are the bread and butter of LLMs. ✨But can they be compressed?✨ In new work at Findings of EMNLP, we show that prompts can be compressed ⬇️ while maintaining the most crucial information! ℹ️

2

25

175

This work was done in collaboration with @davidwingate and @MohammadShoeybi near the end of my time @byu - reach out with questions!

0

1

3

@joshua_gubler @BrendanNyhan Exciting project, co-authored with @davidwingate @ChrisRytting @lpargyle @NancyFulda. Although we didn't ask about Sesame Street (we should have!)

0

2

4

Creative work! Theoretical CS has this idea of producing useful consequences of computational hardness (e.g., cryptography). This work has that flavor: if LLMs are biased, can we put that bias to good use? Probably many challenges ahead, but love the creativity.

In a new paper, we ask whether you can use GPT-3 to survey humans by simulating those humans and asking them questions, as opposed to interviewing the actual humans. https://t.co/yXPKI3OvxA w/ @davidwingate @EthanBusby @joshua_gubler @lpargyle @NancyFulda

0

2

5

In a new paper, we ask whether you can use GPT-3 to survey humans by simulating those humans and asking them questions, as opposed to interviewing the actual humans. https://t.co/yXPKI3OvxA w/ @davidwingate @EthanBusby @joshua_gubler @lpargyle @NancyFulda

9

21

88

Can we use GPT-3 to better understand human preferences? It was trained on the internet after all. Really cool work from @lpargyle, @davidwingate, @ChrisRytting, and team https://t.co/Ue3BuKTXJH

1

4

13

Excited and grateful that our research team just received an NSF EAGER grant to further research using large-scale language models to study human attitudes. A great team: @lpargyle @EthanBusby @davidwingate @NancyFulda @ChrisRytting @tsor13

1

5

17
