Contextual AI

@ContextualAI

Followers: 2,272 · Following: 18 · Media: 12 · Statuses: 44

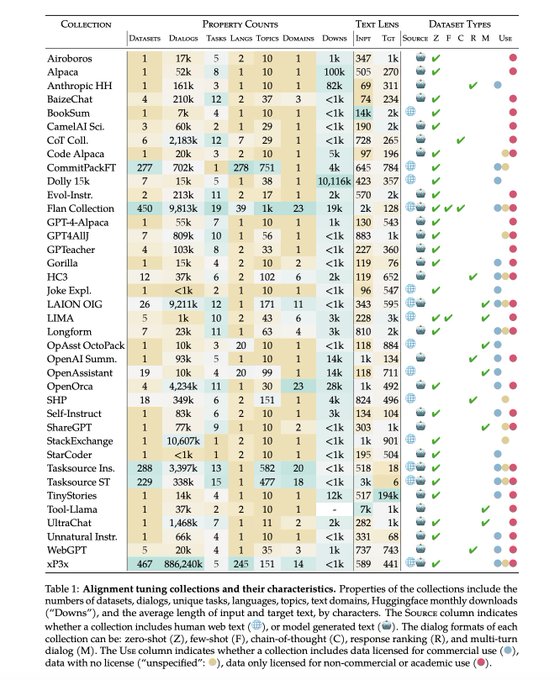

Correct licensing and attribution is critical when building LLMs for enterprise customers. Here at Contextual we care a lot about these issues and are glad to share our findings with the community, via research like this:

We analyze 1800+ datasets across licenses & metadata to help navigate the increasing complexity of instruction data ☸⛴️

CommitPackFT & xP3x are the only instruction datasets with >>50 languages & very permissive licenses ⭐

📜

A huge effort led by @ShayneRedford 🚀❤️

Congratulations to our very own @Muennighoff and the other awardees for being #NeurIPS2023 outstanding paper runners-up with their work on scaling data-constrained language models!

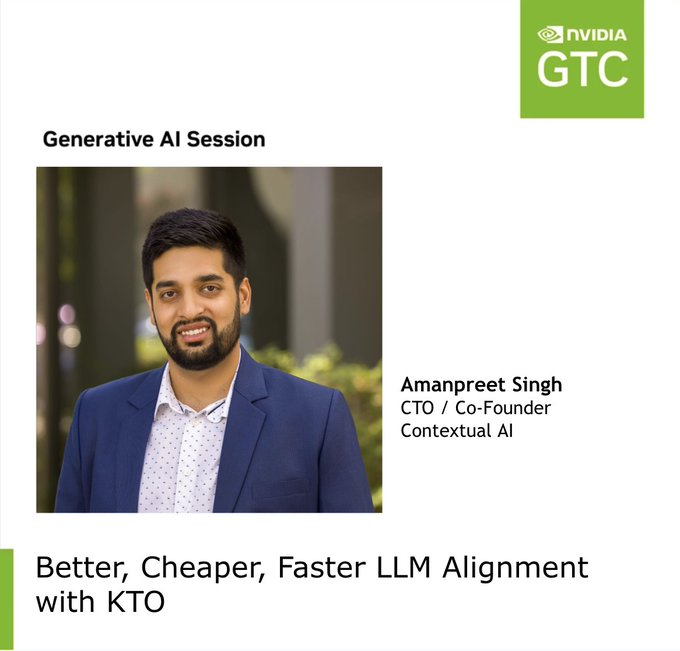

We're at #GTC24! Join us on March 20th to hear @apsdehal share how we developed Kahneman Tversky Optimization (KTO) to speed up the #LLM and human feedback loop for #generativeai.

Our CEO @douwekiela spoke at @saastr's AI Day yesterday. Want to know what it takes to build AI products for the enterprise? Watch the recording here:

It was great to see Contextual AI in @ThomasOrTK's keynote at #GoogleCloudNext. We're excited to partner and build the next generation of language models.

We're excited to share our work on better, faster and cheaper alignment of large language models with the broader community:

Welcome to the team @StasBekman!

I'm super excited to start working at @contextualai, where I will be training LLMs with retrieval to help businesses deploy AI that overcomes hallucination, keeps data up-to-date and runs much faster inference.

If you're new to , see: …

Contextual AI leverages @googlecloud GKE Autopilot for our retrieval-augmented language model technology, optimized for enterprise workflows. Discover how #GKE streamlines operations, enhances performance, and reduces costs for AI applications:

Announcing LENS 🔎, a framework for vision-augmented language models, making language models see.

Read more:

Demo:

We're proud to be a 2023 #IA40 Intelligent Applications Rising Star winner!

We are excited to unveil the 2023 #IA40: the top private companies building & enabling intelligent & generative apps today.

We'll celebrate the winners on Oct. 11 at the #IASummit in partnership w/ @Microsoft, @AWSstartups, @NYSE, @McKinsey, & @PitchBook!

Looking to get the most out of your hardware when training models? Take a look at this new report from our very own @StasBekman.

Selecting the right data is critical for LLM performance across all stages of training. This recent paper surveys data selection and shows that there is much more exciting research to be done.

We're thrilled to be featured on the @CBinsights #AI100 as one of the "most promising AI startups" in the "Foundation models & APIs" category! 🚀

Boom: The most promising AI companies around the world.

This year's winners include startups working on generative AI infrastructure, emotion analytics, general-purpose humanoids, and more.

#AI100

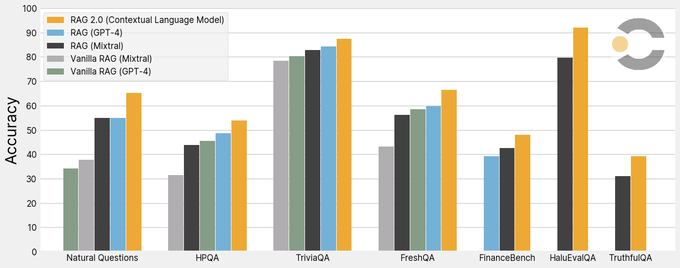

Join us on April 10 as we take part in #GoogleCloudNext. Our CEO @douwekiela will dive into retrieval-augmented generation (RAG), which he pioneered at Facebook, and share how Contextual AI's RAG 2.0 approach is key to #generativeAI deployment in the #enterprise. Register to join…

We have a new way to build #enterpriseai with RAG 2.0. Our CTO @apsdehal will be sharing how we accelerate #AI training workloads with @GoogleCloudNext tech. Join the discussion on April 10 →

It’s all open source! The paper () provides detailed ablations.

Effort led by the amazing @Muennighoff, in collaboration with @Microsoft, @MSFTResearch and @HKUniversity.
