Myra Cheng

@chengmyra1

Followers

819

Following

740

Media

25

Statuses

175

RT @stanfordnlp: Computer-vision research powers surveillance technology by @ria_kalluri, @chengmyra1, and colleagues out in @Nature! “Her…

0

7

0

RT @jathansadowski: Very happy to see my invited article for Nature is out in the new issue. I wrote about computer vision, the AI surveill…

0

29

0

RT @irenetrampoline: What more could we understand about the fractal, “jagged” edges of AI system deployments if we had better ways to list…

0

17

0

made it to Athens for Facct!! reach out if you want to chat about perceptions of AI, metaphors, sycophancy, anthropomorphism, cats, or anything else :D #facctcats (he is reading about himself)

How does the public conceptualize AI? Rather than self-reported measures, we use metaphors to understand the nuance and complexity of people’s mental models. In our #FAccT2025 paper, we analyzed 12,000 metaphors collected over 12 months to track shifts in public perceptions.

0

0

18

RT @ShirleyYXWu: Even the smartest LLMs can fail at basic multiturn communication. Ask for grocery help → without asking where you live…

0

45

0

RT @michaelryan207: New #ACL2025NLP Paper! 🎉 Curious what AI thinks about YOU? We interact with AI every day, offering all kinds of feedb…

0

36

0

RT @baixuechunzi: Avoiding race talk can feel unbiased, but it often isn’t. This racial blindness can reinforce subtle bias in humans. Alig…

0

8

0

RT @shaily99: 🖋️ Curious how writing differs across (research) cultures? 🚩 Tired of “cultural” evals that don't consult people? We engaged…

0

18

0

RT @lltjuatja: When it comes to text prediction, where does one LM outperform another? If you've ever worked on LM evals, you know this que…

0

25

0

RT @manoelribeiro: This @acm_chi paper surveys 319 knowledge workers. The authors find that GenAI reduces cog load for some tasks (info sea…

0

1

0

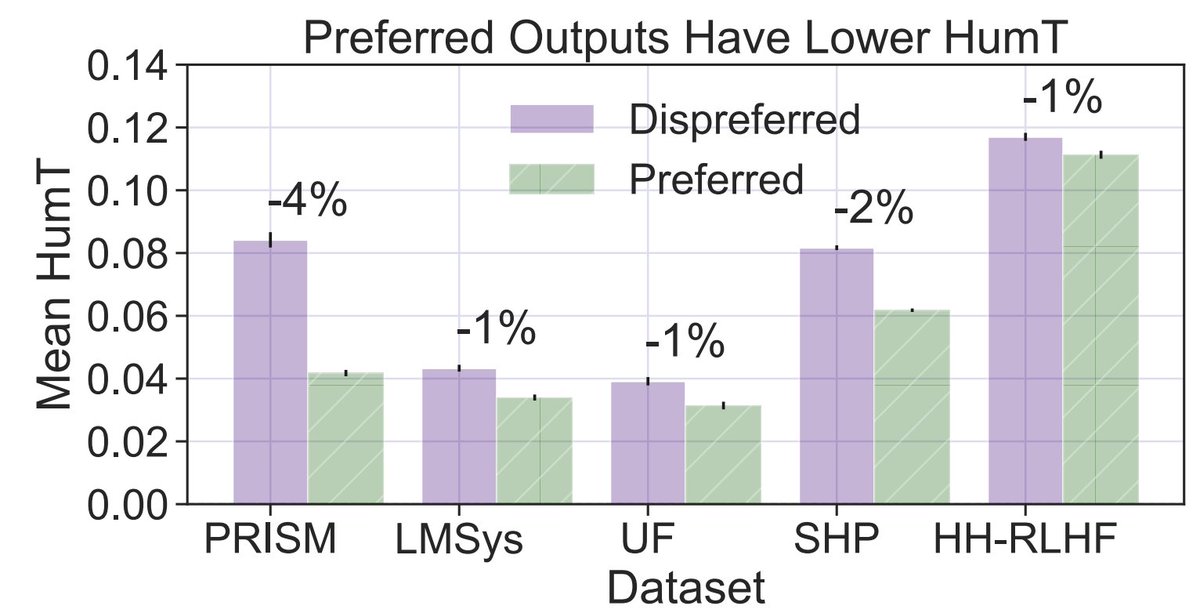

@sunnyyuych @jurafsky Working on this has made me realize how weird and messy preference datasets are and how we can improve them (cc @enfleisig haha). If that's something you're also thinking about I'd love to chat!! or read your paper :D

0

1

8

Paper: Code: Thanks to my wonderful collaborators @sunnyyuych @jurafsky and everyone who helped along the way!!

1

1

5

Do people actually like human-like LLMs? In our #ACL2025 paper HumT DumT, we find a kind of uncanny valley effect: users dislike LLM outputs that are *too human-like*. We thus develop methods to reduce human-likeness without sacrificing performance.

5

19

154

RT @stanfordnlp: Only ding a model for making mistakes! It gives better results in RL and avoids mode collapse. We still understand so…

0

14

0

RT @ang3linawang: Have you ever felt that AI fairness was too strict, enforcing fairness when it didn’t seem necessary? How about too narro…

0

11

0