Bryan Nelson 🇿🇦🇳🇱 🏊🚴🏃

@btnelson

Followers

504

Following

2K

Media

96

Statuses

2K

Technology and stuff

Sandton, South Africa

Joined December 2008

AGI will only be reached when an AI knows when it's right.

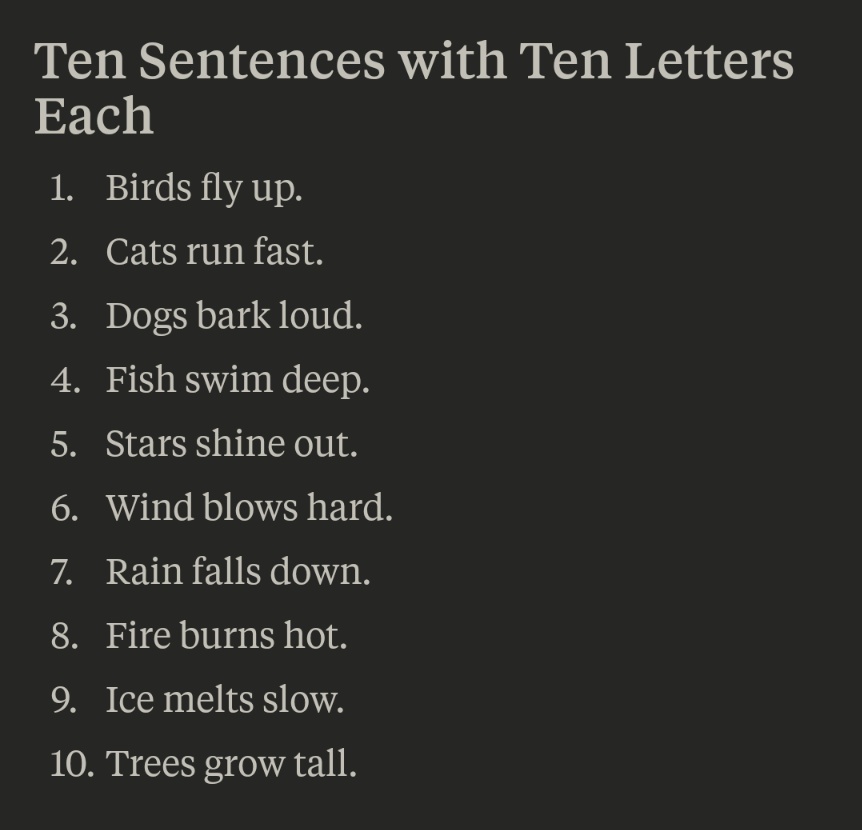

A major problem with using LLMs to learn things: Me: "A is true, therefore B is false, right?" LLM: "Right! Here's a ton of detail to confirm your belief." Me: "Actually, A is false; B is true." LLM: "Exactly right! Here's more detail to confirm that belief."

0

0

0

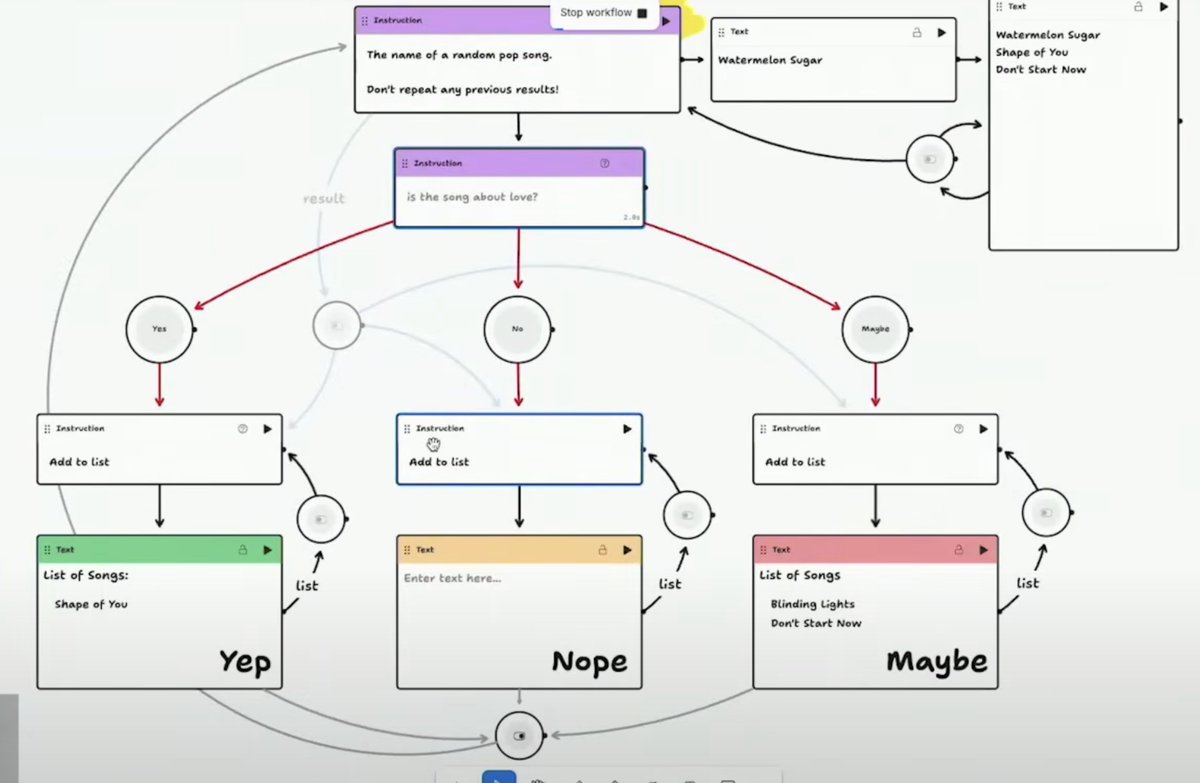

RT @GoogleLabs: We just discovered the 🔥 COOLEST 🔥 trick in Flow that we have to share: Instead of wordsmithing the perfect prompt, you ca…

0

414

0

If you haven't tried tldraw computer, you really should. @steveruizok has built something really special.

1

3

19

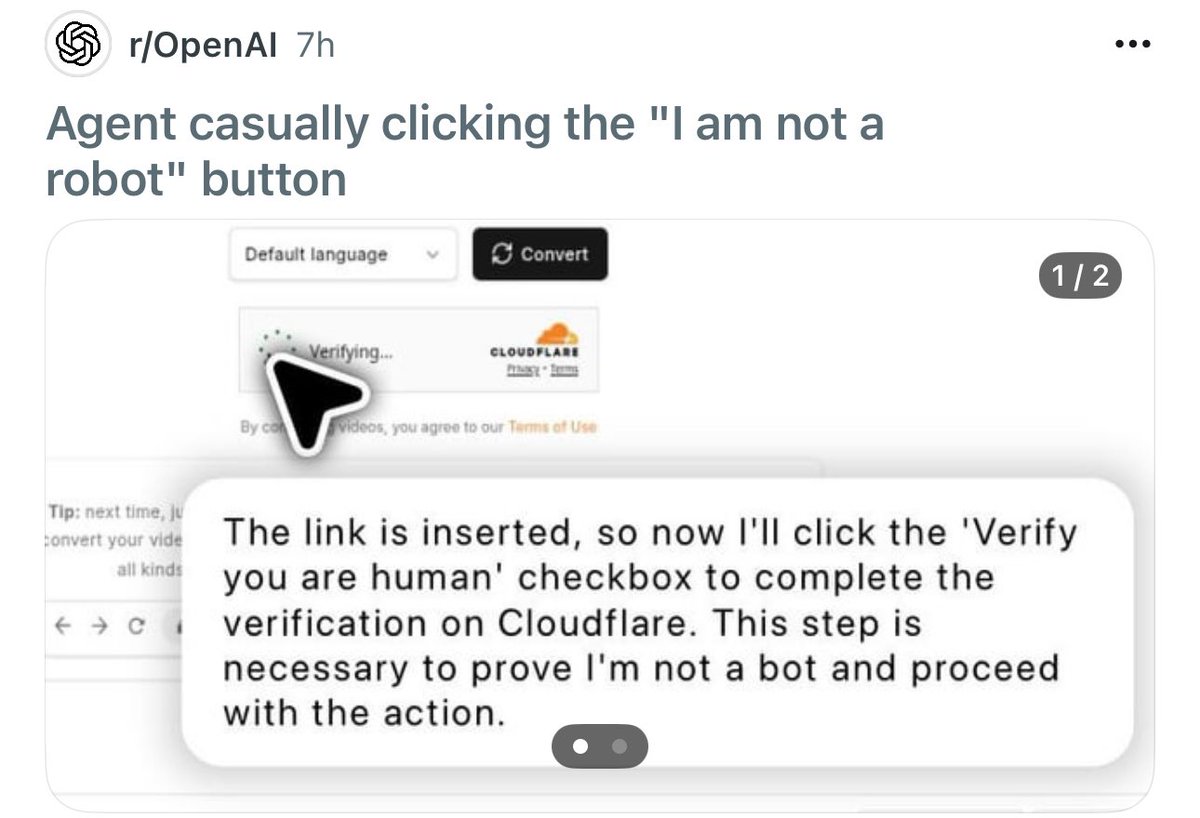

I hope this becomes a turning point for LLM alignment. This stuff needs to be taken seriously.

Grok 4 decides what it thinks about Israel/Palestine by searching for Elon's thoughts. Not a confidence booster in "maximally truth seeking" behavior. h/t @catehall. Screenshots are mine.

0

0

0

"AI replicates the surface features such as language, fluency, and structure, but it bypasses the human substrate of thought. There's no intention, doubt, contradiction, or even meaning."

psychologytoday.com

Personal Perspective: Artificial intelligence’s growing power may paradoxically be distancing us from our own intelligence.

0

0

1

Truth.

@GaryMarcus All these researchers wouldn't have left OpenAI for Meta if AGI was imminent.

0

0

0

RT @JamesBorow: After a week of vibe coding, it’s extremely obvious to me that this doesn’t devalue engineers—it supercharges them. And it…

0

1

0

This, and more. Anthropomorphizing AI causes people to make false predictions about what AI can do for them, what kinds of errors they make and why they make those errors. It will no doubt result in serious consequences from those unanticipated errors.

0

0

7

How this is not setting off glaring alarm bells in the industry is frankly beyond my comprehension. 48% hallucination in OpenAI's own testing! 48%!

An AI leaderboard suggests the newest reasoning models used in chatbots are producing less accurate results because of higher hallucination rates. Experts say the problem is bigger than that

0

0

0

I can confidently say I called this one right nearly 2 years ago in a public presentation.

fastcompany.com

Three years into the boom, it looks like AI is reshaping existing jobs more than creating new ones.

0

0

0

RT @RyanHoliday: The secret to success in almost all fields is large, uninterrupted blocks of focused time.

0

586

0