Bryan M. Li

@bryanlimy

Followers: 669

Following: 8K

Media: 29

Statuses: 328

Encode Fellow @imperialcollege. Biomedical AI PhD @EdinburghUni. NeuroAI & ML4Health. Raised by @Cohere_Labs and @UofT.

London, England

Joined January 2014

As Transactions on Machine Learning Research (TMLR) grows in number of submissions, we are looking for more reviewers and action editors. Please sign up! Only one paper to review at a time and <= 6 per year. Reviewers report greater satisfaction than when reviewing for conferences!

2

22

59

The model also works with datasets containing a few hundred neurons from different animals and laboratories. There is more good stuff in the appendix of the paper and the code repository! Paper: https://t.co/e6Qt2vSFU6 Code and model weights: https://t.co/le8YSeH0Lq 7/7

github.com

Movie-trained transformer reveals novel response properties to dynamic stimuli in mouse visual cortex - bryanlimy/ViV1T-closed-loop

0

0

1

We sincerely thank Turishcheva & Fahey et al. (2023) for organising the Sensorium challenge(s!) @SensoriumComp and for making their high-quality, large-scale mouse V1 recordings publicly available, which made this work possible! 6/7

1

0

1

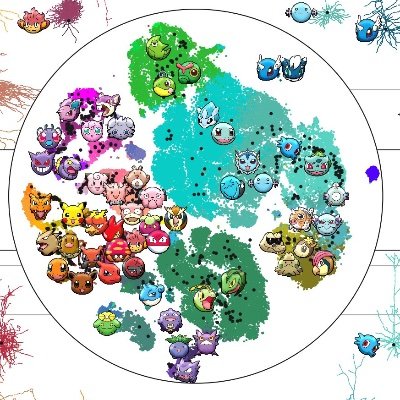

We compared our model against SOTA models from the Sensorium 2023 challenge and showed that ViV1T is the most performant while being more computationally efficient. We also evaluated the data efficiency of the model by varying the number of training samples and neurons. 5/7

1

0

1

Beyond gratings, we use ViV1T to generate centre-surround most exciting videos (MEVs) via the Inception Loop (Walker et al. 2019). We confirm in vivo that MEVs elicit stronger contextual modulation than gratings, natural images and videos, and most exciting images (MEIs). 4/7

1

0

1

ViV1T also revealed novel functional features. We found new properties of contextual responses to surround stimuli in V1 neurons, both movement- and contrast-dependent. We validated this in vivo! 3/7

1

0

1

ViV1T, only trained on natural movies, captured well-known direction tuning and contextual modulation of V1. Despite no built-in mechanism for modelling neuron connectivities, the model predicted feedback-dependent contextual modulation (including feedback onset delay!). 2/7

1

0

1

We present our preprint on ViV1T, a transformer for dynamic mouse V1 response prediction. We reveal novel response properties and confirm them in vivo. With @wolfdewulf, Danai Katsanevaki, Arno Onken and Nathalie Rochefort. Paper and code at the end of the thread! 🧵1/7

2

2

6

Launching: 18 Encode Fellows. We gathered our inaugural cohort in London for 3 days of intense workshops, red-teaming, discovery, socials, and more. It culminated in our first ‘Community Critique’. Curious? Check out our projects: https://t.co/bBfUApIiYJ

0

3

3

How can AI become a partner in scientific discovery, design and dreaming? @pillar_vc brought together 150 of the Encode: AI for Science community for 'The Experimental Imagination' - a BBQ below The Shard in London Bridge to celebrate Encode's milestones so far.

1

2

4

Applications are now open for the next cohort of the Cohere Labs Scholars Program! 🌟 This is your chance to collaborate with some of the brightest minds in AI & chart new courses in ML research. Let's change the spaces where breakthroughs happen. Apply by Aug 29.

15

144

528

Excited to co-organize our NeurIPS 2025 workshop on Foundation Models for the Brain and Body! We welcome work across ML, neuroscience, and biosignals — from new approaches to large-scale models. Submit your paper or demo! 🧠💪

Excited to announce the Foundation Models for the Brain and Body workshop at #NeurIPS2025!🧠 We invite short papers or interactive demos on AI for neural, physiological or behavioral data. Submit by Aug 22 👉 https://t.co/t77lrS2by5

0

6

25

Retrieval-augmented generation (RAG) helps AI systems provide more information to a large language model when generating responses to user queries. A new method for conducting “global” queries can optimize the performance of global search in GraphRAG. https://t.co/AiWGPctE2C

0

12

38

Introducing ✨Aya Expanse ✨ – an open-weights, state-of-the-art family of models to help close the language gap with AI. Aya Expanse is both global and local, driven by a multi-year commitment to multilingual research. https://t.co/0WsC2i9C8a

17

141

426

Did you miss our last 🛣️ Roads to Research session with @bryanlimy, @lekeonilude and @strubell? Catch up on paths to graduate studies with these guest speakers now on our channel or podcast! ✨

1

2

10

1/3 Excited to share our tell-tale heart study on Bipolar Disorder (BD), now published in @npjMentalHealth : https://t.co/16FeykXwNM

@tetraduzione @bryanlimy @DHidalgoMazzei @BioMedAI_CDT @BipolarBCN

nature.com

npj Mental Health Research - A Bayesian analysis of heart rate variability changes over acute episodes of bipolar disorder

1

6

6

Congrats to Dr. @andreasgrv who just defended his #PhD thesis on unargmaxability and constraints in deep learning, examined by Vlad Niculae and @driainmurray 🎉 A pity you could not (yet) see Andreas' beautifully designed thesis! In the meantime, some pics from the pre-viva 👇

6

10

78

Happy to share that I have started my internship at @MSFTResearch and will be working in Redmond, WA for the next 3 months! Let me know if you’re around and want to hang out!

2

1

68

🚨We're hiring @StanfordMed @Stanford *Postdoc in visual & systems neuroscience* Focus on experiment design (visual stimuli, behavior etc) Join our collaborative effort w/ @kfrankelab @naturecomputes @KonstantinWille @eywalker & more Please retweet

1

23

58

🚨We're hiring @StanfordMed @Stanford *Postdoc in visual & systems neuroscience* Focus on experiment design (visual stimuli, behavior etc)👇 Join our collaborative effort w/ @AToliasLab @naturecomputes @KonstantinWille @eywalker & more Please retweet https://t.co/BUCokQJrQo

1

29

55