Bethge Lab

@bethgelab

Followers

3K

Following

319

Media

61

Statuses

311

Perceiving Neural Networks

Tübingen, Germany

Joined July 2017

RT @adhiraj_ghosh98: Excited to be in Vienna for #ACL2025🇦🇹! You'll find @sbdzdz and I by our ONEBench poster, so do drop by!. 🗓️Wed, July….

0

4

0

RT @ori_press: Do language models have algorithmic creativity?. To find out, we built AlgoTune, a benchmark challenging agents to optimize….

0

59

0

Recent work from our lab trying to ask questions on how to fairly evaluate and measure progress in language model reasoning!. Check out the full thread below!.

🚀New Paper!. Everyone’s celebrating rapid progress in math reasoning with RL/SFT. But how real is this progress?. We re-evaluated recently released popular reasoning models—and found reported gains often vanish under rigorous testing!! 👀. 🧵👇

0

2

16

RT @lukas_thede: 🧠 Keeping LLMs factually up to date is a common motivation for knowledge editing. But what would it actually take to supp….

0

6

0

RT @CgtyYldz: For our "Automated Assessment of Teaching Quality" project, we are looking for two PhD students: one in educational/cognitive….

0

7

0

RT @shiven_sinha: AI can generate correct-seeming hypotheses (and papers!). Brandolini's law states BS is harder to refute than generate. C….

0

38

0

Checkout this cool new work from Bethgelab & friends!. Falsifying flawed solutions is key to science—but LMs aren't there yet. Even advanced models produce counterexamples for <9% of mistakes, despite solving ~48% of problems. Full thread below:.

AI can generate correct-seeming hypotheses (and papers!). Brandolini's law states BS is harder to refute than generate. Can LMs falsify incorrect solutions? o3-mini (high) scores just 9% on our new benchmark REFUTE. Verification is not necessarily easier than generation 🧵

0

2

15

Check out some cool data-centric analysis on reasoning datasets! More to come from our lab!.

CuratedThoughts: Data Curation for RL Datasets 🚀. Since DeepSeek-R1 introduced reasoning-based RL, datasets like Open-R1 & OpenThoughts emerged for fine-tuning & GRPO. Our deep dive found major flaws — 25% of OpenThoughts needed elimination by data curation. Here's why 👇🧵.

0

1

6

RT @ahochlehnert: CuratedThoughts: Data Curation for RL Datasets 🚀. Since DeepSeek-R1 introduced reasoning-based RL, datasets like Open-R1….

0

12

0

RT @ShashwatGoel7: 🚨Great Models Think Alike and this Undermines AI Oversight🚨.New paper quantifies LM similarity.(1) LLM-as-a-judge favor….

0

29

0

Check out the latest work from our lab on how to merge your multimodal models over time?. We find several exciting insights with implications for model merging, continual pretraining and distributed/federated training!. Full thread below:.

🚀New Paper. Model merging is the rage these days: simply fine-tune multiple task-specific models and merge them at the end. Guaranteed perf boost!. But wait, what if you get new tasks over time, sequentially? How to merge your models over time?. 🧵👇

0

0

12

RT @marcel_binz: Excited to announce Centaur -- the first foundation model of human cognition. Centaur can predict and simulate human behav….

huggingface.co

0

246

0

Great work by all our lab members and other collaborators!.@explainableml @GoogleDeepMind @CAML_Lab.

0

0

1

A Practitioner's Guide to Real-World Continual Multimodal Pretraining. We provide practical insights into how to continually pretraining contrastive multimodal models under compute and data constraints. 🧵👇.

🚀New Paper: "A Practitioner's Guide to Continual Multimodal Pretraining"!. 🌐Foundation models like CLIP need constant updates to stay relevant. How to do this in the real-world?.Answer: Continual Pretraining!!. We studied how to effectively do this.🧵👇

1

0

2

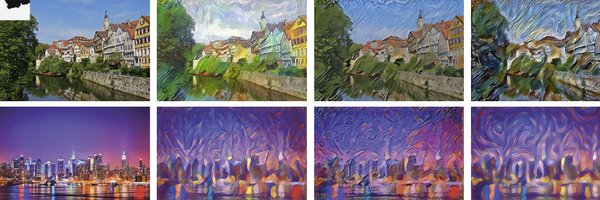

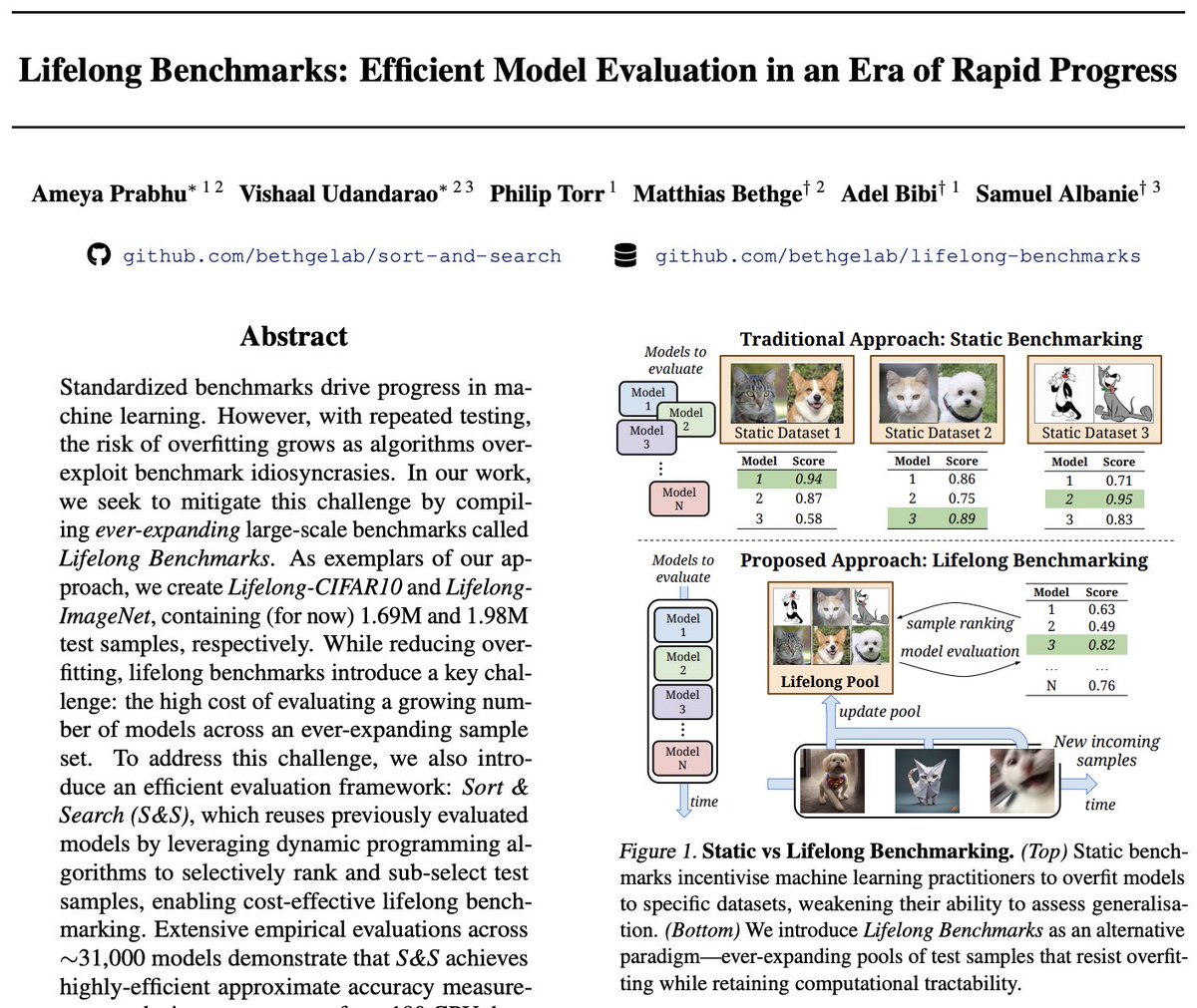

Efficient Lifelong Model Evaluation in an Era of Rapid Progress. TLDR: This work addresses the challenge of spiraling evaluation cost through an efficient evaluation framework called Sort & Search (S&S), reducing evaluation costs by 99x. 🧵👇.

🚀New Paper Alert!.Ever faced the challenge of ML models overfitting to benchmarks? Have computational difficulties evaluating models on large benchmarks? We introduce Lifelong Benchmarks, a dynamic approach for model evaluation with 1000x efficiency! .

1

0

1

CiteME: Can Language Models Accurately Cite Scientific Claims?. TLDR: CiteME is a benchmark designed to test the abilities of language models in finding papers that are cited in scientific texts. 🧵👇.

Can AI help you cite papers?.We built the CiteME benchmark to answer that. Given the text:."We evaluate our model on [CITATION], a dataset consisting of black and white handwritten digits".The answer is: MNIST. CiteME has 130 questions; our best agent gets just 35.3% acc (1/5)🧵

1

0

4

(1) No "Zero-Shot" Without Exponential Data. TLDR: We show that multimodal models require exponentially more data on a concept to linearly improve their performance on tasks pertaining to that concept, highlighting extreme sample inefficiency. 🧵👇.

🚀New Preprint Alert! . 📊Exploring the notion of "Zero-Shot" Generalization in Foundation Models. Is it all just a myth? Our latest preprint dives deep. Check it out!🔍.

1

0

6

Excited to announce 5 accepted papers from our lab at #neurips2024!!🥳🎉. Brief details of each below!. Excited for an insightful December in Vancouver!.

1

8

57