CAML Lab

@CAML_Lab

Followers

100

Following

54

Media

1

Statuses

26

Cambridge Applied Machine Learning Lab @Cambridge_Uni led by PI @SamuelAlbanie

Cambridge

Joined January 2023

RT @JRobertsAI: We need you, eagle-eyed folks of X!. Help us red team ZeroBench to find errors. To recognise effort, we will offer co-autho….

0

7

0

🪡📢 New paper from our group!. We explore the ability of frontier LLMs to follow threads of information through long context windows. "Needle Threading: Can LLMs Follow Threads through Near-Million-Scale Haystacks?". Project page:

🎺New paper!. "Needle Threading: Can LLMs Follow Threads through Near-Million-Scale Haystacks?". 🧵(1/5)

0

0

1

📢📢 Check out this new paper from our group!. A Practitioner's Guide to Continual Multimodal Pretraining:

arxiv.org

Multimodal foundation models serve numerous applications at the intersection of vision and language. Still, despite being pretrained on extensive data, they become outdated over time. To keep...

🚀New Paper: "A Practitioner's Guide to Continual Multimodal Pretraining"!. 🌐Foundation models like CLIP need constant updates to stay relevant. How to do this in the real-world?.Answer: Continual Pretraining!!. We studied how to effectively do this.🧵👇

0

0

2

🚨New paper from our group introducing GRAB!. GRAB is a challenging GRaph Analysis Benchmark for LMMs. Project page: Paper:

arxiv.org

Large multimodal models (LMMs) have exhibited proficiencies across many visual tasks. Although numerous well-known benchmarks exist to evaluate model performance, they increasingly have...

🎉📢New Paper!.Introducing GRAB: A Challenging GRaph Analysis Benchmark for Large Multimodal Models. The highest-performing model scores just 21.7%. A thread 🧵

0

0

4

🚨New work from our group introducing SciFIBench!. We evaluate the scientific figure interpretation capabilities of 30 LMM, VLM and human baselines, including GPT-4o!. Paper: Data: Repo:

github.com

NeurIPS 2024: SciFIBench: Benchmarking Large Multimodal Models for Scientific Figure Interpretation - jonathan-roberts1/SciFIBench

Introducing SciFIBench, a scientific figure interpretation benchmark for LMMs! . - We evaluate 30 LMM, VLM and human baselines.- GPT-4o is much better than GPT-4V.- The mean human narrowly outperforms GPT-4o & Gemini-Pro 1.5. (1/5)

0

1

5

RT @sinha_shiven: Excited to announce our preprint!. We develop a symbolic system for IMO Geometry that can rival Silver Medalists. Combine….

0

68

0

🚨🚨🚨Fresh-off-the-press work out of our group!. This work questions how meaningful the term "Zero-Shot" really is in the context of multimodal models; turns out its really more "exponential-shot"!!🤔. Check out the thread below for more details🧵👇.

🚀New Preprint Alert! . 📊Exploring the notion of "Zero-Shot" Generalization in Foundation Models. Is it all just a myth? Our latest preprint dives deep. Check it out!🔍.

0

0

4

RT @ChombaBupe: It turns out the data bottleneck problem is more dire than initially thought:. AI model performance - which can be largely….

0

283

0

RT @abursuc: Fun paper by teams of @bethgelab & @SamuelAlbanie diving into the so-thought "magic" zero-shot generalization properties of CL….

0

6

0

RT @bethgelab: 🚨Exciting new findings from our lab! . This work challenges the concept of "Zero-Shot Generalization" in multimodal models.….

0

13

0

RT @arankomatsuzaki: No “Zero-Shot” Without Exponential Data: Pretraining Concept Frequency Determines Multimodal Model Performance. repo:….

0

52

0

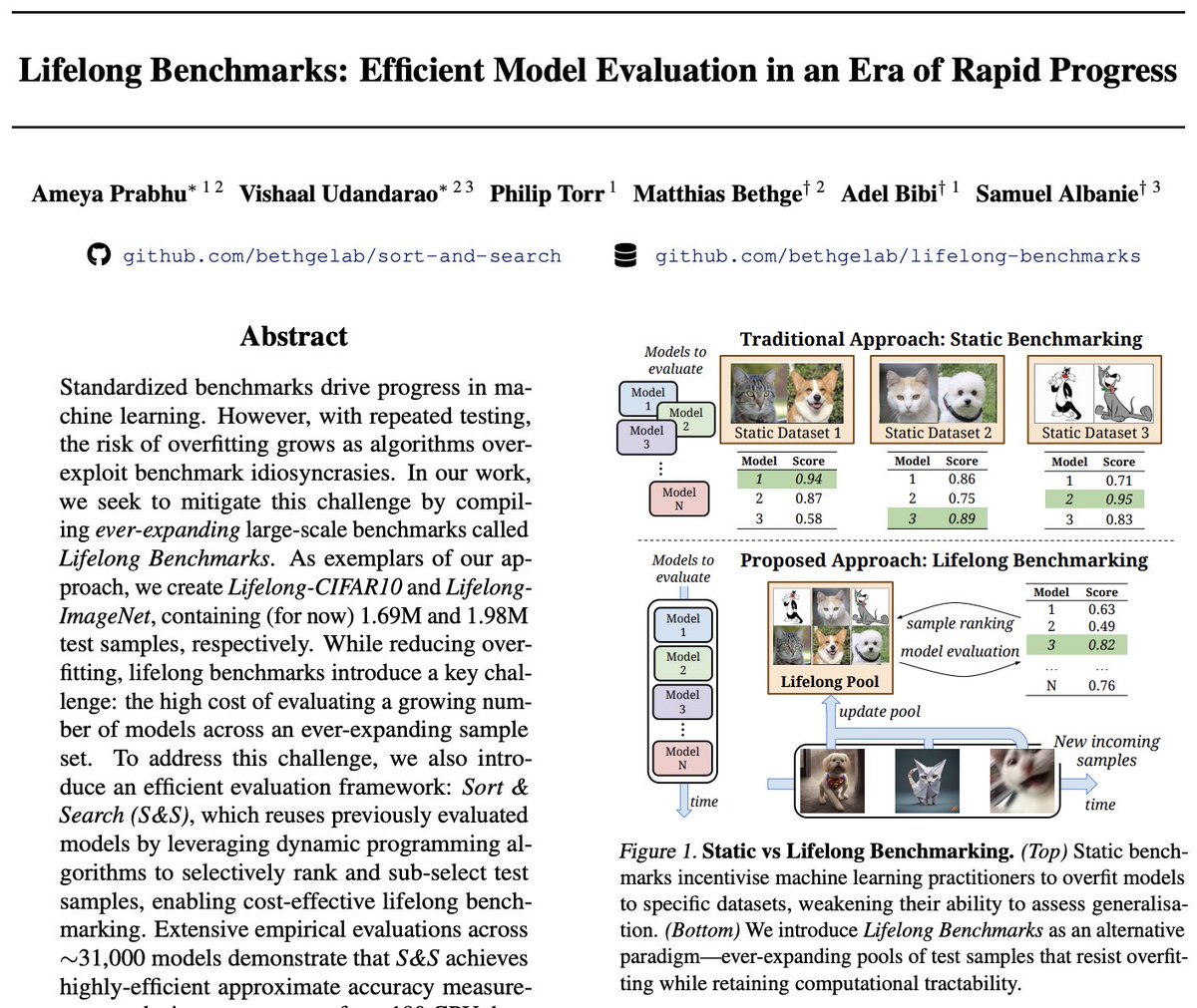

Fresh new work from our group introducing Lifelong Benchmarks! . Testing models on static benchmarks leads to severe "benchmark overfitting", inhibiting true generalisation. This paper tackles this problem and proposes a simple method to solve it. Check out more details here👇.

🚀New Paper Alert!.Ever faced the challenge of ML models overfitting to benchmarks? Have computational difficulties evaluating models on large benchmarks? We introduce Lifelong Benchmarks, a dynamic approach for model evaluation with 1000x efficiency! .

0

1

3

RT @bethgelab: @thwiedemer @jackhb98 @kotekjedi_ml @JuhosAttila @wielandbr @MatthiasBethge @prasannamayil @evgenia_rusak .

arxiv.org

Recent advances in the development of vision-language models (VLMs) are yielding remarkable success in recognizing visual semantic content, including impressive instances of compositional image...

0

4

0

RT @bethgelab: Exciting news!🥳🎉.4 papers (1 oral, 3 posters) from our lab were accepted to #ICLR24!.Brief 🧵 below:.

0

14

0

RT @vishaal_urao: Excited to share that our work on Visual Data-Type Identification got accepted to #ICLR24🚀. Vienna incoming 🚆.

0

5

0

RT @vishaal_urao: Way too late on the #NeurIPS tweet train, but here goes. @MatthiasBethge and I will give a talk at the Scaling, Alignme….

sites.google.com

This is the 6th workshop in our Scaling workshop series that started in Oct 2021. The objective of these workshop series, organized by the CERC in Autonomous AI Lab led by Irina Rish at the Univers...

0

5

0