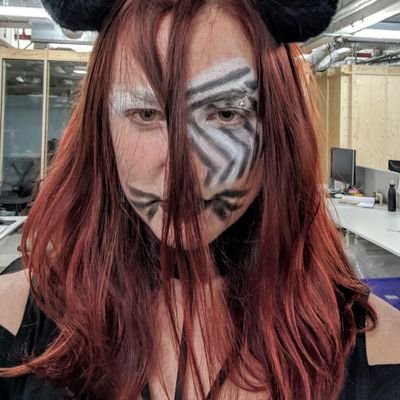

Patrick Lewis

@PSH_Lewis

Followers: 5K · Following: 2K · Media: 21 · Statuses: 349

London-based AI/NLP Research Scientist. I lead RAG, Tool-use and Agents at Cohere. Previously Fundamental AI Research (FAIR) at Meta AI, and UCL AI.

London, England

Joined August 2018

👋Psst! Want more faithful, verifiable and robust #LLM reasoning than with CoT, but using external solvers is meh? Our FLARE💫uses Logic Programming with Exhaustive Simulated Search to achieve this.🧵 With @PMinervini @PSH_Lewis @pat_verga @IAugenstein

https://t.co/cSHn97iLVJ

😅

So happy to see @sarahookr and @PSH_Lewis on the AI 100 :) they have both contributed so much to @cohere and the field in general. Working with such impactful and ingenious people is one of the best perks of being at cohere :)

@karpathy @Ouponatime38 We build this at cohere 🙌 - claim level citations throughout our whole annotation, training, and inference stack.

Command-R by Cohere is OUT NOW! Download it at your favorite model store 🎉 It’s been quite a ride to get to this point and I’m incredibly proud of what we have achieved with RAG, tool use, and all of Cohere. I want to highlight one particular unique aspect of ⌘-R: citations!

@Teknium1 Command R+ is great for tool usage. We added tools (web search, url fetching, document parsing, image generation, CosXL for editing, etc). We even added a calculator before the ipad

document AI landed to @huggingface chat, powered by open-source models (@cohere Command-R+) with tool calling 🛠️✨ explore many tools including image generation, image editing and more at Hugging Chat 🤗💬

New paper! RAG-timely: *MultiContrievers: Analysis of Dense Retrieval Representations* w/ @PSH_Lewis @EntilZhaPR @JaneDwivedi

https://t.co/dUjWrm2NQ5. Months late after the ArXiv upload 😅 because we were too busy at @cohere releasing multiple new LLMs 🔥. TLDR & details in 🧵

arxiv.org

Dense retrievers compress source documents into (possibly lossy) vector representations, yet there is little analysis of what information is lost versus preserved, and how it affects downstream...

Evaluation in AI only works when a problem space is sufficiently scoped. We no longer evaluate LLMs on specific things, we use them for almost all our digital thinking. There is really no solution to all our eval wishes.

maybe controversial, but: Prompts are a property of models, not benchmarks. Assuming the opposite leads to benchmark hacking. Corollary: LLM devs gotta start releasing their prompts when model drops!

@percyliang all models will favour some sort of prompt, and the reason you get lower scores is that people report with the prompt that makes sense for their models. optimally elicited performance (sans chain-of-thought or k-shot shenanigans) is actually much fairer.

New paper from our team, led by @pat_verga. Are you:
* Doing evaluation with LLMs?
* Using a huge model?
* Worried about self-recognition?

Try an ensemble of smaller LLMs. Use a PoLL: less biased, faster, 7x cheaper. Works great on QA & Arena-hard evals https://t.co/Lhvx5GN8I8

Command R+ is underrated for everyday use 👀. The speed & the balance of good reasoning, concise answers, and nice writing style make it perhaps the best daily assistant 🔥

Just updated our benchmark to include the new Cohere Command models and DBRX! See results at

Introducing Rerank 3! Our latest model focused on powering much more complex and accurate search. It's the fastest, cheapest, and highest performance reranker that exists. We're really excited to see how this model influences RAG applications and search stacks.

I’m a huge fan of LLM efforts that emphasise multilingualism because they make AI far more inclusive and accessible. Congratulations @cohere

Multilinguality is something that is crucial for equitable utility of this technology. We want our models to work for as many people, organizations, and markets as possible. We perform strongly across 10 languages and we're eager to expand this further.

👂 @cohere Integration Package 🦜 Hot off the heels of Cohere's v5 sdk supporting tool calling and their new Command R+ model, we're excited to announce the `langchain-cohere` package, which exposes all of their integrations ranging from their chat models to model-specific

This drove me crazy for a while: We had internal experiments showing RM > LLM for evaluation, which felt really counterintuitive to me. Nice to get external confirmation, and thanks for building the benchmark @natolambert ! :)

Cohere's Chat interface with new ⌘R+ model looks... really good!
📄 search + cite
💻 web browsing
🐍 python
Watch this https://t.co/3uJkFSKKjK

mind-blown by how good ⌘ R+ multi-step tool use is 🤯 rewrites my sloppy query -> fetches numbers -> plots them with citations
