Vector Wang

@VectorWang2

Followers: 2K

Following: 573

Media: 149

Statuses: 337

PhD student in robotic manipulation, currently working on physics-aware world models for robust manipulation @RiceCompSci. Designer and developer of XLeRobot.

Houston, TX

Joined November 2021

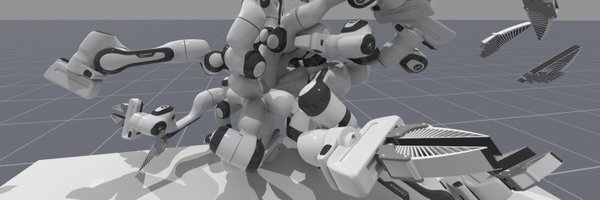

XLeRobot 0.3.0 showcases: open the fridge, get drinks, fill ice, wipe the table, clean the room, take care of plants and cats... All for $660, fully open-sourced, based on HF LeRobot. Teleop with Joy-Con, or RL/VLA. Assembly kit ready for purchase soon. Stay tuned! https://t.co/9hK0e8ufr4

9

53

306

GOAT

Today, we present a step-change in robotic AI @sundayrobotics. Introducing ACT-1: A frontier robot foundation model trained on zero robot data. - Ultra long-horizon tasks - Zero-shot generalization - Advanced dexterity 🧵->

0

0

19

So elegant. This is the perfect robot in my view so far.

5

3

69

We're introducing EnvHub to LeRobot! Upload simulation environments to the @huggingface hub, and load them in LeRobot with one line of code! `env = lerobot.make_env("username/my-env", trust_remote_code=True)` Back in 2017, @OpenAI called on the community to build Gym

12

32

180

These are the forms of the robots that can be reliably deployed into people’s homes. Not Optimus, not 1X, not Xiaopeng.

Excited to share our latest progress on DYNA-1 pre-training! 🤖 The base model now can perform diverse, dexterous tasks (laundry folding, package sorting, …) without any post-training, even in unseen environments. This powerful base also allows extremely efficient fine-tuning

9

7

74

Finally got around to implementing the software for teleoperating the detachable gripper on top of @LeRobotHF. Going to release the open-source files for it soon.

23

24

298

What’s even better: 💻 🛜 Multi-device, robot-agnostic, fully wireless, with real-time visualization and runtime mode switching; 📊 👬 Complete compatibility with LeRobot Robot/Teleoperator definitions and data pipelines

0

0

4

Amazing team from @AsteraInstitute at @seeedstudio @NVIDIARobotics @LeRobotHF home robot hackathon! ✨ VR app with stream & arm mapping 🎮 Isaac Sim BEHAVIOR env + RL 🗺️ MUSt3R-based navi & 3D reconstr 🧠 GR00T 1.5 training with Jetson Thor All open source:

4

19

75

At the hackathon right now. Incredible work from all the teams. More soon.

7

5

153

Thanks Chris for mentioning XLeRobot! Will definitely keep working on this together with everyone in the community to accelerate robotics research and bring robots closer to people’s lives.

Open source has always been a huge part of robotics, and this feels more true now than ever, with a proliferation of innovative open-source hardware and software platforms that have made robotics more accessible and dynamic than ever before, even in an age of huge valuations:

3

5

59

Running a GR00T N1.5 model for matcha pouring. Getting ready for the hackathon @seeedstudio @NVIDIARobotics @VectorWang2

6

13

124

I will be there in Mountain View. Can’t wait to see the talent shine!

Over 100 contestants joined our #EmbodiedAIHackathon in Shenzhen! 🤖🔥 From single #robot arm pick & place to dual-arm manipulation, from handling rigid objects to soft textiles, teams pushed boundaries — evolving into long-horizon actions with stronger generalization. Next

1

0

11

Recent advances in 3D reconstruction with stereo and even monocular cameras make me believe we can achieve the lowest-cost ($600 to $3,000) practical mobile home robots in the near future (3 to 5 years), instead of $30k to $50k humanoids.

Last night we successfully researched a path to get perfect point clouds on $10 stereo USB cameras at Bracket Bot. If you want to bring robots to billions of people, and work on state of the art robots, DM me

5

0

17

Why open source: with Isaac Sim, GR00T, and SmolVLA, the $660 XLeRobot can organize stuff into a drawer and zip up a bag in 2 days. Made by MakerMods @IsaacSin12 @QILIU9203 @Ryan_Resolution at the @seeedstudio @huggingface @NVIDIARobotics Home Robot Hackathon in Shenzhen (Bay Area next)

1

27

240

Finally seeing some decent research using the SO101 as an experiment platform!

What's the right architecture for a VLA? VLM + custom action heads (π₀)? VLM with special discrete action tokens (OpenVLA)? Custom design on top of the VLM (OpenVLA-OFT)? Or... VLM with ZERO modifications? Just predict action as text. The results will surprise you. VLA-0:

1

3

65