Quentin de Laroussilhe

@Underflow404

Followers: 269 | Following: 63 | Media: 5 | Statuses: 60

Technical Lead Manager, Google ML - I teach machines how to learn. Interested in artificial intelligence, ML and data science.

/home/zurich

Joined June 2010

Hello from the #DLIndaba2022 conference with my colleagues @MehadiSH and @RachDean_Google at the @Google booth! If you're interested in learning about our opportunities in the Machine Learning space, we'd love to chat with you! Pro tip: Ask for our QR code for opportunities ;)

RT @zama_fhe: Our friends at @Optalysys did a tutorial on using Concrete to create an encrypted version of Conway's Game of Life! Check it…

RT @andrewgwils: Does knowledge distillation really work? While distillation can improve student generalization, we show it is extremely d…

RT @TheGradient: 1/5 Self-Distillation loop (feeding predictions as new target values & retraining) improves test accuracy. But why? We sho…

RT @obousquet: Google AI Residency 2020 applications are open, with positions in many different locations including Europe and Africa. A fa…

RT @obousquet: The next challenge for image classification: build a generic representation that can be adapted to any task with very few tr…

RT @neilhoulsby: Code released to adapt BERT using few parameters. Can be used to adapt one model to many tasks. Catastrophic forgetting no…

RT @computingnature: Story time! Single neurons in the brain can’t be depended on for reliable information. Here are some neurons from our…

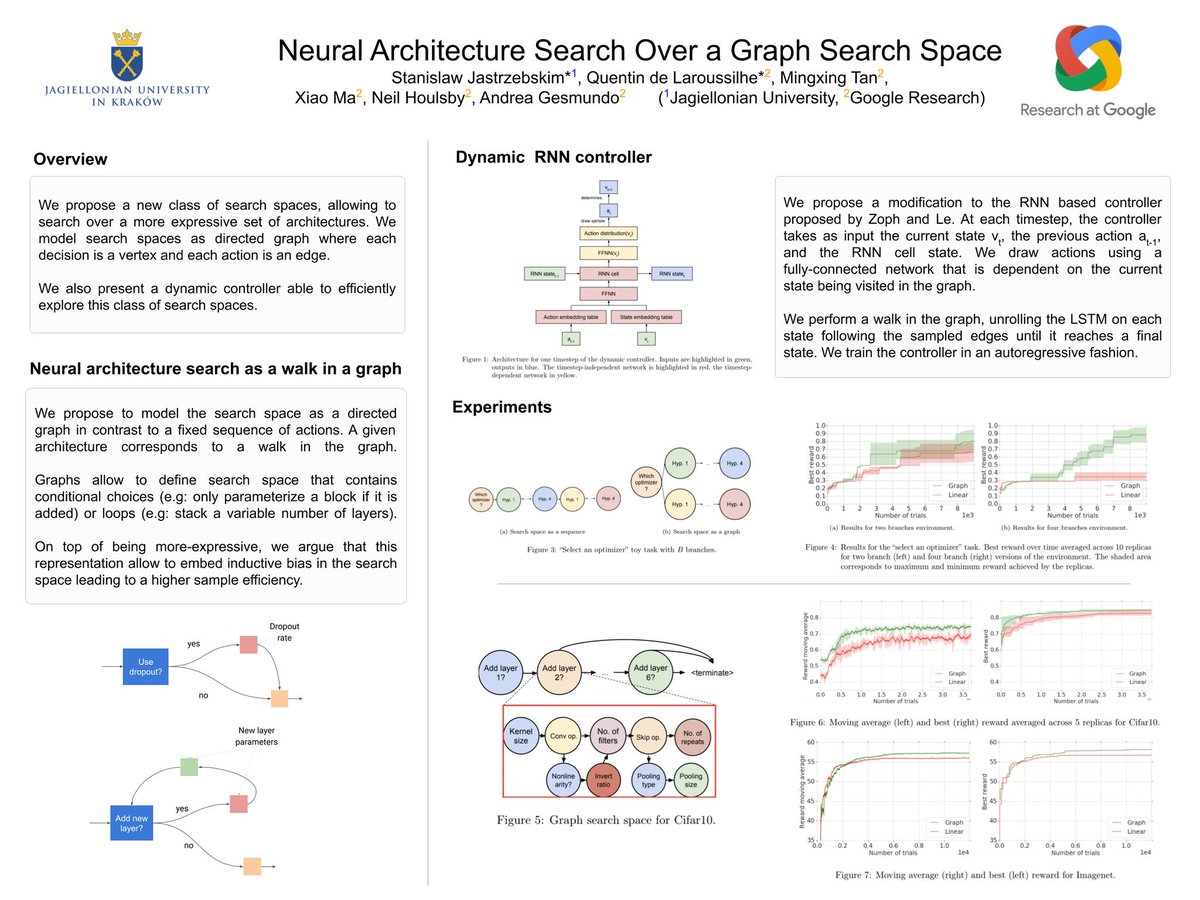

Drop by the AutoML workshop tomorrow at #ICML2019 to discuss our work on graph search spaces for NAS!

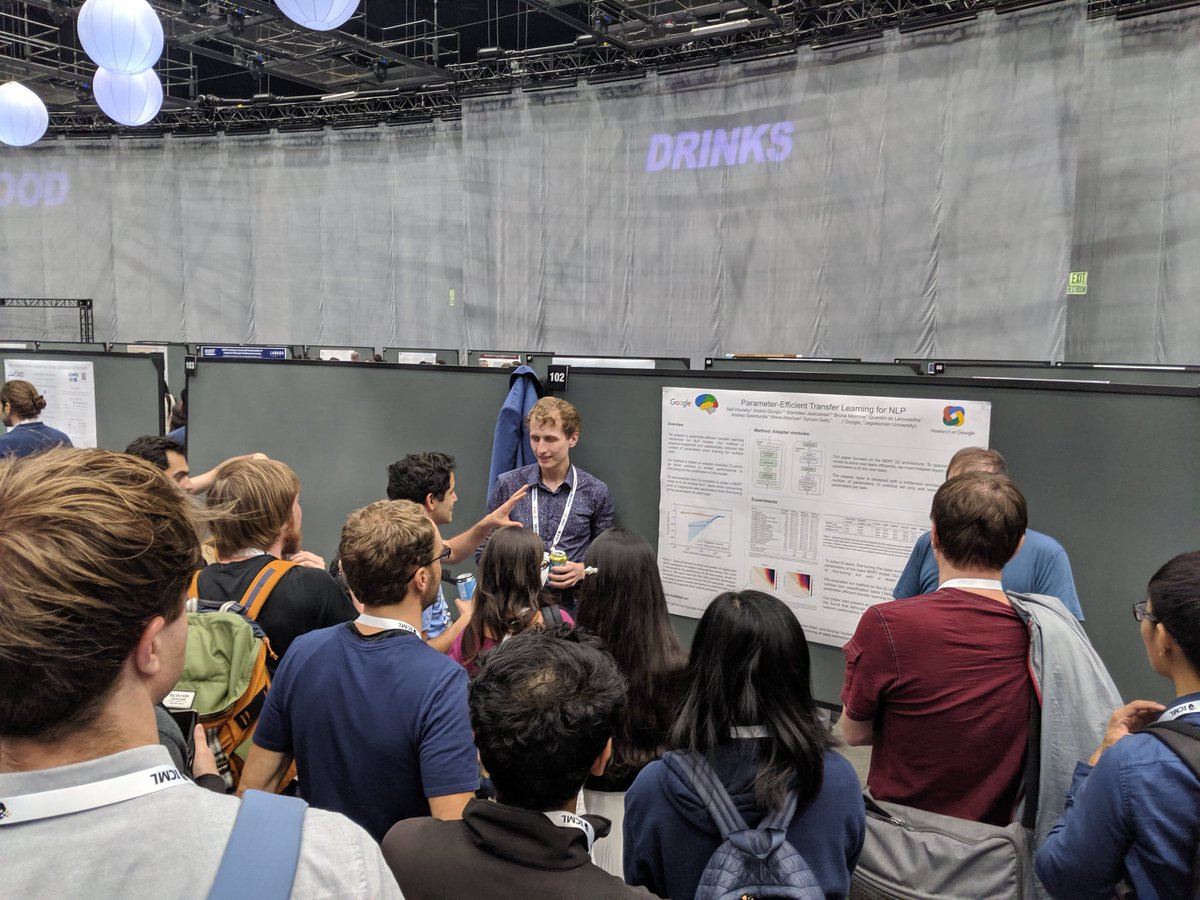

Are you serving multiple fine-tuned NLP models? We're presenting how to cut the RAM needed by a few orders of magnitude at poster 102! #ICML2019 @kudkudakpl

If you are interested in training NLP models to solve N tasks in a parameter-efficient fashion, @kudkudakpl will present our results on Thursday at 11:20am in room 104 #ICML2019. Poster #102 at 6:30pm. Joint work w/ @neilhoulsby, Andrei, Bruna, @agesmundo, Mona, @sylvain_gelly

RT @MichaelOberst: The Whova app schedule at #ICML2019 does not appear to be robust to adversarial words like “lunch”.

RT @OlivierBachem: I added a summary of all our talks, posters and demos at #ICML2019 to Have a look if you are in…

RT @JeremiahHarmsen: BERT is in #TFHub and here's a runnable notebook! 🎉 More BERT modules at .

RT @neilhoulsby: It turns out that only a few parameters need to be trained to fine-tune huge text transformer models. Our latest paper is…

RT @googlestudents: The projects #GoogleInterns tackle and how they apply their new skills after finishing their internships often amaze us…

RT @OlivierBachem: Do you want to get an overview over recent advances in autoencoders without reading 30+ papers? Check out our review pap…
