Tony Wang

@TonyWangIV

Followers

677

Following

4K

Media

22

Statuses

274

Monitoring AI @ US CAISI, PhD student @MIT_CSAIL. Engineer at heart.

Washington, DC

Joined August 2017

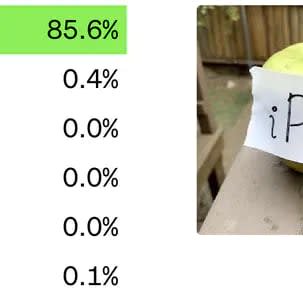

Sharing a new paper by some collaborators and myself. We show that it is possible to covertly jailbreak models by leveraging finetuning access. We call this Covert Malicious Finetuning (CMFT). If this vulnerability exists in future more powerful models, then safe deployment of…

New paper! We introduce Covert Malicious Finetuning (CMFT), a method for jailbreaking language models via fine-tuning that avoids detection. We use our method to covertly jailbreak GPT-4 via the OpenAI finetuning API.

Some more thoughts on this paper here:

lesswrong.com

This post discusses our recent paper Covert Malicious Finetuning: Challenges in Safeguarding LLM Adaptation and comments on its implications for AI safety.

RT @OwainEvans_UK: New paper, surprising result: We finetune an LLM on just (x,y) pairs from an unknown function f. Remarkably, the LLM can…

Roughly 18 months ago, my collaborators and I discovered that supposedly superhuman Go AIs can be defeated by humans using a simple adversarial strategy. In the time since, we've been testing a few baseline techniques for defending against this exploit. Unfortunately, in our new…

🛡 Is AI robustness possible, or are adversarial attacks unavoidable? We tested three defenses to make superhuman Go AIs robust. Our defenses manage to protect against known threats, but unfortunately new adversaries bypass them, sometimes using qualitatively new attacks! 🧵

RT @bshlgrs: ARC-AGI’s been hyped over the last week as a benchmark that LLMs can’t solve. This claim triggered my dear coworker Ryan Green…

RT @visakanv: The point isn’t to achieve the million views on YouTube. That’s truly just the exhaust coming out of the creative process. T…

Finally got around to filling out @robbensinger's AI x-risk chart!

Here are some of my views on AI x-risk. I'm pretty sure these discussions would go way better if there was less "are you in the Rightthink Tribe, or the Wrongthink Tribe?", and more focus on specific claims. Maybe share your own version of this image, and start a conversation?

Very cool newsletter. Was pleasantly surprised that there seem to be people in China in positions of power that take AI safety seriously.

"What's going on with AI Safety in China?" – a question I've often encountered in the past year. Check out "AI Safety in China" - a newsletter by Concordia AI, sharing insights and updates on China's AI safety and governance landscape