Suvansh Sanjeev

@SuvanshSanjeev

Followers: 2K
Following: 1K
Media: 106
Statuses: 521

Research @OpenAI. ex-🤖 @berkeley_ai, @CMU_Robotics. Teamwork makes the dream work, and Dreamworks makes Shrek.

Joined February 2016

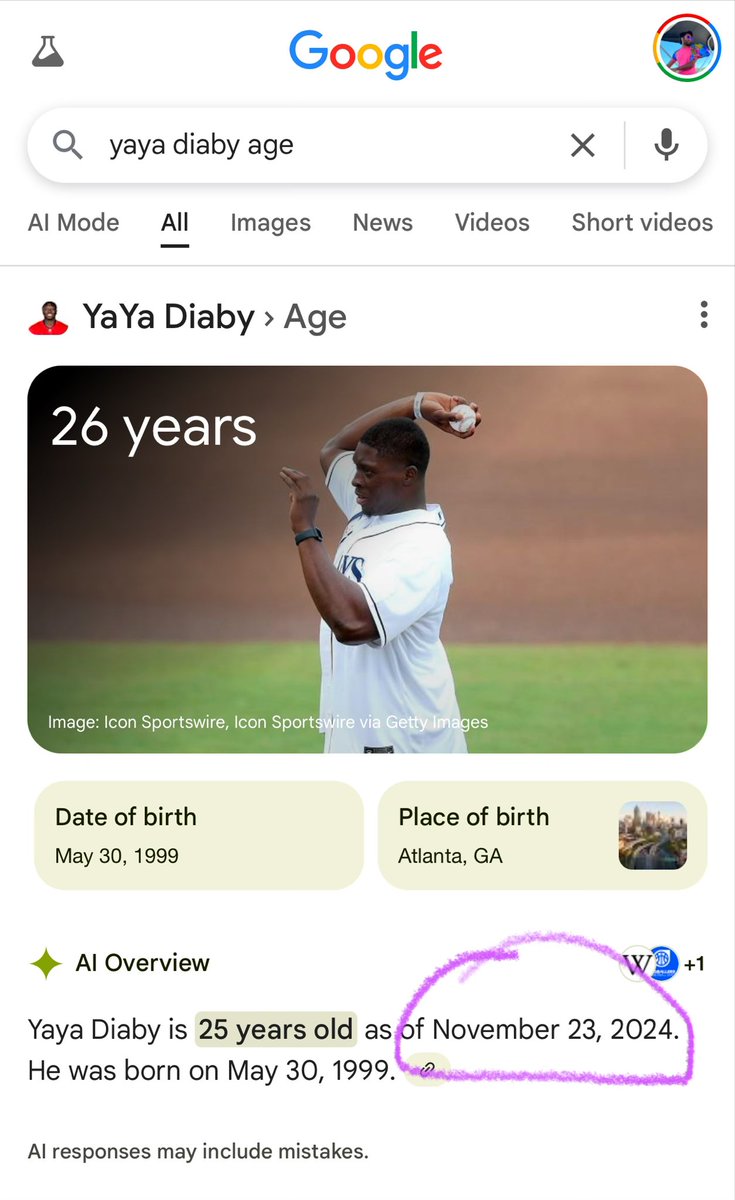

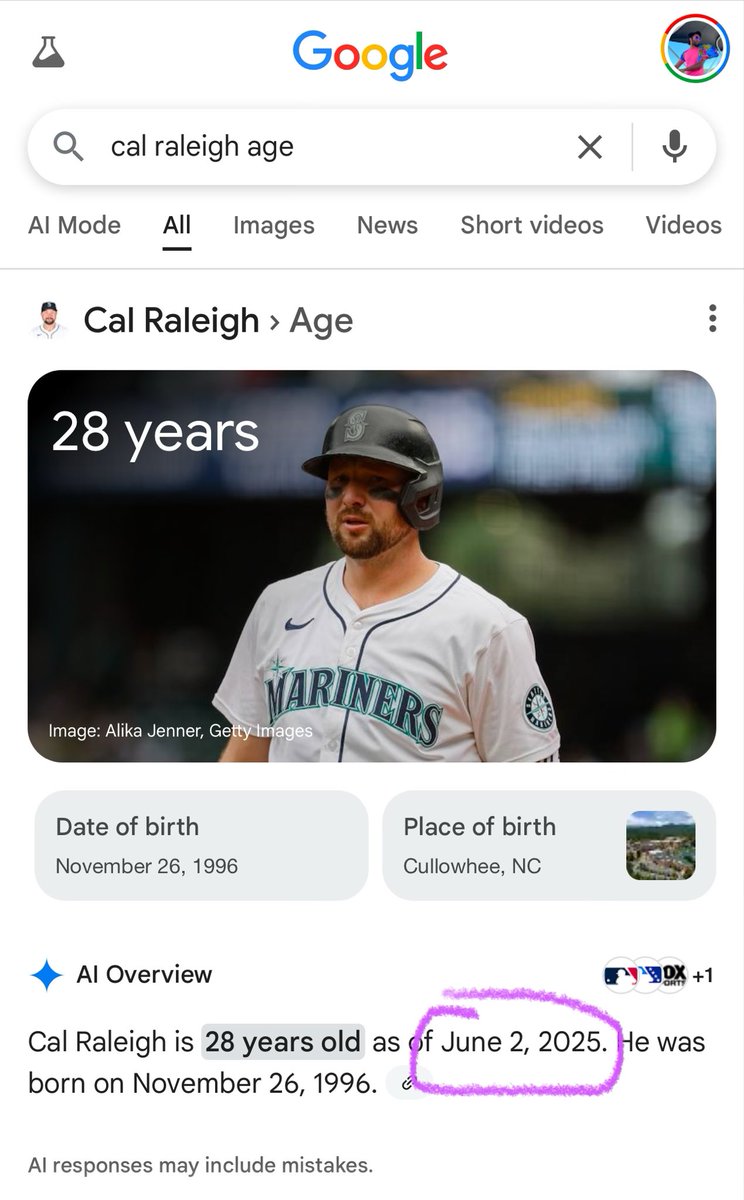

GPT-5 is what you’ve been waiting for – it defines and extends the cost-intelligence frontier across model sizes today. it’s been a long journey, and we’ve landed pivotal improvements across many axes in the whole GPT-5 family. and hey no more model picker (by default)!


@Amaury_Hayat Finally, GPT-5 Nano was the first model to correctly answer a particular number theory problem. Its reasoning was invalid and the success was a numerical coincidence. This goes to show the importance and challenge of making sure answers are unguessable.


RT @julieswangg: GPT-5 is here! it's been a wild ride, and i've been honored to work with the dream team (s/o @j_mcgraph @strongduality) to…


yooo love our team 😍.

GPT-5 is proof that synthetic data just keeps working! And that OpenAI has the best synthetic data team in the world 👁️. @SebastienBubeck the team has our eyeballs on you! 🙌


RT @BorisMPower: The 20b model is quite possibly the densest packed intelligence created in the universe so far! 🚀


hallman is a certified model wizard

Excited to share gpt-oss with the world! We are releasing two open-weights models: one with 5.1B active and 117B total params, and one with 3.6B active and 20.9B total params. They are very good for their size. In particular, running 20B locally is a truly magical experience. Enjoy 🫡


damn this is such an awe-inspiring release from GDM. real-time interactive rendering really makes your imagination run wild. notably they're positioning it as a step closer to pragmatic utility beyond gaming!

Introducing Genie 3, our state-of-the-art world model that generates interactive worlds from text, enabling real-time interaction at 24 fps with minutes-long consistency at 720p. 🧵👇


this was a first-class effort worked on by amazing researchers, and the results speak for themselves. I'm proud of OpenAI for this release – open-weights models are huge for Broadly Distributing the Benefits of AI research. glad this model made it out alive 🙃


RT @tszzl: pretraining is an elegant science, done by mathematicians who sit in cold rooms writing optimization theory on blackboards, engi…


if you could buy and sell intelligence futures (“1B tokens of GPT-5 quality in 2027”), could this incentivize more ambitious open source training runs? insightful exploratory thread proposing intelligence and compute markets from @stevenydc, who i’ve had the pleasure of working with.

AI companies are the new utilities. Compute goes in → intelligence comes out → distribute through APIs. But unlike power companies who can stockpile coal, and hedge natural gas futures, OpenAI can't stockpile compute. Every idle GPU second = money burned. Use it or lose it.


lol wired couldn’t be bothered to finish the sentence it’s quoting. hint: it flips the connotation on its head.

SCOOP: OpenAI CEO Sam Altman is hitting back at Meta CEO Mark Zuckerberg’s recent AI talent poaching spree. "Missionaries will beat mercenaries," Altman wrote in an internal memo to OpenAI researchers. WIRED has obtained it:


not to mention you gotta meet the economically valuable tasks where they are – AGI will not be made in a cave. such a hypothetical effort would generate data, environments, and product surfaces that are all plausibly on the path to AGI.

Many saying this shows that no AGI is imminent. But IMO what it shows is that "AGI" is not a particularly *practical* milestone. Suppose you have human-level intelligence. Great, what new problems can that solve? Designing a CPU is just step 0 of building the actual software.
