Ruohan Zhang

@RuohanZhang76

Followers: 1,048 · Following: 425 · Media: 6 · Statuses: 75

Postdoc @stanfordsvl @StanfordAILab ; robot, brain, art; climbing, soccer, cooking, music, dance

Joined September 2021


Pinned Tweet

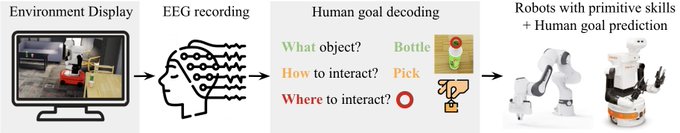

Introducing our new work @corl_conf 2023, a novel brain-robot interface system: NOIR (Neural Signal Operated Intelligent Robots).

Website:

Paper:

🧠🤖

20

186

757

Project co-led by the amazing @sharonal_lee, @minjune_hwang, @ayano_hiranaka, @chenwang_j

With @wensi_ai, @RyanRTJJ, @Shreyagupta08, Yilun Hao, @GabraelLevine, @RuohanGao1

Advised by Prof. Anthony Norcia, @drfeifei, @jiajunwu_cs

2

1

18

Proud to be part of the amazing BEHAVIOR team, hope to see you at our Tutorial on Monday!

Do you want to learn to train and evaluate embodied AI solutions for 1000 household tasks in a realistic simulator? Join our BEHAVIOR Tutorial at #ECCV2022: Benchmarking Embodied AI Solutions in Natural Tasks!

Time: Monday, Oct 24th 14:00 local time (4:00 Pacific Time)

10

69

424

0

1

14

Check out our recent article @gradientpub for training decision AI with human guidance! The original JAAMAS review paper was written with Faraz Torabi @GarrettWarnell @PeterStone_TX.

How do humans transfer their knowledge and skills to artificial decision-making agents?

What kind of knowledge and skills should humans provide and in what format?

@RuohanZhang76, a postdoc at @StanfordSVL and @StanfordAILab, provides a summary: 👇

1

4

15

0

1

11

Excited to be part of this NeurIPS workshop. If you are interested in attention, please consider submitting your work!

0

1

10

Check out our new work on using foundation models for robot manipulation!

0

0

8

In honor of our upcoming @NeurIPSConf workshop on "All Things Attention", and the fact that the deadline for you to submit your work has been extended to **Oct 3**, I present a thread on attention and decision making in AI!

The Submission Deadline has been extended to Oct 3, 2022 (11:59PM AoE) @NeurIPSConf

Consider submitting your work to our workshop @attentioneurips

See details here:

1

2

5

1

2

7

Real world performance is amazing.

0

1

4

Glad to be part of the team, and thanks @sunfanyun for the lead. Come talk to us at @NeurIPSConf this year!

How can we effectively predict the dynamics of multi-agent systems?

💥 Identify the relationships. 💥

We are excited to share IMMA at #Neurips2022, a SOTA forward prediction model that infers agent relationships -- simply by observing their behavior.

1/

2

4

24

0

0

3

@chenwang_j it’s a pleasure to work with you and this team. The key insight is that for robot learning from humans, the data for training a high-level planner and low-level visuomotor skills can be different. PLAY data is a good candidate for learning to plan.

1

0

1

Amazing work!

Our language decoding paper (@AmandaLeBel3 @shaileeejain @alex_ander) is out! We found that it is possible to use functional MRI scans to predict the words that a user was hearing or imagining when the scans were collected

15

81

232

0

0

1