Michael Poli

@MichaelPoli6

Followers: 3K, Following: 2K, Media: 63, Statuses: 420

AI, numerics and systems. Co-founder @RadicalNumerics.

Joined August 2018

Life update: I started Radical Numerics with Stefano Massaroli, Armin Thomas, Eric Nguyen, and a fantastic team of engineers and researchers. We are building the engine for recursive self‑improvement (RSI): AI that designs and refines AI, accelerating discovery across science and

8

23

230

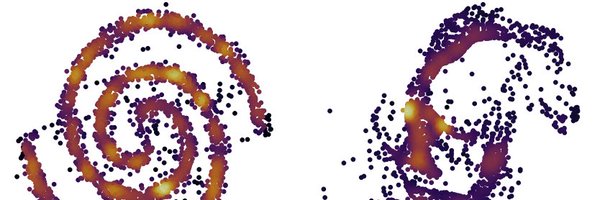

We just released the largest open-source diffusion language model (RND1). RND1 is important to me on a personal level: it symbolizes our commitment to open-source exploration of radically different designs for AI at scale — training objectives, architectures, domains. There is

Introducing RND1, the most powerful base diffusion language model (DLM) to date. RND1 (Radical Numerics Diffusion) is an experimental DLM with 30B params (3B active) with a sparse MoE architecture. We are making it open source, releasing weights, training details, and code to

1

3

20

Fun explainer about Evo2 and its architecture, StripedHyena 2. Hybrids are so 2023, multi-hybrids are the future!

🧬 Evo 2 reads a whole genome like a book. It can see patterns from a few DNA letters to entire chromosomes, thanks to a mix of zoom-lenses: 🔍 Close-up (motifs) 🔎 Mid-range (genes) 🌍 Global (long-range control) Details in 🧵

0

3

20

A careful study of architecture transfer dynamics, to be presented as oral at NeurIPS 2025 (top 0.3% of all submissions). Advances in grafting methods significantly accelerate research on architecture design, scaling recipes, and even post-training.

Grafting Diffusion Transformers accepted to #NeurIPS2025 as an Oral! We have lots of interesting analysis, a test bed for model grafting, and insights🚀 📄Paper: https://t.co/OjsrOZi7in 🌎Website:

0

3

10

Welcome to the age of generative genome design! In 1977, Sanger et al. sequenced the first genome—of phage ΦX174. Today, led by @samuelhking, we report the first AI-generated genomes. Using ΦX174 as a template, we made novel, high-fitness phages with genome language models. 🧵

33

212

1K

I believe LLMs will inevitably surpass humans in coding. Let us think about how humans actually learn to code. Human learning of coding has two stages. First comes memorization and imitation: learning syntax and copying good projects. Then comes trial and error: writing code,

125

115

1K

Radical Numerics' @exnx at @TEDTalks, describing how Evo models are paving the way to generative genomics

✨ Excited to share a few life updates! 🎤 My TED Talk is now live! I shared the origin story of Evo, titled: "How AI could generate new life forms" TED talk: https://t.co/dh7iWcPaBu ✍️ I wrote a blog post about what it’s *really* like to deliver a TED talk blog:

0

0

8

@osanseviero great release, tho you forgot to include the SoTA in the chart: LFM2-350M @LiquidAI_

7

19

168

I am very excited to take up the role of chief scientist for Meta Superintelligence Labs. Looking forward to building ASI and aligning it to empower people with the amazing team here. Let’s build!

445

336

9K

It’s time for the American AI community to wake up, drop the "open is not safe" bullshit, and return to its roots: open science and open-source AI, powered by an unmatched community of frontier labs, big tech, startups, universities, and non‑profits. If we don’t, we’ll be forced

48

78

572

Getting mem-bound kernels to speed-of-light isn't a dark art, it's just about getting a couple of details right. We wrote a tutorial on how to do this, with code you can directly use. Thanks to the new CuTe-DSL, we can hit speed-of-light without a single line of CUDA C++.

🦆🚀QuACK🦆🚀: new SOL mem-bound kernel library without a single line of CUDA C++ all straight in Python thanks to CuTe-DSL. On H100 with 3TB/s, it performs 33%-50% faster than highly optimized libraries like PyTorch's torch.compile and Liger. 🤯 With @tedzadouri and @tri_dao

7

58

520

It's easy (and fun!) to get nerdsniped by complex architecture designs. But over the years, I've seen hybrid gated convolutions always come out on top in the right head-to-head comparisons. The team brings a new suite of StripedHyena-style decoder models, in the form of SLMs

Liquid AI open-sources a new generation of edge LLMs! 🥳 I'm so happy to contribute to the open-source community with this release on @huggingface! LFM2 is a new architecture that combines best-in-class inference speed and quality into 350M, 700M, and 1.2B models.

2

13

47

One of the best ways to reduce LLM latency is by fusing all computation and communication into a single GPU megakernel. But writing megakernels by hand is extremely hard. 🚀Introducing Mirage Persistent Kernel (MPK), a compiler that automatically transforms LLMs into optimized

14

127

772

Excited about this line of work. Pretrained models are surprisingly resilient to architecture modification, opening up entirely new options for customization and optimization: operator swapping, rewiring depth into width, and more.

1/ Model architectures have been mostly treated as fixed post-training. 🌱 Introducing Grafting: A new way to edit pretrained diffusion transformers, allowing us to customize architectural designs on a small compute budget. 🌎 https://t.co/fjOTVqfVZr Co-led with @MichaelPoli6

0

3

17

Excited to be presenting our new work, HMAR: Efficient Hierarchical Masked Auto-Regressive Image Generation, at #CVPR2025 this week. VAR (Visual Autoregressive Modelling) introduced a very nice way to formulate autoregressive image generation as a next-scale prediction task (from

1

25

56

When we put lots of text (eg a code repo) into LLM context, cost soars b/c of the KV cache’s size. What if we trained a smaller KV cache for our documents offline? Using a test-time training recipe we call self-study, we find that this can reduce cache memory on avg 39x

16

75

330