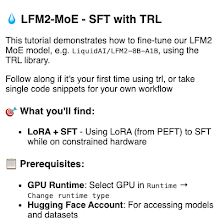

Liquid AI

@LiquidAI_

Followers: 16K · Following: 413 · Media: 79 · Statuses: 327

Build efficient general-purpose AI at every scale.

Cambridge, MA

Joined March 2023

Introducing Liquid Nanos ⚛️ — a new family of extremely tiny task-specific models that deliver GPT-4o-class performance while running directly on phones, laptops, cars, embedded devices, and GPUs with the lowest latency and fastest generation speed. > model size: 350M to 2.6B >

30

130

1K

New LFM2 release 🥳 It's a Japanese PII extractor with only 350M parameters. It's extremely fast and on par with GPT-5 (!) in terms of quality. Check it out, it's available today on @huggingface!

13

24

180

We have a new nano LFM that is on-par with GPT-5 on data extraction with 350M parameters. Introducing LFM2-350M-PII-Extract-JP 🇯🇵 Extracts personally identifiable information (PII) from Japanese text → returns structured JSON for on-device masking of sensitive data. Delivers

14

35

394
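The flow described in the post above (Japanese text in, structured JSON out, then masking on the device) could look roughly like the sketch below. It covers only the masking step and does not call the model. The JSON shape (category names mapped to lists of extracted strings), the keys `human_name` and `phone_number`, the helper `mask_pii`, and the sample text are all assumptions made for illustration; check the model card for the actual output schema.

```python
import json


def mask_pii(text: str, extracted_json: str, mask: str = "[MASKED]") -> str:
    """Replace every extracted PII string found in `text` with `mask`.

    Assumes (hypothetically) that the extractor returns a JSON object
    mapping category names to lists of strings copied from the input.
    """
    entities = json.loads(extracted_json)
    # Gather unique values from all categories. Longest first, so a short
    # value that is a substring of a longer one does not split the longer match.
    values = sorted(
        {v for items in entities.values() for v in items if v},
        key=len,
        reverse=True,
    )
    for value in values:
        text = text.replace(value, mask)
    return text


# Hypothetical input and extractor output, for illustration only.
sample_text = "山田太郎の電話番号は090-1234-5678です。"
sample_output = '{"human_name": ["山田太郎"], "phone_number": ["090-1234-5678"]}'

masked = mask_pii(sample_text, sample_output)
print(masked)
```

Plain string replacement keeps the sketch dependency-free. A real pipeline would probably also need to handle malformed JSON and values that do not appear verbatim in the text.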

Download on HF: https://t.co/qBpYCvN4Wi Deploy with LEAP: https://t.co/So08EizY3o Join our Discord channel to get live updates on the latest from Liquid: https://discord.gg/liquid-ai

leap.liquid.ai

Liquid Leap empowers AI developers to deploy the most capable edge models with one command.

0

1

10

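Good morning! Introducing LFM2-350M-PII-Extract-JP, the newest addition to the Liquid Nanos family. It extracts personally identifiable information (PII) from Japanese text and outputs structured JSON that can be used for on-device masking of sensitive data.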

1

5

17

I added LFM 2 8B A1B in @LocallyAIApp for iPhone 17 Pro and iPhone Air. It is the first mixture-of-experts model by @LiquidAI_: 8B total parameters (1B active), with performance similar to 3-4B models but the speed of a 1B model. Runs great on the 17 Pro with Apple MLX.

7

11

109

Meet LFM2-8B-A1B by @LiquidAI_
- 8B total and 1B active params 🐘
- 5x faster on CPUs and GPUs ⚡️
- Perfect for fast, private, edge 📱/💻/🚗/🤖

Meet LFM2-8B-A1B, our first on-device Mixture-of-Experts (MoE)! 🐘 > LFM2-8B-A1B is the best on-device MoE in terms of both quality and speed. > Performance of a 3B-4B model class, with up to 5x faster inference profile on CPUs and GPUs. > Quantized variants fit comfortably on

2

4

41

Enjoy our even better on-device model! 🐘 Running on @amd AI PCs with the fastest inference profile!

2

6

54

Meet LFM2-8B-A1B, our first on-device Mixture-of-Experts (MoE)! 🐘 > LFM2-8B-A1B is the best on-device MoE in terms of both quality and speed. > Performance of a 3B-4B model class, with up to 5x faster inference profile on CPUs and GPUs. > Quantized variants fit comfortably on

13

91

506

Full Blog: https://t.co/3mXMiwBzrS HF: https://t.co/xUs9IDxCiZ GGUF: https://t.co/1zR457VpV8 Fine-tune it: https://t.co/jNNbbAYpXr n=6

colab.research.google.com

Colab notebook

1

2

31

In addition to integrating LFM2-8B-A1B on llama.cpp and ExecuTorch to validate inference efficiency on CPU-only devices, we’ve also integrated the model into vLLM to deploy on GPU in both single-request and online batched settings. Our 8B LFM2 MoE model not only outperforms

1

1

15

Across devices on CPU, LFM2-8B-A1B is considerably faster than the fastest variants of Qwen3-1.7B, IBM Granite 4.0, and others. 4/n

2

2

28

Architecture. Most MoE research focuses on cloud models in large-scale batch serving settings. For on-device applications, the key is to optimize latency and energy consumption under strict memory requirements. LFM2-8B-A1B is one of the first models to challenge the common belief

1

1

30

LFM2-8B-A1B has greater knowledge capacity than competitive models and is trained to provide quality inference across a variety of capabilities. Including: > Knowledge > Instruction following > Mathematics > Language translation 2/n

1

3

35

The last 90 days we shipped hard at @LiquidAI_.🚢 🐘 LFM2 tiny instances. fastest on-device models 350M, 700M, 1.2B with a flagship new architecture. 🐸 LEAP. our device ai platform, from use-case to model deployment on phones and laptops in 5min. 👁️ LFM2 Vision language

5

14

104

Today we are broadening access to local AI with the launch of Apollo on Android. The @apolloaiapp is our low-latency cloud-free “playground in your pocket” that allows users to instantly access fast, effective AI - without sacrificing privacy or security. Together, Apollo and

🔉🤖 The announcement you’ve been waiting for is here: Apollo is available on Android! Now you can easily access all the local, secure AI technology you’ve loved on iOS from whichever phone is in your pocket. Apollo’s low-latency, cloud-free platform and library of small models

3

26

154
