Jacob Portes

@JacobianNeuro

Followers: 990 · Following: 4K · Media: 22 · Statuses: 522

Research Scientist @MosaicMLxDatabricks. I like it when brains inspire AI 🧠+🖥️

NYC

Joined April 2021

@answerdotai @LightOnIO Also nice to see that our work on MosaicBERT was a part of this journey @alexrtrott @sam_havens @nikhilsard @danielking36 @ml_hardware @moinnadeem @jefrankle ModernBERT repo 🖥️: https://t.co/4n5u8qaMgy MosaicBERT paper 📜 https://t.co/R9nlUhnLml

4

4

17

One of my favorite results for π*0.6 is this video: 13 hours of making lattes, Americanos, and espresso for folks at our office in San Francisco.

11

28

240

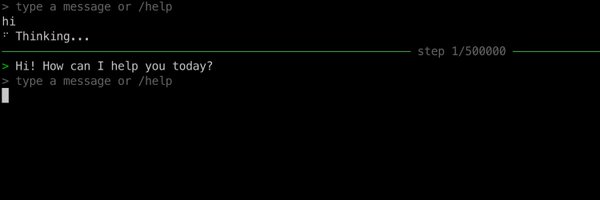

i wrote some thoughts on the missing context of grep and semantic search amidst Cognition and Cursor releasing somewhat opposing views on retrieval:

nuss-and-bolts.com

the answer is...it depends

4

7

29

Big personal update 💥 After founding & exiting two companies (a database pioneer & a CDP powerhouse), I’m starting a new chapter: I've joined @databricks! I’m here to build and lead their brand-new engineering office, right here in NYC 🗽

11

3

40

Introducing GEN-0, our latest 10B+ foundation model for robots ⏱️ built on Harmonic Reasoning, new architecture that can think & act seamlessly 📈 strong scaling laws: more pretraining & model size = better 🌍 unprecedented corpus of 270,000+ hrs of dexterous data Read more 👇

49

284

1K

As a former neuroscientist now in AI research, I loved this New Yorker piece by @jsomers that explores whether and how LLMs are good models of cognition. Highly recommend!

James Somers asks neuroscientists and artificial-intelligence researchers about the uncanny similarities between large language models and the human brain. https://t.co/6oz5LB9BVD

0

0

3

I guess we are now very close to open-weights vs closed source parity. Can't test it since I am traveling and my laptop broke (😭), but many people say it's better than Sonnet/Gemini/Grok. Very exciting times!

🚀 Hello, Kimi K2 Thinking! The Open-Source Thinking Agent Model is here. 🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%) 🔹 Executes up to 200 – 300 sequential tool calls without human interference 🔹 Excels in reasoning, agentic search, and coding 🔹 256K context window Built

9

18

326

Many AI agent problems are really just information retrieval problems. If the agent has a better way to find and comb through data performantly, you will get far better results. Compute is fungible so you can use it during indexing and processing or you can use later to crank

By spending lots of compute at indexing time, you can reuse that computation to get better performance without increasing inference-time compute! Embeddings are the simplest mechanism that achieves this!

42

38

356

After ~4 years building SOTA models & datasets, we're sharing everything we learned in ⚡The Smol Training Playbook We cover the full LLM cycle: designing ablations, choosing an architecture, curating data, post-training, and building solid infrastructure. We'll help you

36

161

1K

Looking forward to what you guys build @lindensli!

Generalists are useful, but it’s not enough to be smart. Advances come from specialists, whether human or machine. To have an edge, agents need specific expertise, within specific companies, built on models trained on specific data. We call this Specific Intelligence. It's

0

0

1

Cool work from @ReeceShuttle et al. trying to understand the differences between LoRA vs. full finetuning

🧵 LoRA vs full fine-tuning: same performance ≠ same solution. Our NeurIPS ‘25 paper 🎉shows that LoRA and full fine-tuning, even when equally well fit, learn structurally different solutions and that LoRA forgets less and can be made even better (lesser forgetting) by a simple

0

1

4

immensely proud of the team for our best model yet. grateful to be able to work with such a strong team of researchers who are always curious and willing to explore the untrodden path https://t.co/Grkgy4vaqf

14

19

327

The application link can be found here: https://t.co/4SxJLzw2Ts I've also opened up my DMs =)

databricks.com

PhD GenAI Research Scientist Intern, San Francisco, California. Join us! Together we can use data to solve the challenges of tomorrow

0

0

1

Our team at Databricks Research is ramping up internship applications. If you are a PhD student doing research in RL training, multimodal models, information retrieval, evaluation, and coding and data science agents, feel free to DM me!

My team is hiring AI research interns for summer 2026 at Databricks! Join us to learn about AI use cases at thousands of companies, and contribute to making it easier for anyone to build specialized AI agents and models for difficult tasks.

6

17

171

Highly recommend giving this report a read!

🪩The one and only @stateofaireport 2025 is live! 🪩 It’s been a monumental 12 months for AI. Our 8th annual report is the most comprehensive it's ever been, covering what you *need* to know about research, industry, politics, safety and our new usage data. My highlight reel:

1

0

1

Our paper showing that with a general-purpose RLVR recipe, we can get SOTA on the BIRD benchmark is out: https://t.co/z0sbcysvCC Our hybrid approach performs offline RL and online RL to fine-tune a 32B model. This, along with self-consistency, was sufficient to get us to SOTA.

2

5

18

Tractors plow our fields. Cranes build our cities. Satellites link us together. Civilization runs on machines. But what do our machines run on? Announcing Root Access: the Applied AI company for machines.

14

15

82

Really excited to share that FreshStack has been accepted at #neurips25 D&B Track (poster)! 🥁🥁 Huge congratulations to all my @DbrxMosaicAI co-authors! Time to see you in San Diego! 🍻

3

10

64