Helping Hands Lab @ Northeastern

@HelpingHandsLab

Followers: 313 · Following: 166 · Media: 9 · Statuses: 91

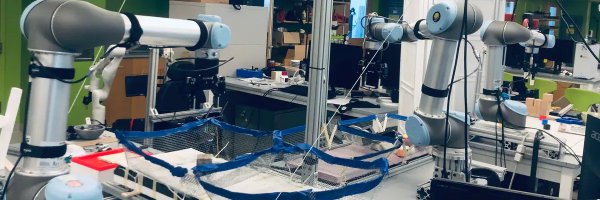

🤖 Robotic Manipulation | Reinforcement Learning | PI Rob Platt @RobotPlatt @KhouryCollege @Northeastern

Boston, Massachusetts

Joined March 2022

SO(3)-equivariant policy learning in the RGB space needs much more exploration. Really like how this work streamlines the pipeline while preserving full symmetry. Amazing work from Boce. Highly recommend checking it out. See you at #NeurIPS2025!

Closed-loop visuomotor control with wrist cameras is widely adopted and powerful, but leveraging full 3D symmetry from only RGB is still hard. Introducing our #NeurIPS2025 Spotlight paper, Image-to-Sphere Policy (ISP), for equivariant policy learning from eye-in-hand RGB images.

Equivariant policies typically require depth for a symmetric representation. What if we only have a wrist-mounted RGB camera? ISP projects the RGB image onto a sphere for 3D equivariant reasoning. @boce_hu and I will be at #NeurIPS2025 to present this paper at Friday's Poster Session 6, #2315!
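
ISP's exact projection is described in the paper. As a rough illustration of what "projecting an RGB image onto a sphere" can mean, the minimal sketch below lifts a pixel to a unit-length viewing ray using a standard pinhole camera model. The function name `pixel_to_sphere` and the intrinsics `fx, fy, cx, cy` are hypothetical assumptions for illustration, not ISP's implementation.

```python
import math

def pixel_to_sphere(u, v, fx, fy, cx, cy):
    """Map pixel (u, v) to a unit-length viewing ray in the camera frame.

    Assumes a pinhole camera with focal lengths fx, fy and principal point
    (cx, cy); these intrinsics are illustrative, not taken from ISP.
    """
    # Ray through the pixel on the z = 1 image plane: K^{-1} [u, v, 1]^T
    x = (u - cx) / fx
    y = (v - cy) / fy
    z = 1.0
    # Normalizing the ray places it on the unit sphere S^2
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)

# The principal point maps to the point on the sphere along the optical axis.
print(pixel_to_sphere(320, 240, 500.0, 500.0, 320, 240))  # (0.0, 0.0, 1.0)
```

Once pixel features live on the sphere, a 3D rotation of the camera acts on them as a rotation of the sphere, which is what makes SO(3)-equivariant reasoning possible in principle.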

Our new #ICML2025 paper, led by @ZhaoHaibo47588, presents a hierarchical equivariant architecture that enables multi-level sample efficiency in visuomotor policy learning. Check it out for more details!

Excited to share our #ICML2025 paper, Hierarchical Equivariant Policy via Frame Transfer. Our Frame Transfer interface imposes the high-level decision as a coordinate frame change in the low-level policy, boosting sim performance by 20%+ and enabling complex manipulation with 30 demos.
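
One way to read "a coordinate frame change in the low-level" is that the high-level policy outputs a frame and the low-level policy expresses its action relative to that frame. The planar sketch below assumes an SE(2) frame `(x, y, theta)` and a point-valued low-level action; both the function `frame_to_world` and these conventions are hypothetical illustrations, not the paper's architecture.

```python
import math

def frame_to_world(frame, local_point):
    """Express a point given in a high-level frame in world coordinates.

    frame = (x, y, theta): a hypothetical planar (SE(2)) frame chosen by the
    high-level policy; local_point = (px, py): target from the low-level policy.
    """
    x, y, theta = frame
    px, py = local_point
    c, s = math.cos(theta), math.sin(theta)
    # world = R(theta) @ local + t
    return (x + c * px - s * py, y + s * px + c * py)

# The same low-level output placed in two different high-level frames:
print(frame_to_world((0.0, 0.0, 0.0), (0.1, 0.0)))          # (0.1, 0.0)
print(frame_to_world((0.5, 0.2, math.pi / 2), (0.1, 0.0)))  # approx (0.5, 0.3)
```

Because the low-level output is relative to the frame, moving or rotating the frame moves or rotates the resulting world-frame action with it, which is the intuition behind passing symmetry from the high level down to the low level.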

Honored to receive my first best paper nomination from @corl_conf! Had such a great time at #CoRL2024, huge thanks to the organizing committee and all my co-authors: Steve, David, @tarikkelestemur, @HaojieHuang13, @ZhaoHaibo47588, Mark, JW, @RobinSFWalters, @RobotPlatt!

Introducing Equivariant Diffusion Policy, a novel, sample-efficient BC algorithm based on equivariant diffusion. Our method leverages the symmetry in policy denoising to boost learning, needing 5x less training data in sim and mastering complex real-world tasks with <60 demos.
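
"Leveraging symmetry" here refers to equivariance: transforming the input transforms the output in the same way, f(g·x) = g·f(x). The toy check below illustrates that definition for planar rotations using a deliberately trivial, hypothetical policy (`toy_policy`). Equivariant Diffusion Policy builds this constraint into a diffusion denoiser, which this sketch does not attempt.

```python
import math

def rotate(point, theta):
    """Rotate a 2D point by angle theta about the origin."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y, s * x + c * y)

def toy_policy(points):
    """Hypothetical toy policy: move toward the centroid of observed points.

    Averaging is linear, so it commutes with rotations, i.e. it is
    SO(2)-equivariant: policy(g . obs) == g . policy(obs).
    """
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

obs = [(1.0, 0.0), (0.0, 2.0), (-0.5, 0.5)]
theta = math.pi / 3
lhs = toy_policy([rotate(p, theta) for p in obs])  # act on the rotated observation
rhs = rotate(toy_policy(obs), theta)               # rotate the original action
print(all(abs(a - b) < 1e-9 for a, b in zip(lhs, rhs)))  # True
```

An equivariant model gets the rotated cases "for free" once it has learned one orientation, which is the intuition behind needing fewer demonstrations.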

Perfect start to the #CoRL2024 week! It was a pleasure organizing the NextGen Robot Learning Symposium at @TUDarmstadt with @firasalhafez and @GeorgiaChal. Thanks to the speakers for the great talks! @YunzhuLiYZ @NimaFazeli7 @Dian_Wang_ @HaojieHuang13 @Vikashplus @ehsanik @Oliver_Kroemer

Paper link: https://t.co/eTJd2HnNSv Website: https://t.co/5zMSaGgJop Coauthors: @Dian_Wang_, @BizaOndrej, @RubyFreax, @SeanLiu7081, @SaulBadman12, @RobotPlatt, @RobinSFWalters

arxiv.org

Humans can imagine goal states during planning and perform actions to match those goals. In this work, we propose Imagination Policy, a novel multi-task key-frame policy network for solving...

#CoRL2024 Imagination Policy: Using Generative Point Cloud Models for Learning Manipulation Policies, led by @HaojieHuang13. A multi-task key-frame policy that generates ("imagines") key poses and performs precise, sample-efficient manipulation. Presenting at Poster Session 4.

Paper link: https://t.co/GHdh0GeJVh Website: https://t.co/Uwn6p9bBWM Coauthors: @XupengZ, @BizaOndrej, Shuo Jiang, @LinfengZhaoZLF, @HaojieHuang13, @yuqi_Beijing, @RobotPlatt

#CoRL2024 ThinkGrasp: A Vision-Language System for Strategic Part Grasping in Clutter, by @RubyFreax. A plug-and-play vision-language grasping system that uses GPT-4o's advanced contextual reasoning to devise grasping strategies in heavily cluttered environments. Presenting at Poster Session 3.

Paper link: https://t.co/FAzp7UsgHz Website: https://t.co/bcpRSOBjeg Coauthors: Stephen Hart, David Surovik, @tarikkelestemur, @HaojieHuang13, @ZhaoHaibo47588, Mark Yeatman, Jiuguang Wang, @RobinSFWalters, @RobotPlatt

arxiv.org

Recent work has shown diffusion models are an effective approach to learning the multimodal distributions arising from demonstration data in behavior cloning. However, a drawback of this approach...

#CoRL2024 Equivariant Diffusion Policy, led by @Dian_Wang_. A sample-efficient BC algorithm based on equivariant diffusion. It leverages symmetry to boost learning, needing 5x less training data and mastering complex tasks with <60 demos. Presenting at Oral Session 1 and Poster Session 2.

Presenting at Poster Session 2. Paper link: https://t.co/6SmiY2tupo Coauthors: Long Dinh Van The, @cjdamato, @RobotPlatt

#CoRL2024 Leveraging Mutual Information for Asymmetric Learning under Partial Observability, led by @HaiNguy69482974. It addresses asymmetric learning under partial observability (where the state is available during training) by rewarding actions that lead to histories that gain information about the state.
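
To make "rewarding actions that lead to histories that gain information about the state" concrete, the sketch below computes a generic information-gain bonus: the drop in entropy of a discrete belief after a Bayesian update. The names `entropy`, `bayes_update`, and `info_gain_reward` and the two-state example are hypothetical; the paper's actual mutual-information objective may be formulated differently.

```python
import math

def entropy(belief):
    """Shannon entropy (nats) of a discrete belief over hidden states."""
    return -sum(p * math.log(p) for p in belief if p > 0)

def bayes_update(belief, likelihood):
    """Posterior b'(s) proportional to P(o | s) * b(s) for one observation o."""
    unnorm = [b * l for b, l in zip(belief, likelihood)]
    z = sum(unnorm)
    return [u / z for u in unnorm]

def info_gain_reward(belief, likelihood):
    """Hypothetical bonus: entropy reduction caused by the new observation."""
    posterior = bayes_update(belief, likelihood)
    return entropy(belief) - entropy(posterior), posterior

# Two hidden states, uniform prior; an informative observation
# (P(o|s0)=0.9, P(o|s1)=0.1) sharpens the belief and earns a positive bonus.
r, post = info_gain_reward([0.5, 0.5], [0.9, 0.1])
print(round(r, 4), [round(p, 2) for p in post])  # 0.3681 [0.9, 0.1]
```

A single surprising observation can increase entropy, so this per-step quantity can be negative; mutual information is its expectation over observations, which is always non-negative.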

Paper link: https://t.co/TLeEHONTtv Website: https://t.co/DH4QD2f9Ue Coauthors: @XupengZ, @Dian_Wang_, Zihao Dong, @HaojieHuang13, @330781570, @RobinSFWalters, @RobotPlatt

Excited to share our #CoRL2024 paper: OrbitGrasp, a fully SE(3)-equivariant grasp detection method for 6-DoF grasping! arxiv:

Grasp detection is crucial for robotic manipulation but remains challenging in SE(3). We introduce our #CoRL2024 paper: OrbitGrasp, an SE(3)-equivariant grasp learning framework using spherical harmonics for 6-DoF grasp detection. 🌐 https://t.co/HzWP5OghY3

Join us on Monday, October 14th at 2 pm (UTC+4) at the #IROS2024 Workshop on Equivariant Robotics. A great lineup of keynote speakers will discuss how symmetry permeates every subfield of robotics. Website and Zoom link: https://t.co/841TWqfvWq

We have released the YouTube recording of our #RSS2024 workshop on "Geometric and Algebraic Structure in Robot Learning"! 🎥Youtube: https://t.co/wCgGY9Bi3P 🏠Webpage:

sites.google.com

Introduction Most robotic applications, including manipulation, navigation and locomotion, require our robots to interact with the physical world, which is rich in geometric and algebraic structures....

🤖 Excited for #RSS2024? Don’t miss our workshop on "Geometric and Algebraic Structure in Robot Learning"! Submit workshop papers (by 6/10 AOE) and dive into discussions on leveraging these structures for enhanced #robotics. 🚀🔍 Join us on 07/19 in Delft, Netherlands!

Instead of inferring a desired object pose directly, this method "imagines" a reconstruction of the entire scene in the target pose. Surprisingly, we find that this improves sample efficiency, even though we are inferring more information.

Check out our new work, Imagination Policy. We leverage a point cloud diffusion model to “imagine” a target scene, then use SVD to calculate the rigid transformations that bring objects into the imagined scene as robot actions. More importantly, Imagination Policy is bi-equivariant!
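
Recovering a rigid transformation with SVD is a classic least-squares fit (the Kabsch procedure). The NumPy sketch below shows that standard technique; it assumes point correspondences between an object's current points and its points in the imagined scene are already known, which is an assumption of this sketch rather than a detail stated here. The function name `rigid_transform_svd` is hypothetical.

```python
import numpy as np

def rigid_transform_svd(src, dst):
    """Least-squares rotation R and translation t with R @ src_i + t ~= dst_i.

    src, dst: (N, 3) arrays of corresponding points, e.g. an object's current
    points and the same points in the "imagined" scene (correspondence assumed).
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Reflection guard: force det(R) = +1 so R is a proper rotation
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t

# Usage: recover a known pose from noise-free correspondences.
rng = np.random.default_rng(0)
src = rng.normal(size=(50, 3))
theta = np.pi / 4
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.1, -0.2, 0.3])
dst = src @ R_true.T + t_true
R, t = rigid_transform_svd(src, dst)
print(np.allclose(R, R_true), np.allclose(t, t_true))  # True True
```

The reflection guard matters in practice: without it, noisy or near-planar point sets can yield an orthogonal matrix with determinant -1, which is not a physically valid rotation.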