Digital and Fintech Analytics

@EcomMarketFix_

Followers

52

Following

785

Media

14

Statuses

336

Unlock effective ecommerce strategies, optimize marketing efforts, and solve real-world business challenges with data insights using Python ,maths and Big Data

Joined January 2019

Doctors expected stents to reduce stroke risk — but a major study found the opposite. How statistics was used to decode what's true? You will learn how treatment and control groups are created and its importance. https://t.co/lhgDtZ0Jjn

0

0

0

Quickly turn a GitHub repository into text for LLMs with Gitingest ⚡️ Replace "hub" with "ingest" in any GitHub URL for a text version of the codebase.

63

391

3K

I wish I had had this animation when teaching Markov chain Monte Carlo. Could also help think about the behaviour of deep learning optimisation with non-vanishing gradients.

We often think of an "equilibrium" as something standing still, like a scale in perfect balance. But many equilibria are dynamic, like a flowing river which is never changing—yet never standing still. These dynamic equilibria are nicely described by so-called "detailed balance"

3

34

273

PQN is the best thing that has happened to model-free RL in a while, and people haven't realized it yet 🚀⚡️

@creus_roger just implemented a @cleanrl_lib Parallel Q-Networks algorithm (PQN) implementation! 🚀PQN is DQN without a replay buffer and target network. You can run PQN on GPU environments or vectorized environments. E.g., in envpool, PQN gets DQN's score in 1/10th the time

5

11

43

Nice collection of LLM papers, blogs, and projects, focussing on OpenAI o1 and reasoning techniques. What it offers: 📌 Curates papers, blogs, talks, and Twitter discussions about OpenAI's o1 and LLM reasoning 📌 Tracks frontier developments in LLM reasoning capabilities and

3

105

462

@sama getting this to #1 should be top prio. when you type SearchGPT into the extension store it spits out a bunch of garbage https://t.co/S4OXiaHkk6

chromewebstore.google.com

Change default search engine to ChatGPT search.

5

10

192

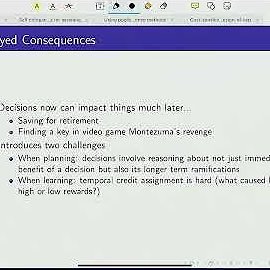

Video lectures, Stanford CS 234 Reinforcement Learning spring 2024, by Emma Brunskill https://t.co/YljhHIHP2S

https://t.co/K57tRfFp5v

youtube.com

To realize the dreams and impact of AI requires autonomous systems that learn to make good decisions. Reinforcement learning is one powerful paradigm for doi...

5

91

471

Must-read papers for LLM-based agents. A nice collection in this Github

5

154

820

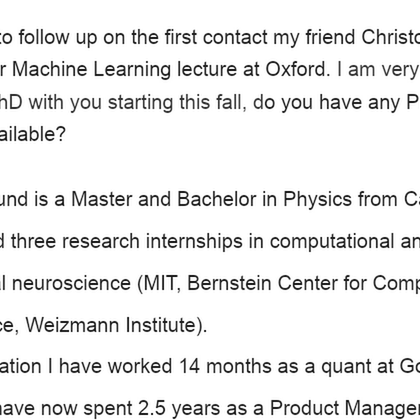

Cold emails are hard and good ones can change a life. Here is my email to @NandoDF that started my career in ML (at the time I was a PM at Google) https://t.co/ILaOtGQvbD Real effort (incl feedback) went into drafting it. Thanks to @EugeneVinitsky for nudging me to put it online

docs.google.com

Subject Follow-up: PhD positions this fall Body Hi Nando, I would like to follow up on the first contact my friend Christoph made today in your Machine Learning lecture at Oxford. I am very interes...

16

61

744

✨Announcing 5 incredible tutorials at @LogConference 2024: 1. Geometric Generative Models by @bose_joey, @AlexanderTong7, @helibenhamu 2. Neural Algorithmic Reasoning II: from Graphs to Language by @PetarV_93, Olga Kozlova, @fedzbar, Larisa Markeeva, Alex Vitvitskyi, @_wilcoln

1

24

95

Already on HF: https://t.co/WR4RwqRVZo!

huggingface.co

Introducing Mochi 1 preview. A new SOTA in open-source video generation. Apache 2.0. magnet:?xt=urn:btih:441da1af7a16bcaa4f556964f8028d7113d21cbb&dn=weights&tr=udp://tracker.opentrackr.org:1337/announce

7

21

190

📣Check out our #NeurIPS24 paper Geometric Trajectory Diffusion Models (GeoTDM), a new diffusion-based generative model that captures the temporal evolution of the ubiquitous geometric systems!! Paper: https://t.co/wy8lQGxaNe Code: https://t.co/6FW1UiRCZV 🧵1/8

1

35

146

I just spent my Saturday writing >5000 words fully documenting every single feature of FastHTML handlers. So please, read it, because I went slightly crazy doing this and I need to believe this helps someone out there...🤪 https://t.co/aHADwfspo7

38

80

923

Since a number of people asked, this is what I mean by deep learning way of understanding reinforcement learning. It's all about one question: how can I differentiate through the total reward my agent is collecting? The total reward (i.e., the value function) is the objective

9

37

302