Gantavya Bhatt

@BhattGantavya

Followers

695

Following

6K

Media

37

Statuses

1K

Ph.D. Student @UW, @nvidia, working in data-efficient ML. Prev: @amazonscience, undergrad @iitdelhi. Passionate Photographer into Alpinism!

Redmond, WA

Joined June 2018

Happy to announce about our recent work - "Deep Submodular Peripteral Networks" on learning parametric family of submodular functions is accepted into #NeurIPS2024 as spotlight 🥳📷! Joint work with @arnaved and @jbilmes! Looking forward to Vancouver & Cascades! Thread soon🧵!.

4

5

31

RT @stochasticlalit: It was amazing to be part of this effort. Huge shout out to the team, and all the incredible pre-training and post-tra….

deepmind.google

Our advanced model officially achieved a gold-medal level performance on problems from the International Mathematical Olympiad (IMO), the world’s most prestigious competition for young...

0

8

0

RT @RafaelValleArt: 🤯 Audio Flamingo 3 is out already. and that's before Audio Flamingo 2 makes its debut at ICML on Wednesday, July 16 a….

0

16

0

RT @jifan_zhang: Releasing HumorBench today. Grok 4 is🥇 on this uncontaminated, non-STEM humor reasoning benchmark. 🫡🫡@xai . Here are coupl….

0

3

0

RT @orevaahia: 🎉 We’re excited to introduce BLAB: Brutally Long Audio Bench, the first benchmark for evaluating long-form reasoning in audi….

0

45

0

RT @ReubenNarad: Whoa. Grok 4 beats o3 on our never-released benchmark: HumorBench, a non-STEM reasoning benchmark that measures humor co….

0

5

0

RT @Harman26Singh: 🚨 New @GoogleDeepMind paper. 𝐑𝐨𝐛𝐮𝐬𝐭 𝐑𝐞𝐰𝐚𝐫𝐝 𝐌𝐨𝐝𝐞𝐥𝐢𝐧𝐠 𝐯𝐢𝐚 𝐂𝐚𝐮𝐬𝐚𝐥 𝐑𝐮𝐛𝐫𝐢𝐜𝐬 📑.👉 We tackle reward hac….

0

30

0

RT @percyliang: Wrapped up Stanford CS336 (Language Models from Scratch), taught with an amazing team @tatsu_hashimoto @marcelroed @neilbba….

0

576

0

RT @marksibrahim: A good language model should say “I don’t know” by reasoning about the limits of its knowledge. Our new work AbstentionBe….

0

18

0

RT @rohanpaul_ai: It’s a hefty 206-page research paper, and the findings are concerning. "LLM users consistently underperformed at neural,….

0

3K

0

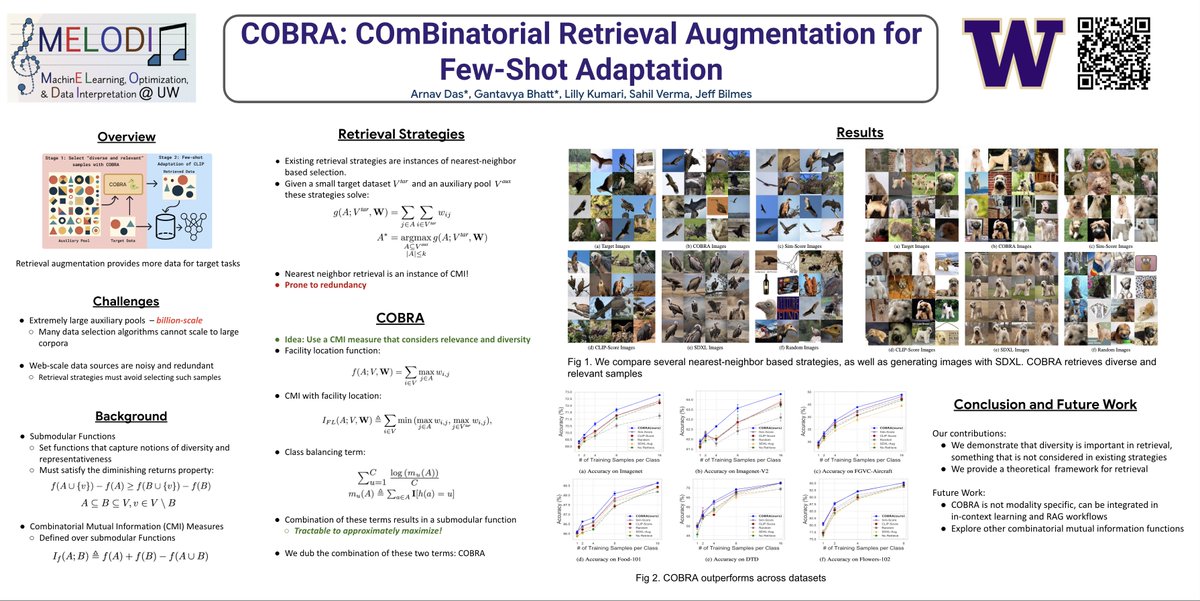

RT @Sahil1V: Using retrieval? --> check out this work by my awesome collaborator on how to increase diversity when retrieving!.

0

2

0

RT @tadityasrinivas: The Matryoshka🪆wave strikes again! .🚀 Excited to share our latest work, accepted to KDD 2025: Matryoshka Model Learnin….

0

4

0

RT @IJCAIconf: Announcing the 2025 IJCAI Computers and Thought Award winner ✨Aditya Grover @adityagrover_, @InceptionAILabs @UCLA. Dr. Grov….

0

12

0

This is an interesting work with unified embedding space in mind!.

🚨 New Paper! 🚨.Guard models slow, language-specific, and modality-limited?. Meet OmniGuard that detects harmful prompts across multiple languages & modalities all using one approach with SOTA performance in all 3 modalities!! while being 120X faster 🚀.

0

2

5

RT @gavinrbrown1: I'm excited to announce that I will join @WisconsinCS as an assistant professor this fall! Time to get to it.

0

7

0

RT @WenhuChen: Very interesting analysis!. However, we found that that you can actually achieve the same performance on the same ONE exampl….

0

28

0

RT @kuchaev: NeMo RL is now open source! It replaces NeMo-Aligner and is the toolkit we use to post train next generations of our models. G….

github.com

Scalable toolkit for efficient model reinforcement - NVIDIA-NeMo/RL

0

65

0