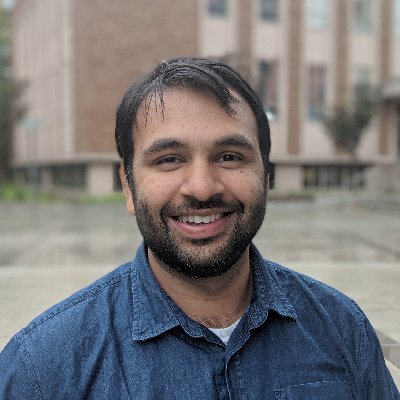

Jifan Zhang

@jifan_zhang

Followers

329

Following

306

Media

17

Statuses

190

Research Fellow @AnthropicAI | Ph.D. @WisconsinCS @WIDiscovery | Previously BS/MS @uwcse, @Meta @Google @Amazon

Joined April 2017

RT @AnthropicAI: Today we're releasing Claude Opus 4.1, an upgrade to Claude Opus 4 on agentic tasks, real-world coding, and reasoning. htt….

0

1K

0

RT @AnthropicAI: New Anthropic research: Persona vectors. Language models sometimes go haywire and slip into weird and unsettling personas….

0

924

0

RT @AnthropicAI: We’re running another round of the Anthropic Fellows program. If you're an engineer or researcher with a strong coding o….

0

187

0

RT @TmlrPub: Deep Active Learning in the Open World. Tian Xie, Jifan Zhang, Haoyue Bai, Robert D Nowak. Action editor: Vincent Fortuin. h….

openreview.net

Machine learning models deployed in open-world scenarios often encounter unfamiliar conditions and perform poorly in unanticipated situations. As AI systems advance and find application in...

0

1

0

RT @FabienDRoger: Very cool result! I would have not predicted that when the model inits are the same, distillation transmits so much hidde….

0

1

0

RT @saprmarks: Subliminal learning: training on model-generated data can transmit traits of that model, even if the data is unrelated. Thi….

0

22

0

RT @lyang36: 🚨 Olympiad math + AI: We ran Google’s Gemini 2.5 Pro on the fresh IMO 2025 problems. With careful prompting and pipeline desi….

0

118

0

RT @stochasticlalit: It was amazing to be part of this effort. Huge shout out to the team, and all the incredible pre-training and post-tra….

deepmind.google

Our advanced model officially achieved a gold-medal level performance on problems from the International Mathematical Olympiad (IMO), the world’s most prestigious competition for young...

0

8

0

RT @ajwagenmaker: How can we train a foundation model to internalize what it means to “explore”? Come check out our work on “behavioral ex….

0

52

0

This work is driven by my first and amazing undergrad advisee Shyam Nuggehalli. Also in collaboration with @stochasticlalit and @rdnowak. An implementation of the algorithm is in the LabelBench repo:

github.com

Contribute to EfficientTraining/LabelBench development by creating an account on GitHub.

0

0

2

The algorithm is also noise tolerant and supports batch labeling, a significant improvement over my previous algorithm GALAXY.

Have limited labeling budget for training neural networks and the underlying data is too unbalanced? Check out our ICML 2022 paper “GALAXY: Graph-based Active Learning at the Extreme”. Joint work w/ @JulianJKS and @rdnowak. (1/6)

1

0

1